Intel and Baidu Smart Cloud Work Together to Accelerate the Intelligent Transformation in the AI Era

At the 2024 Baidu Cloud Intelligence Conference, held on September 25-26, 2024, Intel took part as co-organizer and brought a full-stack AI hardware and software solution. It explained in depth how to efficiently deploy and run large language models on Intel® Xeon® processors and a new generation of cost-effective accelerator cards. It also demonstrated a series of optimization strategies and the Open Platform for Enterprise AI (OPEA), an open-architecture AI software stack for enterprise customers, to help enterprises accelerate AI adoption and maximize the computing performance of their entire AI infrastructure.

Baidu Smart Cloud and Intel have long cooperated closely on products and technologies, with notable results in cloud computing, big data, optimization of the PaddlePaddle deep learning framework, vehicle-road collaboration, edge computing, and other fields.

Now, follow along with the editor for a look at the highlights of the event!

Intel took the stage at the main forum of the conference to share its business insights and technology outlook on how AI drives industry "core power"

Main Forum Speech

AI drives industry "core power"

"To embrace the new productivity represented by AI, Intel and Baidu have worked together to build modern infrastructure for cloud data centers, continuously optimize software, and deepen their efforts in areas such as green and sustainable development. Based on years of cooperation, the two parties will continue to create diversified solutions through continuous technological innovation in the future to provide customers from all walks of life with better services and experience."

—— Liang Yali

Vice President of Intel Marketing Group

General Manager of Cloud and Industry Solutions and Data Center Sales, China

After the main forum, Intel's special session focused on how to inject "core power" into the AI industry. Covering everything from advanced technologies and products to joint solutions, it gave a comprehensive introduction to Intel's AI hardware and software portfolio, as well as the latest results of its cooperation with the Baidu Smart Cloud Qianfan Large Model Platform.

Intel Special Session

-

Opening Remarks

Hu Kai, Internet Industry Director, Intel Cloud and Industry Solutions Group

Different industries have diverse demands for AI computing power, with differing requirements for processor frequency, bandwidth, and so on. From cloud to edge to client, Intel continues to provide a comprehensive portfolio of products and technologies for the AI industry, and works with a wide range of partners to give end customers more economical and diversified computing power.

-

CPU-based large language model inference: implementation on the Baidu Smart Cloud Qianfan Large Model Platform

Chen Xiaoyu, Senior R&D Engineer, Baidu Smart Cloud

The widespread application of large models across industries has driven a new round of industrial revolution, and has also posed severe challenges for AI computing power. As a leading artificial intelligence cloud service platform in China, the Baidu Smart Cloud Qianfan Large Model Platform provides developers with a rich selection of large models and supporting software tools for model and application development, helping users build a variety of intelligent applications.

To improve CPU-based LLM inference performance, Baidu Smart Cloud adopted Intel® Xeon® Scalable processors and their built-in Intel® Advanced Matrix Extensions (Intel® AMX), combined with the large model inference acceleration solution xFasterTransformer (xFT), to help users accelerate CPU-based LLM inference on the Qianfan Large Model Platform. The platform has already produced strong application cases in scenarios such as education, office work, and medical care.

-

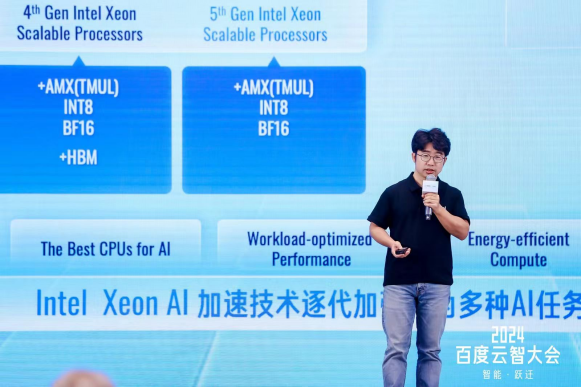

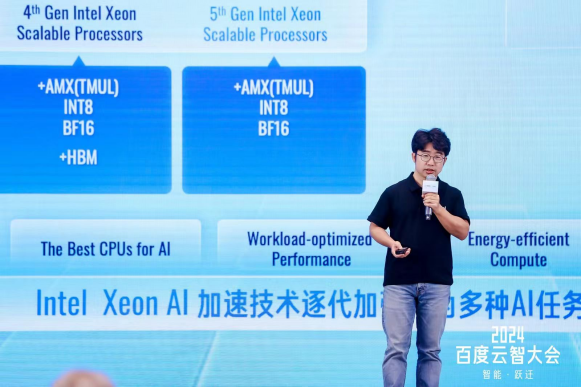

xFT unlocks Xeon® computing power and unleashes AI potential

Miao Jincheng, Senior Software Engineer at Intel

Intel is committed to delivering complete hardware and software solutions for AI. Xeon® Scalable processors equipped with Intel® AI Engines improve the performance of AI applications out of the box. The 4th and 5th Gen Intel® Xeon® Scalable processors have a built-in AI accelerator, Intel® Advanced Matrix Extensions (Intel® AMX), which consists of a set of 2D register files (TILE) and a tile matrix multiply unit (TMUL). It supports two data types, INT8 and BF16, and can effectively accelerate deep learning training and inference workloads.

As a fully optimized open source LLM inference framework, xFT supports distributed operation across multiple machines and nodes and provides both C++ and Python APIs, making it easier for users to adopt it and integrate it into their own business frameworks. xFT also supports a variety of low-precision data types and many of the mainstream large models on the market.
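Whether a given machine exposes this accelerator can be checked from the operating system. The snippet below is a minimal sketch, assuming a Linux host, where the kernel lists the `amx_tile`, `amx_bf16`, and `amx_int8` feature flags in `/proc/cpuinfo`. The helper names `amx_support` and `read_cpuinfo` are hypothetical and were not part of the talk.

```python
# Linux reports AMX support through these CPU feature flags in /proc/cpuinfo.
AMX_FLAGS = ("amx_tile", "amx_bf16", "amx_int8")


def amx_support(cpuinfo_text: str) -> dict[str, bool]:
    """Return which AMX feature flags appear in the given cpuinfo text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only the "flags" lines list CPU features; other keys are skipped.
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return {name: name in flags for name in AMX_FLAGS}


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read cpuinfo, returning an empty string where the file is unavailable."""
    try:
        with open(path, encoding="utf-8") as handle:
            return handle.read()
    except OSError:
        return ""


if __name__ == "__main__":
    for name, present in amx_support(read_cpuinfo()).items():
        print(f"{name}: {'yes' if present else 'no'}")
```

On hosts without `/proc/cpuinfo` the sketch simply reports every flag as absent, so it only gives a meaningful answer on Linux.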

-

Full-stack implementation of an enterprise GenAI open platform based on retrieval-augmented generation

Guo Bin, Senior Cloud Computing Software Architect at Intel

Although retrieval-augmented generation (RAG) is not a new technique, its application potential in enterprises is becoming increasingly prominent as the capabilities of large language models grow. Enterprises face both challenges and opportunities in putting AI into practice. Since generative AI took the market by storm in particular, the industry's focus has shifted from model pre-training to specific application deployment.

OPEA (Open Platform for Enterprise AI) is an open source project initiated by Intel and donated to the Linux Foundation. It aims to build an open AI software ecosystem, use generative AI to help enterprises unlock the value of their data and support their own business growth, and reduce ecosystem complexity so that solutions can scale.
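For readers unfamiliar with the pattern, the core RAG loop (retrieve relevant passages, then place them in the prompt) can be sketched in a few lines. This is an illustrative toy under stated assumptions, not the OPEA implementation: a bag-of-words cosine similarity stands in for an embedding model and vector database, the knowledge base is invented, and the final call to a language model is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens (a toy stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = tokenize(query)
    ranked = sorted(documents, key=lambda doc: cosine(query_vec, tokenize(doc)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved passages and the question into a single prompt string."""
    passages = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only the context below.\n\nContext:\n{passages}\n\nQuestion: {query}"


# Hypothetical enterprise knowledge base, invented for illustration.
DOCUMENTS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client is required for remote access to internal systems.",
    "Annual leave requests need manager approval two weeks in advance.",
]

if __name__ == "__main__":
    question = "How do I get remote access to internal systems?"
    print(build_prompt(question, retrieve(question, DOCUMENTS, top_k=1)))
```

In a real deployment the prompt returned by `build_prompt` would be sent to a large language model, and the retriever would typically query a vector index rather than rescoring every document.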

-

Cost-effective solution for large models

Mu Yanfeng, Software Technology Manager, Intel

The development of large models is in full swing. As model parameter counts grow rapidly, enterprises have an increasingly urgent need for more computing power. To extract value from large models, enterprises need to improve system scalability while avoiding lock-in to a single GPU option, and to control costs effectively while improving business efficiency.

The Intel® Gaudi® 2 AI accelerator is designed for generative AI and large models, and aims to provide high-performance, efficient generative AI computing. As a fully programmable high-performance AI accelerator, it integrates many technological innovations and offers high memory bandwidth and capacity, along with scale-out capabilities based on standard Ethernet. Intel also provides an end-to-end AI software stack, with the Intel® Gaudi® Software Suite at its core, that puts the development and deployment of AI models on the fast track.

In addition to the Intel session, Intel technical experts also shared valuable insights on large models and cloud native topics.

Special Forum on Large Model Platform Technology Practice

-

Unlocking the potential of Xeon®: a new choice for large model inference

Guo Bin, Senior Cloud Computing Software Architect at Intel

The demand for computing power driven by generative AI continues to increase. Faced with problems such as GPU shortages and high prices, companies need to find new options that can keep supplying sufficient computing power for generative AI while offering both scalability and availability.

Facing the AI era, the Intel® Xeon® platform continues to update and iterate, adding AI acceleration engines in addition to the continuous improvement of computing power. Using the Intel® Advanced Matrix Extensions provided by Intel® Xeon® Scalable Processors, users can more fully tap the potential of the CPU and more easily obtain the performance required for AI workloads.

Cloud Native Forum

-

Data security in the era of large models: the key role of Xeon® processors and confidential computing

Song Chuan, Chief Engineer of Intel Data Center and Artificial Intelligence Division

In the era of large models, computing power is critical, but data security and data circulation cannot be ignored. As large model applications continue to expand across industries, the amount of data is growing explosively. Achieving orderly data circulation and strengthening data security protection are crucial to the continued advancement of large model technology.

Intel® Xeon® Scalable processors are equipped with a variety of security engines that provide hardware-based confidential computing solutions and better protect data privacy while making full use of data. Among them, Intel® SGX provides application-level security isolation, while Intel® TDX provides virtual machine-level security isolation.

Finally, follow the editor on an "online" visit to the Intel exhibition area and get a feel for the atmosphere on site!

Intel exhibited a wide range of products and solutions around three hot topics: large model optimization and deployment, the latest Intel® Xeon® 6 processors, and the AI PC.

Large model optimization and deployment

-

Unlocking the potential of Xeon®: a new choice for Qianfan inference

-

Secure and Trusted LLM Inference Service: Application of Intel® TDX in Heterogeneous Computing

-

LLM Inference Solution Based on Intel® Arc™ GPU

Intel® Xeon® 6

-

Intel® Xeon® 6 processors provide extreme performance

AI PC

-

OpenVINO™ Toolkit and Intel AI PC Accelerate AIGC Technology and Applications

-

Playing Black Myth: Wukong and running a variety of AI applications on an AI PC

Building on more than ten years of technical cooperation, Intel will continue to work with Baidu Smart Cloud to jointly promote generative AI (GenAI) technology innovation and industry adoption through full-stack computing power, powerful AI acceleration capabilities, and a complete end-to-end partner ecosystem, turning technical capabilities into productivity and serving the intelligent transformation of thousands of industries!

For more information about Intel® Xeon® solutions for AI acceleration, please click "Read original article".

© Intel Corporation. Intel, the Intel logo, and other Intel trademarks are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries. Other names and brands may be claimed as the property of others.



京公网安备 11010802033920号