Today, we are not talking about hardware, but about the path.

In the push toward autonomous driving, ADAS applications are developing rapidly, and demand is rising for the microcontrollers, sensor chips, and graphics processors built into smart cars. This in turn drives semiconductor manufacturers to launch highly integrated, high-performance products that meet automotive standards.

Automotive supply chain players mobilize to realize the vision of self-driving cars

In the 1993 movie Demolition Man, almost all the cars were self-driving, but of course they were just concept cars. Today, more than a dozen leading automakers (including Audi, BMW, GM, Tesla, Volkswagen, and Volvo) are developing driverless cars. In addition, Google has developed its own car technology, and its cars recently drove more than 1 million miles (roughly what an average American adult would drive in 75 years) without any major accidents.

A prototype autonomous concept car appeared in the movie Demolition Man

Looking at these impressive results, many people may ask: how long will it be before we actually see driverless cars on the road?

To achieve this vision, the players in the complex automotive supply chain (semiconductor companies, system integrators, software developers, and automakers) are working closely together to develop the key advanced driver assistance system (ADAS) technologies for the first commercial driverless cars.

Introducing three major ADAS design methods

There are currently three main design methods for introducing ADAS functions into automobiles.

One approach is to store a large amount of map data so that the car can use it to navigate in a specific environment. This approach is like letting a train run on invisible tracks. One example is Google's driverless car, which navigates mainly with a set of pre-recorded HD street maps and relies comparatively little on sensing technology. In this example, the car depends on high-speed communication links in addition to its sensors, and it maintains a stable connection with the cloud to obtain the navigation coordinates it needs.

Google's driverless cars use a lot of map data to navigate
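The "invisible tracks" idea can be illustrated with a minimal sketch. It assumes a hypothetical pre-recorded route stored as (x, y) waypoints in metres in a local map frame; the route data and the function names are illustrative only and do not describe how Google's system actually works. The car finds the waypoint closest to its current position and steers toward the one after it.

```python
import math

# Hypothetical pre-recorded route: (x, y) waypoints in metres in a local map frame.
PRERECORDED_ROUTE = [(0.0, 0.0), (10.0, 0.0), (20.0, 5.0), (30.0, 15.0)]


def nearest_waypoint_index(position, route):
    """Return the index of the route waypoint closest to the given position."""
    return min(range(len(route)), key=lambda i: math.dist(position, route[i]))


def next_target(position, route):
    """Return the waypoint after the nearest one, or the last waypoint at the end of the route."""
    i = nearest_waypoint_index(position, route)
    return route[min(i + 1, len(route) - 1)]


def heading_to(position, target):
    """Heading in degrees from position to target (0 = +x axis, counter-clockwise positive)."""
    return math.degrees(math.atan2(target[1] - position[1], target[0] - position[0]))


if __name__ == "__main__":
    car = (11.0, 1.0)  # assumed current position, e.g. from GPS or localization
    target = next_target(car, PRERECORDED_ROUTE)
    print("steer toward", target, "at heading", round(heading_to(car, target), 1))
```

A real system would work with far denser maps, lane-level geometry, and continuous localization, but the lookup-then-follow structure is the point of the analogy.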

In contrast, the second approach uses computer vision processing and relies very little on pre-made maps. It replicates human driving, because the car makes real-time decisions based on multiple built-in sensors and high-performance processors. Such cars usually include several long-range cameras and use special high-performance, low-power chips that provide supercomputer-level processing capability to run ADAS software and hardware algorithms.

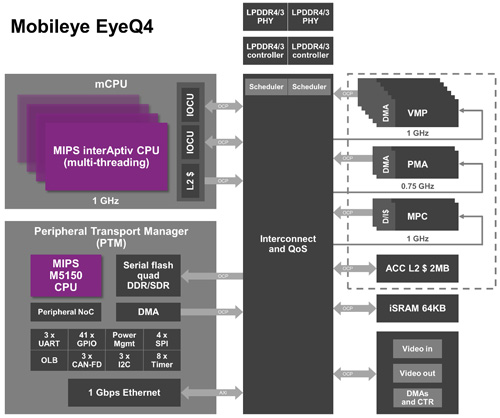

Take Mobileye, for example. The company has pioneered this technology, and its powerful, energy-efficient EyeQ SoC is designed specifically for driverless cars. The MIPS-based Mobileye EyeQ3 SoC, for instance, delivers the processing performance required for the highway automatic navigation function recently offered in Tesla electric vehicles. In this case, Mobileye uses a multi-core, multi-threaded MIPS I-class CPU to process the data streams from the car's multiple cameras.

In the figure below, the quad-core, quad-thread interAptiv CPU in the EyeQ4 SoC serves as the brain of the chip, directing the flow of information from the camera and other sensors to the VLIW function blocks on the right side of the chip with a processing speed of up to 2.5TFLOPS.

EyeQ4 SoC features quad-core, quad-thread interAptiv CPU

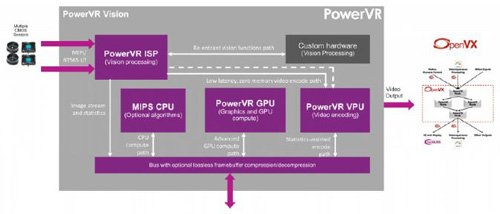

Finally, the third approach is to use general-purpose SoC processors to develop driverless cars. In cars that are already equipped with embedded GPUs to support infotainment systems, developers can use the computing resources of the graphics engine to run recognition and tracking algorithms for lanes, pedestrians, cars, building facades, parking lots, and so on. For example, Luxoft's computer vision and augmented reality solutions use Imagination's PowerVR imaging architecture and additional software to build ADAS functions.

PowerVR imaging architecture enables rapid implementation of a variety of in-car autonomous driving features

PowerVR Series7 GPU schematic

The software framework has been optimised for embedded PowerVR hardware, enabling rapid and cost-effective implementation of a wide range of in-car autonomous driving functions.
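To give a feel for what a tracking algorithm for pedestrians or cars involves, here is a minimal sketch of one common building block: matching bounding boxes between consecutive frames by intersection over union (IoU). It is plain Python running on the CPU, purely for illustration. It is not Luxoft's or Imagination's code, it uses no PowerVR API, and the function names, box format, and threshold are assumptions made for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_tracks(tracks, detections, threshold=0.3):
    """Greedily assign each track to the unused detection with the highest IoU.

    tracks: dict mapping track id -> box from the previous frame.
    detections: list of boxes from the current frame.
    Returns a dict mapping track id -> index of the matched detection.
    """
    matches, used = {}, set()
    for track_id, box in tracks.items():
        best, best_score = None, threshold
        for j, det in enumerate(detections):
            score = iou(box, det)
            if j not in used and score >= best_score:
                best, best_score = j, score
        if best is not None:
            matches[track_id] = best
            used.add(best)
    return matches


if __name__ == "__main__":
    previous = {"car": (0, 0, 10, 10), "pedestrian": (50, 50, 60, 70)}
    current = [(51, 50, 61, 70), (1, 0, 11, 10)]
    print(match_tracks(previous, current))
```

On an embedded GPU, the per-box overlap computations are the kind of independent, data-parallel work that maps well onto a graphics engine, which is why reusing the infotainment GPU for this purpose is attractive.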

Self-driving cars will bring unlimited business opportunities

Regardless of the design approach, driverless cars offer a bright future for automakers, sensor suppliers, and the semiconductor industry. These cars will benefit from advanced SoC modules and high-performance microcontrollers with hardware memory management, multithreading, and virtualization capabilities. These features will allow OEMs to build more complex software applications, including model-based process control, artificial intelligence, and advanced visual computing.
