The DirectShow application framework takes care of the underlying work of streaming media processing. Programmers therefore do not need to worry about how data is input, or how it is output after processing; they only need to concentrate on how the input data is processed. The H.264 video codec standard offers a high compression ratio and good network friendliness, and is widely regarded as the most influential streaming video compression standard. A streaming media player that combines DirectShow with H.264 can therefore be expected to perform very well.

1 Introduction to DirectShow Technology and H.264 Video Compression Standard

DirectShow is a streaming media development kit provided by Microsoft. It offers a complete solution for multimedia applications with demanding performance requirements on the Windows platform, such as playing media files in various formats and capturing audio and video.

DirectShow is a COM-based framework that runs in the application layer. It uses the filter graph (FilterGraph) model to manage the whole data-processing flow. Each functional module involved in processing the data is called a filter, and the filters are connected in a fixed order inside the FilterGraph to form a "pipeline" that works as a unit. Filters are connected to each other through pins. Both filters and pins are COM objects; users can implement their own filters, and the DirectShow SDK also provides a set of standard filters.
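To make the pipeline idea concrete, here is a conceptual model in plain C++. It is not the DirectShow API: there are no COM objects, pins, or media types, and `Filter`, `TaggingFilter`, and `FilterGraph` are hypothetical names used only to show samples flowing through filters connected in a fixed order.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Conceptual model only, NOT the DirectShow API: filters are chained in a
// fixed order and each sample passes through all of them.
struct Sample {
    std::string data;  // stand-in for a media sample's payload
};

class Filter {
public:
    explicit Filter(std::string name) : name_(std::move(name)) {}
    virtual ~Filter() = default;
    const std::string& Name() const { return name_; }
    // Processes one sample; the result goes to the next filter in the chain.
    virtual Sample Process(const Sample& in) = 0;

private:
    std::string name_;
};

// Hypothetical filter that appends its own name, making the order in which
// a sample travelled through the graph visible.
class TaggingFilter : public Filter {
public:
    using Filter::Filter;
    Sample Process(const Sample& in) override {
        return Sample{in.data + ">" + Name()};
    }
};

class FilterGraph {
public:
    // Appending a filter "connects" it downstream of the previous one.
    void Connect(std::unique_ptr<Filter> f) { chain_.push_back(std::move(f)); }
    std::size_t Size() const { return chain_.size(); }

    // Runs one sample through every filter in connection order.
    Sample Run(Sample s) const {
        for (const auto& f : chain_) s = f->Process(s);
        return s;
    }

private:
    std::vector<std::unique_ptr<Filter>> chain_;
};
```

Connecting "source", "splitter", "decoder", and "renderer" in that order and running a sample through the graph yields a payload tagged in exactly that order, which is the property the real FilterGraph guarantees through its pin connections.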

H.264 is an international video coding standard developed jointly by ITU-T and ISO/IEC through their Joint Video Team (JVT). Compared with earlier video coding standards, H.264/AVC significantly improves coding quality and compression ratio: at the same perceived visual quality, its coding efficiency is about 50% higher than that of H.263, MPEG-2 and MPEG-4. Besides its excellent compression performance, H.264 is also network-friendly, which is why it is generally regarded as the most influential streaming video compression standard.

2 System Design Framework

This system is built on the DirectShow application framework and the H.264 video compression standard. It receives streaming media data from the network and plays it in real time on the client. The streamed file is an AVI file whose video is encoded with H.264. Because DirectShow already provides the AVI Splitter filter, an audio decoder, and the standard video and audio renderers, the system only needs two custom filters: a network source filter and an H.264 decoding filter.

Multimedia streaming involves two areas of technology. The first is communication between the server and the client, including the transmission of multimedia data and command control; the second is the client-side decoding and real-time playback of the received multimedia stream. Network communication can be handled with Windows Sockets (Winsock), and decoding and playback with DirectShow. This paper adopts the DirectShow application framework, designs a network source filter and an H.264 decoding filter, and builds a streaming media player from them through a FilterGraph.

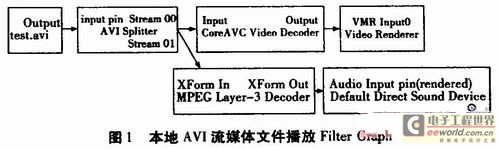

Figure 1 shows the FilterGraph for playing a local H.264-encoded AVI file. Replacing the local File Source filter with the network source filter, and the CoreAVC Video Decoder with the H.264 decoding filter, turns this graph into a network H.264 video player.

3 System Design and Implementation

3.1 Filter Design Process

Implementing a filter in code covers the filter's registration information, the filter's framework functions, the logic control class, custom interfaces, property pages, intellectual property protection, and so on.

First, analyze the functions the filter must perform and its position in the FilterGraph, in order to determine the filter model and choose a suitable base class. Next, define the input and output pins and the custom interfaces, and register the filter information. Finally, implement all pure virtual functions of the base class and the custom interface functions, and override the relevant base-class functions to customize the filter's behavior.

3.2 Design of Network Source Filter

The main function of the source filter is to receive streaming media data from the server and provide it to other filters in the FilterGraph.

Since the AVI Splitter that ships with DirectShow works in pull mode, the source filter must also work in pull mode.

This source filter uses a double-buffered circular queue to receive data and pass it to the downstream filter, for the following reasons:

(1) While the source filter is being connected to the splitter filter, the splitter reads part of the data from the source filter to obtain the format description of the stream; without it, the FilterGraph cannot complete the connection. A waiting thread should therefore be started before the source filter is connected to the splitter filter, and the complete FilterGraph is built only after the source filter's buffer has received enough data.

(2) Once the complete FilterGraph has been built and is running, the source filter must keep receiving data and continuously supply new data to the splitter filter. The double-buffered circular queue makes full use of the memory space and provides a stable data source for the splitter filter.

(3) The buffer queue can stabilize the bit rate and effectively reduce the impact of network delay, congestion and jitter.
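The waiting thread in point (1) can be sketched with a condition variable. `PrebufferGate` is a hypothetical helper, and because the article does not say how much data counts as "enough", the threshold is simply a constructor parameter.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <mutex>

// Hypothetical helper: the graph-building thread waits until the network
// receive thread has buffered at least `threshold` bytes, so that the
// splitter can read enough header data to complete the pin connection.
class PrebufferGate {
public:
    explicit PrebufferGate(std::size_t threshold) : threshold_(threshold) {}

    // Called by the socket receive thread after it stores `n` new bytes.
    void OnBytesReceived(std::size_t n) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            buffered_ += n;
        }
        cv_.notify_all();
    }

    // Called by the waiting thread. Returns true once enough data is
    // buffered, or false if `timeout` expires first (e.g. the server
    // stopped sending before the threshold was reached).
    bool WaitUntilReady(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mutex_);
        return cv_.wait_for(lock, timeout,
                            [this] { return buffered_ >= threshold_; });
    }

private:
    const std::size_t threshold_;
    std::size_t buffered_ = 0;
    std::mutex mutex_;
    std::condition_variable cv_;
};
```

Only after `WaitUntilReady` returns true would the waiting thread go on to connect the source filter's output pin to the splitter; a false result lets the player report a timeout instead of blocking forever.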

The source filter works as follows. A circular buffer queue is created: the tail pointer marks where data received from the network is written, and the head pointer marks where the splitter reads data, which it then separates into audio and video and passes to the downstream decoders. When the socket receives network data, the data is inserted at the tail of the queue and the tail pointer moves forward; when the splitter needs data, it reads from the head of the queue and the head pointer moves forward.
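The head/tail mechanism just described can be sketched as a byte-level circular queue. This is a minimal single-threaded sketch with hypothetical names; it does not model the double-buffering detail, and a real source filter would protect the queue with a lock because the socket thread and the splitter's pull thread access it concurrently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal byte circular queue (not thread-safe). Data from the socket is
// written at the tail; the splitter's reads consume it from the head.
class CircularQueue {
public:
    explicit CircularQueue(std::size_t capacity) : buf_(capacity) {}

    std::size_t Size() const { return size_; }
    std::size_t Free() const { return buf_.size() - size_; }

    // Appends up to `n` bytes at the tail; returns how many were stored.
    std::size_t Push(const std::uint8_t* data, std::size_t n) {
        n = std::min(n, Free());
        for (std::size_t i = 0; i < n; ++i) {
            buf_[tail_] = data[i];
            tail_ = (tail_ + 1) % buf_.size();  // move tail forward, wrapping
        }
        size_ += n;
        return n;
    }

    // Removes up to `n` bytes from the head; returns how many were copied.
    std::size_t Pop(std::uint8_t* out, std::size_t n) {
        n = std::min(n, size_);
        for (std::size_t i = 0; i < n; ++i) {
            out[i] = buf_[head_];
            head_ = (head_ + 1) % buf_.size();  // move head forward, wrapping
        }
        size_ -= n;
        return n;
    }

private:
    std::vector<std::uint8_t> buf_;
    std::size_t head_ = 0;  // next byte to read
    std::size_t tail_ = 0;  // next free slot to write
    std::size_t size_ = 0;  // bytes currently queued
};
```

Keeping an explicit `size_` counter avoids the classic ambiguity where `head_ == tail_` could mean either "empty" or "full".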

Streaming media transmission uses a client/server architecture, which raises the question of the socket communication protocol between server and client. Because streaming media is continuous, its synchronization points cannot be chosen arbitrarily, so the streaming data must be carried over a connection-oriented, reliable transport protocol (TCP), while control and feedback messages between client and server can be carried over UDP. The server first creates a listening socket for client connection requests. When a request arrives, the server creates a data-transmission socket bound to the requesting client and is then ready to transmit. When the client issues a command, the server acts according to the command type, for example sending data, stopping, or disconnecting.
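The server's command handling can be modelled as a small state machine. The command codes and state names below are assumptions (the article names the operations but not their encoding), and the socket calls themselves are left out so the logic stands on its own.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical command codes sent by the client; the article names the
// operations (send data, stop, disconnect) but not how they are encoded.
enum class Command : std::uint8_t { Send = 1, Stop = 2, Disconnect = 3 };

enum class SessionState { Connected, Streaming, Stopped, Closed };

// Server-side handling of one client session. The caller would push data
// packets over the data socket only while State() is Streaming.
class ServerSession {
public:
    SessionState State() const { return state_; }

    // Returns false if the command is not valid in the current state.
    bool Handle(Command cmd) {
        if (state_ == SessionState::Closed) return false;  // socket released
        switch (cmd) {
            case Command::Send:
                state_ = SessionState::Streaming;  // start or resume sending
                return true;
            case Command::Stop:
                if (state_ != SessionState::Streaming) return false;
                state_ = SessionState::Stopped;    // connection stays open
                return true;
            case Command::Disconnect:
                state_ = SessionState::Closed;     // close the data socket
                return true;
        }
        return false;
    }

private:
    // Initial state once the listening socket has accepted the client.
    SessionState state_ = SessionState::Connected;
};
```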

On the server side, the continuous H.264 stream is first divided into small packets of payload data, and a header is added to each packet before it is sent over TCP. On the client side, the payloads are reassembled according to the header descriptions, and the result is then decoded as H.264 and played. Each socket packet consists of a payload type (8 bits), a payload data length (16 bits), and the payload data (2324 bytes).
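A sketch of this packet format follows. It assumes the 16-bit length field is sent in network (big-endian) byte order and that 2324 bytes is the maximum payload, so the last packet of a stream may be shorter; the article specifies neither point. `Packetize` and `Reassemble` are hypothetical helper names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Layout from the text: type (8 bit) + length (16 bit) + payload.
// ASSUMPTIONS: big-endian length field; 2324 bytes is the MAXIMUM payload.
constexpr std::size_t kHeaderSize = 3;
constexpr std::size_t kMaxPayload = 2324;

// Server side: split a continuous stream into header-prefixed packets.
std::vector<std::uint8_t> Packetize(std::uint8_t type,
                                    const std::vector<std::uint8_t>& stream) {
    std::vector<std::uint8_t> out;
    for (std::size_t pos = 0; pos < stream.size(); pos += kMaxPayload) {
        const std::size_t len = std::min(kMaxPayload, stream.size() - pos);
        out.push_back(type);
        out.push_back(static_cast<std::uint8_t>(len >> 8));    // high byte
        out.push_back(static_cast<std::uint8_t>(len & 0xFF));  // low byte
        out.insert(out.end(), stream.begin() + pos, stream.begin() + pos + len);
    }
    return out;
}

// Client side: walk the bytes header by header and concatenate payloads.
// The type byte is skipped here; a real client would dispatch on it.
std::vector<std::uint8_t> Reassemble(const std::vector<std::uint8_t>& wire) {
    std::vector<std::uint8_t> stream;
    std::size_t pos = 0;
    while (pos < wire.size()) {
        if (wire.size() - pos < kHeaderSize)
            throw std::runtime_error("truncated header");
        const std::size_t len = (std::size_t{wire[pos + 1]} << 8) | wire[pos + 2];
        pos += kHeaderSize;
        if (wire.size() - pos < len)
            throw std::runtime_error("truncated payload");
        stream.insert(stream.end(), wire.begin() + pos, wire.begin() + pos + len);
        pos += len;
    }
    return stream;
}
```

Since TCP delivers a byte stream without message boundaries, the length field is what lets the client find where one payload ends and the next header begins.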

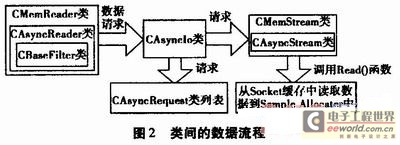

The client implementation can follow the MemFile sample filter in the SDK. Copy the four files asyncrdr.cpp, asyncrdr.h, asyncio.cpp and asyncio.h directly from the SDK sample. Then, in MemFilter.h, derive the stream class CMemStream from CAsyncStream to customize the data source and perform the read operations, and derive the filter class CMemReader from CAsyncReader to implement a source filter framework with one output pin that connects to the splitter filter.

Figure 2 shows the data flow between the classes of the network source filter, and Figure 3 shows the data flow of the downstream filter's "pull" thread. CAsyncStream represents the data stream, CAsyncRequest represents an input/output request, and CAsyncIo controls data input and output. The main programming task is implementing CMemStream::Read(), which reads data from the specific data source into the sample of the downstream filter.
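At its core, CMemStream::Read() performs a blocking read from whatever buffer the socket thread fills. The class below is a hypothetical stand-in written in standard C++ rather than against the SDK's CAsyncStream base class, so its method names and signatures are illustrative only. It keeps all received bytes so that a read at any earlier offset (for example the headers read during connection) can still be served, which a real implementation would have to bound.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <mutex>
#include <vector>

// Hypothetical stand-in for the data source behind CMemStream::Read();
// DirectShow types are replaced by standard C++ for illustration.
class NetworkStream {
public:
    // Socket thread: store newly received bytes and wake any waiting reader.
    void Append(const std::uint8_t* bytes, std::size_t n) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            data_.insert(data_.end(), bytes, bytes + n);
        }
        cv_.notify_all();
    }

    // Socket thread: the server has finished sending.
    void EndOfStream() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            eos_ = true;
        }
        cv_.notify_all();
    }

    // Pull thread: wait until bytes [pos, pos + len) have arrived, the stream
    // has ended, or `timeout` expires; then copy whatever is available and
    // return the number of bytes copied (0 means nothing at this offset).
    std::size_t Read(std::size_t pos, std::uint8_t* out, std::size_t len,
                     std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait_for(lock, timeout,
                     [&] { return eos_ || data_.size() >= pos + len; });
        if (pos >= data_.size()) return 0;
        const std::size_t n = std::min(len, data_.size() - pos);
        std::memcpy(out, data_.data() + pos, n);
        return n;
    }

private:
    // Everything received so far; unbounded in this sketch.
    std::vector<std::uint8_t> data_;
    bool eos_ = false;
    std::mutex mutex_;
    std::condition_variable cv_;
};
```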

3.3 Design of the H.264 Decoding Filter

This filter sits between the splitter and the renderer filter. It has one input pin and one output pin, and the media types on the two pins differ, so CTransformFilter is chosen as the base class and the subclass CX264Decode is derived from it.

FFmpeg is a complete open-source solution for recording, converting, and encoding and decoding audio and video. Its libavformat and libavcodec libraries are a convenient way to access most video file formats. The H.264 decoding operations in FFmpeg are wrapped in a C++ class, which the H.264 decoding filter calls to perform decoding.

The subclass CX264Decode must implement the following pure virtual functions: CheckInputType(), which checks the media type on the input pin; CheckTransform(), which checks whether the transformation from the input pin's type to the output pin's type is supported; DecideBufferSize(), which determines the size of the sample buffers; GetMediaType(), which provides the preferred media types for the output pin; and Transform(), which performs the decoding transformation.
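The shape of these required overrides can be illustrated without the DirectShow headers. The abstract class below is a plain-C++ stand-in for the CTransformFilter contract, not its real declaration: the real methods take CMediaType and IMediaSample arguments and return HRESULT values. Here media types are reduced to strings, and the output formats YV12 and RGB24 are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Plain-C++ stand-in for the CTransformFilter contract (NOT its real
// declaration): media types and samples are strings, results are bools.
class TransformFilterModel {
public:
    virtual ~TransformFilterModel() = default;
    virtual bool CheckInputType(const std::string& in) const = 0;
    virtual bool CheckTransform(const std::string& in,
                                const std::string& out) const = 0;
    virtual std::size_t DecideBufferSize(const std::string& out, int width,
                                         int height) const = 0;
    // Returns the preferred output type at `position`, or "" when exhausted.
    virtual std::string GetMediaType(int position) const = 0;
    virtual bool Transform(const std::string& in, std::string& out) = 0;
};

// Hypothetical skeleton mirroring CX264Decode. The output formats
// (YV12, RGB24) are assumptions, not taken from the article.
class X264DecodeModel : public TransformFilterModel {
public:
    bool CheckInputType(const std::string& in) const override {
        return in == "H264";
    }
    bool CheckTransform(const std::string& in,
                        const std::string& out) const override {
        return CheckInputType(in) && (out == "YV12" || out == "RGB24");
    }
    // Size of one uncompressed frame: YV12 uses 12 bits per pixel, RGB24 24.
    std::size_t DecideBufferSize(const std::string& out, int width,
                                 int height) const override {
        const std::size_t pixels = static_cast<std::size_t>(width) * height;
        if (out == "YV12") return pixels * 3 / 2;
        if (out == "RGB24") return pixels * 3;
        return 0;  // unsupported output type
    }
    std::string GetMediaType(int position) const override {
        if (position == 0) return "YV12";  // preferred
        if (position == 1) return "RGB24";
        return "";
    }
    // Placeholder: the real Transform() would pass the compressed sample to
    // the logic control class (the FFmpeg wrapper) and write the decoded
    // picture into the output sample. Here the input is just copied.
    bool Transform(const std::string& in, std::string& out) override {
        if (in.empty()) return false;
        out = in;
        ++framesDecoded_;
        return true;
    }
    int FramesDecoded() const { return framesDecoded_; }

private:
    int framesDecoded_ = 0;
};
```

For a 640x480 picture this model requests 460800 bytes per YV12 output sample and 921600 bytes per RGB24 sample.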

CX264Decode may optionally override the following virtual functions: StartStreaming() and StopStreaming() to initialize and deinitialize streaming; AlterQuality() to respond to quality-control messages; SetMediaType() to be notified when a media type is actually set; CheckConnect(), BreakConnect() and CompleteConnect() to obtain additional interfaces during connection; Receive() to customize the transformation process; and InitializeOutputSample() to customize how output samples are initialized.

The main process of this Filter design is as follows:

(1) Filter registration information

Define the class factory template and fill in the filter's registration information; also implement the registration and unregistration functions that the DLL must export: DllRegisterServer and DllUnregisterServer.

(2) Implementation of framework functions

Implement CreateInstance to create an instance of the filter object; CheckInputType to check the media type on the input pin; Transform to perform H.264 decoding; NonDelegatingQueryInterface to expose the custom interface supported by the filter, so that the application can set the decoder's parameters; CompleteConnect to obtain the media type description once the input pin has connected and pass the input data format to the application logic control object; and StartStreaming and StopStreaming, which call the corresponding functions of the logic control object to initialize and deinitialize stream processing.

(3) Implementation of logic control class

The logic control class is the core of this filter design. The operations behind the framework functions and the custom interface functions are all delegated to it. It sets the H.264 decoding parameters and performs the decoding, mainly by calling the C++ class that wraps H.264 decoding.

When decoding H.264 with the FFmpeg library, two key functions must be customized: open_net_file() and read_buffer_frame(). open_net_file() connects to the streaming server, receives the stream information of the specified streaming file, and returns an AVFormatContext structure. read_buffer_frame() reads one frame of data from the buffer and passes it to the library function avcodec_decode_video() for decoding. (avcodec_decode_video() belongs to older FFmpeg releases; current versions use avcodec_send_packet() and avcodec_receive_frame() instead.)
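The article does not say how read_buffer_frame() locates frame boundaries. If the buffer holds an H.264 Annex B byte stream, NAL units are delimited by the start codes 00 00 01 or 00 00 00 01, and a sketch like the following could split them. `SplitNalUnits` is a hypothetical helper; note that one coded frame (access unit) can span several NAL units, and this sketch does not group them.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Returns the index of the next 3-byte start code 00 00 01 at or after
// `from`, or buf.size() if there is none.
static std::size_t FindStartCode(const Bytes& buf, std::size_t from) {
    for (std::size_t i = from; i + 2 < buf.size(); ++i) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) return i;
    }
    return buf.size();
}

// Splits an Annex B byte stream into NAL unit payloads (start codes removed).
// A NAL unit never ends in a 0x00 byte, so trailing zeros are trimmed: this
// removes the extra leading zero of a 4-byte start code 00 00 00 01.
std::vector<Bytes> SplitNalUnits(const Bytes& buf) {
    std::vector<Bytes> units;
    std::size_t start = FindStartCode(buf, 0);
    while (start < buf.size()) {
        const std::size_t begin = start + 3;  // first byte after 00 00 01
        const std::size_t next = FindStartCode(buf, begin);
        std::size_t end = next;
        while (end > begin && buf[end - 1] == 0) --end;
        if (end > begin) units.emplace_back(buf.begin() + begin, buf.begin() + end);
        start = next;
    }
    return units;
}
```

In the test data, the first byte of each unit (0x67, 0x68, 0x65) is the NAL header of an SPS, a PPS, and an IDR slice respectively.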

(4) Implementation of custom interface

Derive the filter class from the custom interface class and declare all of the interface's methods; then implement these methods in the filter class's implementation file, mostly as calls to the corresponding functions of the application logic control class; finally, expose the custom interface in NonDelegatingQueryInterface.
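The delegation pattern described in (3) and (4) can be sketched in plain C++. The interface `IDecoderSettings` and its two parameters are hypothetical, since the article does not list the interface's methods; in the real filter it would be a COM interface with its own IID, returned from NonDelegatingQueryInterface.

```cpp
#include <cassert>

// Hypothetical custom interface; in the real filter this would derive from
// IUnknown and be handed out by NonDelegatingQueryInterface.
struct IDecoderSettings {
    virtual ~IDecoderSettings() = default;
    virtual void SetThreadCount(int n) = 0;
    virtual int ThreadCount() const = 0;
    virtual void SetSkipLoopFilter(bool skip) = 0;
    virtual bool SkipLoopFilter() const = 0;
};

// Logic control class: owns the decoding parameters (and, in the real
// filter, the C++ wrapper around FFmpeg's H.264 decoder).
class DecoderLogic {
public:
    void SetThreadCount(int n) { threads_ = n > 0 ? n : 1; }  // clamp to >= 1
    int ThreadCount() const { return threads_; }
    void SetSkipLoopFilter(bool skip) { skipLoopFilter_ = skip; }
    bool SkipLoopFilter() const { return skipLoopFilter_; }

private:
    int threads_ = 1;
    bool skipLoopFilter_ = false;
};

// The filter derives from the interface and forwards every call to the
// logic control object.
class DecoderFilter : public IDecoderSettings {
public:
    void SetThreadCount(int n) override { logic_.SetThreadCount(n); }
    int ThreadCount() const override { return logic_.ThreadCount(); }
    void SetSkipLoopFilter(bool skip) override { logic_.SetSkipLoopFilter(skip); }
    bool SkipLoopFilter() const override { return logic_.SkipLoopFilter(); }

private:
    DecoderLogic logic_;
};
```

Keeping the parameters and their validation in `DecoderLogic` means the filter class stays a thin adapter, which matches the article's point that the logic control class is the core of the design.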

3.4 Player Design

The player uses the DirectShow application framework and a three-layer design that separates interface, control logic, and data. The interface class derives directly from the standard dialog class CDialog and contains two custom classes: a data encapsulation class and a logic control class. The interface class handles the user's operations, the data encapsulation class manages the various parameters centrally, and the logic control class implements the application's business logic. With the DirectShow framework, the developer does not need to worry about how data is input and output, because the filter framework takes care of that, and can instead focus on the algorithms and efficiency of the data processing. Separating interface, control logic, and data makes the program structure clear and easy to understand, and also gives it good portability and scalability.

The player uses the Filter Graph Manager to build a FilterGraph containing the network source filter and the H.264 decoding filter, and plays network streaming media through it. The interface class handles the video display and user interaction; the control logic handles playback, pause, stop, decoding parameter settings, and so on; and the data class manages the data.
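The three-layer split can be sketched as follows. `PlayerSettings`, `PlayerLogic`, `GraphControl`, and `RecordingGraph` are hypothetical names. In the real player the `GraphControl` calls would correspond to IMediaControl's Run, Pause and Stop methods on the graph built by the Filter Graph Manager, and the CDialog-derived interface class would simply forward button clicks to `PlayerLogic`.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Data layer: parameters edited by the dialog and read by the logic layer.
struct PlayerSettings {
    std::string serverAddress;  // hypothetical fields for illustration
    int serverPort = 0;
};

enum class PlayState { Stopped, Paused, Running };

// Stand-in for the filter graph's control interface.
class GraphControl {
public:
    virtual ~GraphControl() = default;
    virtual void Run() = 0;
    virtual void Pause() = 0;
    virtual void Stop() = 0;
};

// Logic layer: owns the playback state; the dialog only calls these methods.
class PlayerLogic {
public:
    PlayerLogic(const PlayerSettings& settings, GraphControl& graph)
        : settings_(settings), graph_(graph) {}

    bool Play() {
        if (settings_.serverAddress.empty()) return false;  // no server yet
        graph_.Run();
        state_ = PlayState::Running;
        return true;
    }
    void Pause() {
        if (state_ != PlayState::Running) return;
        graph_.Pause();
        state_ = PlayState::Paused;
    }
    void Stop() {
        if (state_ == PlayState::Stopped) return;
        graph_.Stop();
        state_ = PlayState::Stopped;
    }
    PlayState State() const { return state_; }

private:
    const PlayerSettings& settings_;
    GraphControl& graph_;
    PlayState state_ = PlayState::Stopped;
};

// Test double that records the calls the logic layer makes on the graph.
class RecordingGraph : public GraphControl {
public:
    std::vector<std::string> calls;
    void Run() override { calls.push_back("Run"); }
    void Pause() override { calls.push_back("Pause"); }
    void Stop() override { calls.push_back("Stop"); }
};
```

Because `PlayerLogic` depends only on the abstract `GraphControl`, it can be exercised with `RecordingGraph` without building a real filter graph, which is one practical payoff of the layered design.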

4 Conclusion

This streaming media player adopts the DirectShow application framework and the H.264 video codec standard and plays network streaming video well. It has a clear structure and good scalability and portability. Because Windows CE, Microsoft's embedded real-time operating system, supports DirectShow applications, the system can also be ported to Windows CE embedded platforms for streaming video playback on embedded devices. In addition, because H.264 offers a high compression ratio at a low bit rate, the player is well suited to video-on-demand systems: the lower bit rate reduces network load and bandwidth requirements, and thereby lessens the impact of packet loss, jitter, and delay on streaming media transmission.
