Cameras are now everywhere: in factories, vehicles, public buildings and on streets, and their number keeps growing. Most cameras rely on image sensors to convert the light from a scene into electronic images, which drives up demand for image sensors. However, image sensors come in many types with different functional characteristics, and designers need to understand those differences to choose the right camera for a given application.

The number of cameras installed in passenger cars has surged, and some luxury models carry more than a dozen. This puts automakers in a bind: they need to add more sensors to improve safety, but they must also weigh the cost and installation space of each camera. As a result, automakers have begun looking for ways to use a single camera to capture images optimized for both human vision and machine vision. This approach is difficult to implement, because the two need different image qualities and trade-offs are unavoidable.

Human vision

The human visual system perceives brightness differences between pixels differently from machine vision algorithms. The eye's response to brightness is non-linear: if the number of photons reaching the eye doubles, the perceived brightness does not double. Images intended for human viewing therefore need to be adjusted (tone mapped) to match the eye's response across the camera's dynamic range, so that details in both bright and dark areas remain visible. We are also very sensitive to color in general and to flicker from LED light sources (an increasingly common problem), so a camera that distorts color can spoil the viewing experience even if the image is otherwise sharp and of high quality. For passive driver-assistance systems such as rearview cameras, human viewing also has an advantage over machine vision: if the image is defective, the driver will notice it and stop relying on the camera. This will not cause a major safety incident, but losing the camera's usefulness is still an inconvenience, and the driver has to fall back on their own, more active judgment.
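To make the non-linearity concrete, here is a minimal sketch that maps linear pixel values to 8-bit display values with a simple power-law (gamma) curve. The helper name `tone_map`, the 12-bit linear input range and the exponent of 1/2.2 are all assumptions chosen for illustration; real cameras usually apply more elaborate tone-mapping curves tuned to the sensor's dynamic range.

```python
def tone_map(linear_value: int, max_linear: int = 4095, gamma: float = 2.2) -> int:
    """Hypothetical helper: map a linear sensor value to an 8-bit display value.

    Assumes a 12-bit linear input (0..4095) and a gamma of 2.2, both chosen
    only for illustration.
    """
    if not 0 <= linear_value <= max_linear:
        raise ValueError("linear_value out of range")
    normalized = linear_value / max_linear      # 0.0 .. 1.0, proportional to photon count
    encoded = normalized ** (1.0 / gamma)       # expand shadows, compress highlights
    return round(encoded * 255)                 # quantize to 8 bits for display


if __name__ == "__main__":
    # Each step doubles the linear value (the photon count), but not the display value.
    for value in (256, 512, 1024, 2048):
        print(value, "->", tone_map(value))
```

With this curve, each doubling of the linear value raises the display value by a factor of about 2^(1/2.2) ≈ 1.37 rather than 2, which spends more of the 8-bit output range on darker tones.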

Machine vision

Unlike human vision, machine vision systems work directly with the digital value of each pixel, which responds linearly to the number of photons. So, unlike an image intended for human viewing, an image for machine vision should be output with values that correspond to the measured pixel values. In addition, a machine vision system cannot notice a bad image on its own: it must be programmed to detect image defects or rely on dedicated error-detection hardware. A system without this capability may malfunction without notifying the driver that its function is impaired or unavailable. For active safety systems such as automatic emergency braking, a fault can have serious consequences: a false positive may make the system brake when there is no risk of collision, while a false negative (a missed detection) may make the system fail completely when danger is present. When the driver relies on such an assistance system, a message should be displayed whenever it is not fully functional, yet without fault detection no such warning may be given. Some systems do alert the driver that their function is impaired or "unavailable", usually relying on special hardware features in the sensor to detect errors or faults. Such functions must comply with relevant industry standards, such as the Automotive Safety Integrity Level (ASIL) requirements defined in ISO 26262. Sensors that support ASIL can detect and report faults, which improves safety. These two differences, the linear output and the need for fault detection, are why sensors used for machine vision must be configured differently from sensors used for human vision.
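Because a machine vision system cannot "notice" a bad image the way a driver can, some of that checking has to be explicit. The sketch below is a hypothetical, software-only plausibility check that flags a frame as unavailable if it is identical to the previous one (a possible frozen image) or if nearly all of its pixels are clipped at black or full scale. The names `FrameStatus` and `check_frame`, the 12-bit range and the 95% threshold are illustrative assumptions. This is not an ASIL safety mechanism, which in practice depends on sensor hardware diagnostics and a development process that follows ISO 26262.

```python
from enum import Enum
from typing import Optional, Sequence


class FrameStatus(Enum):
    OK = "ok"
    UNAVAILABLE = "unavailable"   # what the driver would be told


def check_frame(
    frame: Sequence[int],
    previous: Optional[Sequence[int]],
    max_value: int = 4095,
    bad_fraction: float = 0.95,
) -> FrameStatus:
    """Hypothetical check on a flattened frame of linear pixel values.

    Thresholds are illustrative assumptions, not values from any standard.
    """
    if not frame:
        return FrameStatus.UNAVAILABLE              # no data at all
    if previous is not None and list(frame) == list(previous):
        return FrameStatus.UNAVAILABLE              # identical to last frame: possibly frozen
    clipped = sum(1 for v in frame if v <= 0 or v >= max_value)
    if clipped / len(frame) >= bad_fraction:
        return FrameStatus.UNAVAILABLE              # almost entirely black or saturated
    return FrameStatus.OK


if __name__ == "__main__":
    prev = [100, 200, 300, 400]
    cur = [101, 198, 305, 399]
    print(check_frame(cur, prev).value)   # normal frame
    print(check_frame(cur, cur).value)    # repeated frame
```

Exact equality is used as the frozen-frame test because sensor noise makes two genuinely new frames of a real scene very unlikely to match pixel for pixel.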

120-degree field-of-view sensor: RYYCy image, color processed

Sensor solutions for viewing and sensing with a single camera

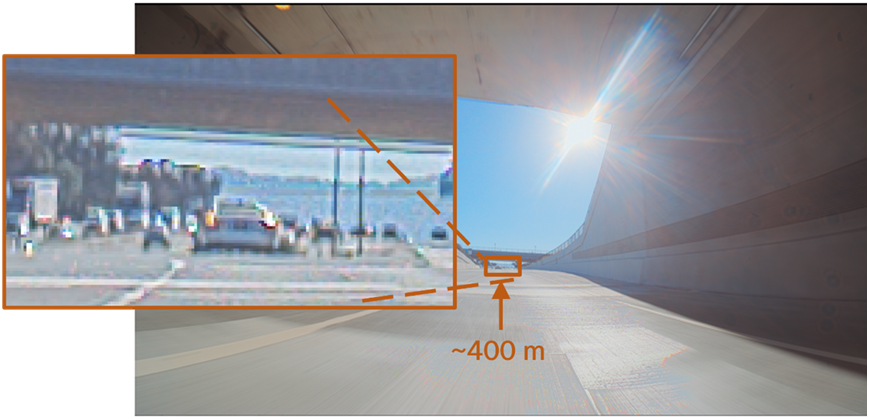

The good news is that some sensors already serve both human viewing and machine vision well: they can output two simultaneous data streams, each optimized for one of them, which helps engineers design a single camera system for both human and machine vision functions. This allows automakers to install just one camera at a given location in the vehicle, minimizing space and system cost while still obtaining images optimized for both applications.
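As a rough model of what two simultaneous data streams from one exposure could look like, the sketch below derives both outputs from a single linear readout: the sensing stream keeps the linear values untouched for machine vision, while the viewing stream is compressed to 8 bits with a power-law curve for a display. The `DualStreamFrame` type, the `split_streams` function, the 12-bit input and the gamma of 2.2 are hypothetical; sensors with dual outputs do this processing in hardware with their own, typically more sophisticated, pipelines.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DualStreamFrame:
    """Hypothetical container for the two outputs produced from one exposure."""
    sensing: List[int]   # linear values at full bit depth, for machine vision
    viewing: List[int]   # tone-mapped 8-bit values, for a human-viewed display


def split_streams(raw: List[int], max_linear: int = 4095, gamma: float = 2.2) -> DualStreamFrame:
    """Produce a linear sensing stream and a tone-mapped viewing stream from one raw frame."""
    sensing = list(raw)  # copy without modification: values stay proportional to photon count
    viewing = [
        round((min(max(v, 0), max_linear) / max_linear) ** (1.0 / gamma) * 255)
        for v in raw
    ]
    return DualStreamFrame(sensing=sensing, viewing=viewing)


if __name__ == "__main__":
    frame = split_streams([0, 1024, 2048, 4095])
    print("sensing:", frame.sensing)
    print("viewing:", frame.viewing)
```

The point of the split is that neither consumer has to compromise: the machine vision algorithms receive values that still correspond to the measured pixel values, and the driver sees an image adapted to the eye's non-linear response.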

Beijing Public Network Security Record No. 11010802033920