Cheyun Note: Tesla's autonomous driving accident in May and the recent Defcon demonstration of how to interfere with sensors both show how much autonomous driving depends on its sensors: environmental perception is the foundation of autonomous driving. If the surrounding environment cannot be perceived correctly, the cognition, decision-making and control that follow are all castles in the air.

From the perspective of sensor technology itself, making self-driving cars safe on the road within the next decade requires improvements in hardware performance, in the software algorithms behind it, and in data fusion between different sensors.

Various sensors on vehicles


The importance of sensors in autonomous driving is self-evident.

Tesla's Autopilot system fuses data from cameras, millimeter-wave radars and ultrasonic radars to keep the vehicle in its highway lane, change lanes, and adjust speed according to traffic conditions.

Google's fully autonomous driving test car uses LiDAR (laser radar), an expensive and complex long-range sensing system.

Toyota has revealed that its highway self-driving car has 12 sensors: 1 front camera hidden in the rearview mirror, 5 radars to measure the speed of surrounding vehicles, and 6 lidars to detect the location of surrounding objects.

Although some companies have taken a different approach and hope to use V2X technology to complete environmental perception, V2X is heavily dependent on infrastructure, while sensors are not subject to this limitation.

LiDAR

LiDAR systems use a rotating laser beam. BMW, Google, Nissan and Apple's self-driving test cars use this technology. But for it to be used in mass-produced cars, the price must drop significantly. The industry generally believes that this goal can be achieved in a few years.

How LiDAR works (Image courtesy of Velodyne)


The working principle of LiDAR is to transmit and receive laser beams. Inside, each set of components contains a transmitting unit and a receiving unit. The Velodyne in the picture above uses a rotating mirror design.

This transmitter/receiver assembly is combined with a rotating mirror to scan at least one plane. The mirror not only reflects the light emitted by the diode, but also reflects the reflected light back to the receiver. By rotating the mirror, a viewing angle of 90 to 180 degrees can be achieved, and the complexity of system design and manufacturing is greatly reduced because the mirror is the only moving mechanism.

Pulsed light has long been used to measure distance. The measurement is based on the time light takes to return: a laser diode emits a pulse, and part of that light is reflected back after hitting the target. A photon detector mounted near the diode detects the returning signal. The time difference between emission and detection gives the distance to the target. Once running, a pulsed ranging system can collect a large number of points, which together form a point cloud.
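The time-of-flight calculation described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the function name `tof_distance_m` and the example timestamps are assumptions made for the sketch.

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(emit_time_s: float, detect_time_s: float) -> float:
    """Return the target distance from pulse emission and detection times."""
    round_trip_s = detect_time_s - emit_time_s
    if round_trip_s < 0:
        raise ValueError("detection cannot precede emission")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical echo arriving 1 microsecond after emission.
    print(f"{tof_distance_m(0.0, 1e-6):.1f} m")
```

An echo delayed by one microsecond corresponds to a target roughly 150 m away, which shows why the detector needs nanosecond-scale timing to resolve distances at the metre level.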

If there is a target in the point cloud, the target will appear as a shadow in the point cloud. The distance and size of the target can be measured through this shadow. The point cloud can be used to generate a 3D image of the surrounding environment. The higher the point cloud density, the clearer the image.
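As a rough sketch of how individual range measurements become a 3D point cloud, each return can be converted from its beam angles and measured distance into Cartesian coordinates. The axis convention here (x forward, y left, z up, azimuth measured counterclockwise from x) and the function names are assumptions chosen for illustration; real sensors document their own conventions.

```python
import math


def polar_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one lidar return (range plus beam angles) to an (x, y, z) point."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    # Project the range onto the horizontal plane first.
    horizontal_m = range_m * math.cos(el)
    x = horizontal_m * math.cos(az)
    y = horizontal_m * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


def build_point_cloud(returns):
    """Convert an iterable of (range_m, azimuth_deg, elevation_deg) tuples."""
    return [polar_to_xyz(r, az, el) for r, az, el in returns]


if __name__ == "__main__":
    # Three hypothetical returns: straight ahead, to the left, and angled down.
    cloud = build_point_cloud([(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (5.0, 0.0, -30.0)])
    for point in cloud:
        print(tuple(round(c, 3) for c in point))
```

The more returns collected per sweep, the denser the resulting list of points and the finer the detail in the reconstructed scene.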

There are a few different ways to use LiDAR to generate a 3D image of your surroundings.

One way to achieve this is to move the transmit/receive assembly up and down while rotating the mirror, which is sometimes called "blinking and nodding". This method can generate a point cloud in the height direction, but it reduces the azimuth data points, so the point cloud density will be reduced and the resolution will not be high enough.

Another approach is called flash LiDAR. This approach uses a 2D focal plane array (FPA) to capture pixel distance information while emitting lasers to illuminate a large area. This type of sensor is complex and difficult to manufacture, so it has not yet been widely used commercially. However, it is a solid-state sensor with no moving parts, so it may replace existing mechanical sensors in the future.

Although there are different LiDAR structures that can generate many forms of 3D point cloud systems, no system can meet the application requirements of autonomous driving navigation. For example, there are many systems that can generate exquisite images, but it takes several minutes to generate an image. Such systems are not suitable for mobile sensing applications. There are also some optical scanning systems with high refresh rates, but the viewing angle and detection distance are too small. There are also some single beam systems that can provide useful information, but if the target is too small or beyond the viewing angle, it cannot be detected.

To get the most out of a LiDAR sensor, it must see everything around the vehicle, that is, provide a 360-degree view. The data output to the user must be real-time, so the delay between data collection and image generation must be minimized. A driver's reaction time is generally a few tenths of a second, so for autonomous navigation the navigation computer must refresh at least once every tenth of a second. The field of view cannot be horizontal only; a vertical field of view is also required, otherwise the car may drive into a pothole. The vertical field of view should reach downward as close to the vehicle as possible so that the autonomous driving system can adapt to bumps and steep slopes in the road.
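Two of these requirements lend themselves to quick back-of-the-envelope checks: how far the vehicle travels between sensor updates, and how close to the vehicle the lowest beam first reaches the road. The sketch below assumes a flat road and a sensor at a fixed height; the function names and the example values are hypothetical.

```python
import math


def distance_per_frame_m(speed_m_per_s, refresh_hz):
    """Distance the vehicle covers between two consecutive sensor updates."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return speed_m_per_s / refresh_hz


def nearest_ground_range_m(sensor_height_m, lowest_beam_deg_below_horizontal):
    """Horizontal distance at which the lowest beam hits a flat road."""
    if not 0 < lowest_beam_deg_below_horizontal < 90:
        raise ValueError("beam angle must be between 0 and 90 degrees")
    return sensor_height_m / math.tan(math.radians(lowest_beam_deg_below_horizontal))


if __name__ == "__main__":
    # Hypothetical vehicle at 30 m/s with a 10 Hz refresh rate.
    print(distance_per_frame_m(30.0, 10.0))
    # Hypothetical roof-mounted sensor 2 m high, lowest beam 20 degrees down.
    print(round(nearest_ground_range_m(2.0, 20.0), 2))
```

At 30 m/s a 10 Hz sensor leaves 3 m of travel between updates, and a steeper lowest beam shrinks the blind zone directly in front of the vehicle.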

At present, there are several companies in the industry that produce and manufacture lidar, and their products have their own characteristics.

Velodyne has high-definition lidar (HDL) technology, and its HDL sensor is said to provide a 360-degree horizontal field of view, a 26.5-degree vertical field of view, and a 15 Hz refresh rate, and to generate a point cloud of one million points per second. This year, Velodyne launched a miniaturized 32-line sensor with a detection distance of 200 meters and a vertical field of view of 28°.
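The quoted throughput and refresh rate together determine how many points make up each full sweep. The arithmetic is sketched below using the figures from the paragraph above; the function name is an assumption for illustration.

```python
def points_per_frame(points_per_second, refresh_hz):
    """Average number of points captured in one full sweep of the sensor."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return points_per_second / refresh_hz


if __name__ == "__main__":
    # Figures quoted above: one million points per second at 15 Hz.
    print(round(points_per_frame(1_000_000, 15)))
```

At those figures each 360-degree sweep holds roughly 67,000 points, so raising the refresh rate without raising throughput thins out every individual frame.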

Velodyne solid-state 32-line Ultra Puck LiDAR


Leddar also offers lidar products with 360° detection capability. Because it supplies ADAS solutions as well, Leddar provides sensor fusion technology that combines data from different sensors into an overall picture of the vehicle's surroundings. Its lidar products cover:

• Solid-state LiDAR – as an alternative or complement to cameras/radars, and can be integrated into ADAS and autonomous driving functions;

• LiDAR that provides high-density point clouds for high-level autonomous driving;

• LiDAR that can support light scanning or beam measurement (e.g. MEMS mirrors).

Leddar's lidar has a detection distance of up to 250 m and a horizontal field of view of 140 degrees, can generate a point cloud of 480,000 points per second, and offers horizontal and vertical resolution of up to 0.25 degrees.
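Angular resolution translates into physical spacing between neighbouring points that grows with distance. The sketch below works through that geometry with the figures quoted above; the function names are assumptions, and the calculation ignores beam divergence and other real-world effects.

```python
import math


def point_spacing_m(range_m, angular_resolution_deg):
    """Gap between two adjacent beams at a given range (chord length)."""
    half_angle_rad = math.radians(angular_resolution_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle_rad)


def samples_across_fov(fov_deg, angular_resolution_deg):
    """Number of angular steps needed to cover the field of view."""
    return round(fov_deg / angular_resolution_deg)


if __name__ == "__main__":
    # Figures quoted above: 0.25 degree resolution, 250 m range, 140 degree view.
    print(round(point_spacing_m(250.0, 0.25), 2))
    print(samples_across_fov(140.0, 0.25))
```

At the maximum quoted range, adjacent points are a little over a metre apart, so a pedestrian at that distance may intersect only one beam column or none, while the same resolution gives centimetre-level spacing a few metres from the car.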

Vision Image Sensor

A widely used approach today is to combine 2D lidar with visual sensors. Compared with lidar, visual sensors are inexpensive, which also makes them indispensable in autonomous driving solutions.

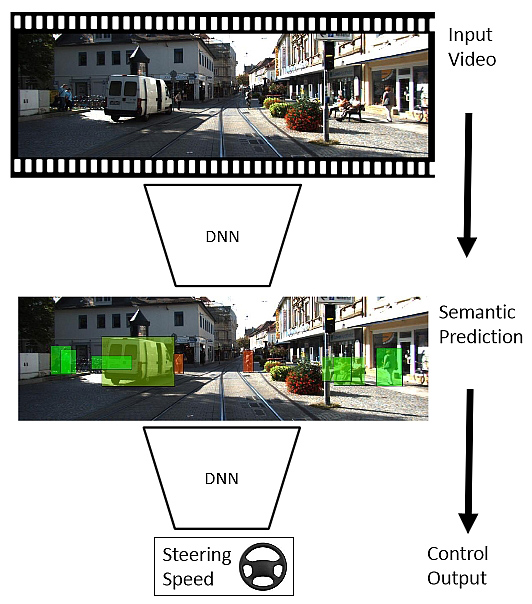

Visual sensors use image recognition to perceive the surrounding environment. For autonomous driving, this means more than knowing which objects or pedestrians are at which locations and then instructing the vehicle to slow down or brake to avoid an accident. Building on image recognition, the system can also understand the current driving scenario and learn to deal with emergencies.

Vision sensor workflow


If the difficulty of lidar lies in making its performance meet the needs of autonomous driving navigation, then the difficulty of cameras lies in elevating perception to cognition.

To use the human eye as an analogy, after seeing a pedestrian or a vehicle, a human driver will predict the pedestrian or vehicle's next move based on what they see, and control the vehicle based on the prediction. Self-driving cars also need this "prediction" process, and the camera plays the role of observation. Self-driving cars must be able to observe, understand, model, analyze, and predict the behavior of people inside the car, pedestrians outside the car, and people near the car.
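To make the observe-then-predict step concrete, the sketch below extrapolates a tracked target's position from its two most recent observations using a constant-velocity assumption. This is a deliberately simple stand-in chosen for illustration, not the method the article has in mind; real systems model behaviour far more richly. The track format and function name are hypothetical.

```python
def predict_position(track, horizon_s):
    """Extrapolate a target's (x, y) position horizon_s seconds ahead.

    track is a list of (time_s, x_m, y_m) observations, oldest first,
    and must contain at least two entries.
    """
    if len(track) < 2:
        raise ValueError("need at least two observations")
    (t0, x0, y0), (t1, x1, y1) = track[-2], track[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("observations must be in increasing time order")
    # Estimate velocity from the last two observations.
    vx = (x1 - x0) / dt
    vy = (y1 - y0) / dt
    # Assume the target keeps that velocity over the prediction horizon.
    return (x1 + vx * horizon_s, y1 + vy * horizon_s)


if __name__ == "__main__":
    # Hypothetical pedestrian observed twice, 0.5 s apart, walking along x.
    pedestrian_track = [(0.0, 0.0, 5.0), (0.5, 0.5, 5.0)]
    print(predict_position(pedestrian_track, 1.0))
```

A constant-velocity guess fails exactly where prediction matters most, for example when a pedestrian stops or turns suddenly, which is why the step from perception to cognition is the hard part.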

This process from observation to prediction also applies to other vehicles on the road. However, several questions still need in-depth research: how to grasp the overall meaning of a driving scene, how to deal with sudden scenes and targets, how to accurately analyze the short-term or long-term behavior of specific targets (pedestrians or vehicles), and how to predict the behavior of surrounding people or vehicles and make decisions accordingly.

Cheyun Summary

Research and engineering on sensors and algorithms are developing rapidly, enabling autonomous vehicles to predict the uncertain behavior of people and vehicles and respond quickly, avoiding damage to vehicles and property and, more importantly, protecting precious lives.

Of course, the sensors for autonomous driving are not limited to lidar and cameras. There are also millimeter-wave radar and ultrasonic radar, as well as sound sensors, which are not currently used for autonomous driving. The next article will continue to introduce them.
