On August 22, 2022, at the 2022 (Second) China Automotive Human-Computer Interaction Innovation Conference hosted by Gasgoo, Chen Zhi, deputy director of the Intelligent Connected Vehicle Software Technology Research Institute of Wuhan KOTEI Information Technology Co., Ltd., drew on KOTEI's smart cockpit 3D HMI prototype to share the company's exploration and practice in design principles for smart cockpit 3D HMI.

Chen Zhi said that as hardware platforms evolve, bringing 3D design to the cockpit HMI is itself a gradual process, moving step by step from car models, 3D components, 3D backgrounds, voice assistants and intelligent driving information toward a fully 3D scene. He believes that "3D UI cannot be limited to a single-screen experience; it needs to be coordinated with other interaction methods and devices to integrate virtual and physical space."

Chen Zhi, Deputy Director, Intelligent Connected Vehicle Software Technology Research Institute, Wuhan KOTEI Information Technology Co., Ltd.

The following is a summary of the speech:

KOTEI Intelligent Cockpit 3D HMI Exploration Case

With growing cockpit chip computing power, the rise of software-defined vehicles and increasingly mature 3D HMI development platforms, 3D development of automotive cockpit software is moving toward implementation. As autonomous driving capability improves, drivers' and passengers' attention is progressively freed, so different 3D HMIs can be designed for different driving scenarios.

The 3D HMI for smart cockpits will not arrive overnight; it follows a gradual development path. Many mass-produced models already present 3D car models, which interact with the IVI and instrument cluster interfaces in the form of 3D components. 3D backgrounds came next, rendering the background of the entire cockpit interface in 3D. There are also intelligent voice assistants presented as 3D characters, as well as 3D intelligent driving information. However, the industry has not yet attempted a fully 3D interactive smart cockpit.

Aiming to turn the overall smart cockpit interaction and experience into a three-dimensional scene, KOTEI launched a full-3D smart cockpit HMI prototype project. The goal was to create a fully immersive 3D scene in which vehicle functions are operated by interacting with the scene itself.

The team envisioned four themes (spring, summer, autumn and winter) covering the homepage, music, navigation and vehicle settings. We built four islands, moved the camera from one to the next, and created four immersive scenes, one for each seasonal theme.

Image source: Wuhan Guangting

The homepage uses the spring theme. Three-dimensional elements in the scene carry two-dimensional interactions such as news messages, navigation information and vehicle controls. Every scene includes basic vehicle controls and the voice assistant.

Image source: Wuhan Guangting

The summer theme focuses on entertainment. Set on a beach, it offers an immersive music playback mode. We also designed a lighthouse element that switches the HMI between day and night modes.

Image source: Wuhan Guangting

The autumn scene is an open-air cinema that carries navigation: once a destination is set, the cinema screen serves as the display surface for the navigation map.

Image source: Wuhan Guangting

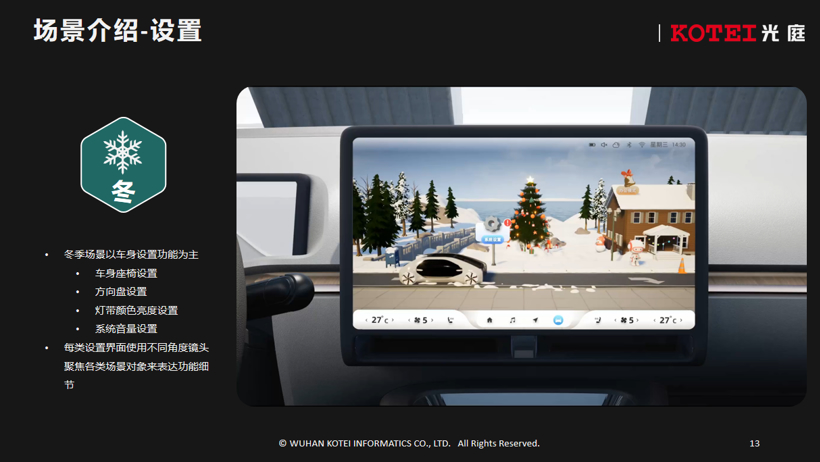

The winter scene centers on vehicle settings. Seats, steering wheel and ambient lighting can be configured through a small panel, and the vehicle body, car model and scene interact to create an immersive experience.

To better suit in-vehicle head units, KOTEI also developed, alongside the PC version, a simplified 3D HMI design for the head unit. This low-poly, flat-colored 3D solution runs at a fairly stable rate of close to 60 frames per second on the platform while preserving the overall design concept.

Image source: Wuhan Guangting

The Mercedes-Benz Smart Elf, launched in early April this year, debuted together with a set of 3D UI designs. Before that, 3D elements in mass-produced cars were mostly used for the instrument cluster map or for presenting intelligent driving information, and were rarely used in infotainment systems, so the Smart Elf took a small step forward in 3D UI interaction. Its HMI still falls short of a full-scene 3D HMI and remains a lightweight combination of 2D interaction and 3D elements.

Not long ago, the Geely Geometry M6 unveiled its cockpit design, which builds a three-dimensional visual effect around the form of a room. Although the effect is ultimately rendered in 2D, the overall design concept of a three-dimensional space still comes through.

Thoughts on the design principles of the smart cockpit 3D HMI experience

Image source: Wuhan Guangting

In producing a 3D HMI, there are three ways to combine 2D and 3D elements: superimposed combination, partitioned combination and full split-screen combination.

Image source: Wuhan Guangting

Superimposed combination can be seen in the spring scene, where many 2D elements sit on carriers within the 3D scene instead of being fully separated from it. However, this form raises derivative problems, such as keeping motion transformations synchronized: when the camera moves through the space, should the 2D elements follow the 3D elements or stay where they are? The 3D scene can also visually interfere with the 2D interactive elements.
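When 2D elements are meant to follow the 3D scene, a common technique is to project each element's 3D anchor point into screen coordinates every frame and draw the overlay there. The following is a minimal Python sketch of that idea, assuming a simple pinhole camera; the `Camera` fields, `project_anchor` and the 1920x720 screen size are hypothetical illustrations, not KOTEI's implementation.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))  # assumes a non-zero vector
    return (v[0] / n, v[1] / n, v[2] / n)


@dataclass
class Camera:
    # Hypothetical camera description; position must differ from target.
    position: Vec3
    target: Vec3
    up: Vec3 = (0.0, 1.0, 0.0)
    fov_y_deg: float = 60.0
    width: int = 1920   # assumed wide cockpit screen
    height: int = 720


def project_anchor(cam: Camera, anchor: Vec3):
    """Return the (x, y) pixel position of a 3D anchor, or None if it is behind the camera."""
    forward = normalize(sub(cam.target, cam.position))
    right = normalize(cross(forward, cam.up))   # right-handed convention
    true_up = cross(right, forward)
    rel = sub(anchor, cam.position)
    x_c, y_c, z_c = dot(rel, right), dot(rel, true_up), dot(rel, forward)
    if z_c <= 1e-6:
        return None  # anchor is behind the camera: hide the 2D element
    f = 1.0 / math.tan(math.radians(cam.fov_y_deg) / 2)
    aspect = cam.width / cam.height
    ndc_x = (f / aspect) * x_c / z_c
    ndc_y = f * y_c / z_c
    px = (ndc_x + 1.0) * 0.5 * cam.width
    py = (1.0 - ndc_y) * 0.5 * cam.height  # screen y grows downward
    return (px, py)
```

Re-running `project_anchor` after every camera move keeps a label glued to its 3D carrier; if the label should instead stay fixed on screen, the projection is simply skipped. The sketch makes that trade-off explicit rather than settling it.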

Partitioning the screen is another option: 2D elements occupy one area and 3D elements another. This approach generally suits wide screens with long display areas; otherwise, it amounts only to presenting 3D components rather than a fully 3D cockpit.

For a fully 3D experience, one can look to the center console design of the Great Wall Mocha, which has two screens: a control screen and an entertainment screen. Interactive functions can go on the control screen, leaving the other screen more room for a 3D HMI.

We believe the advantages of 3D can only be fully realized by separating 3D from 2D. Interaction on the head unit is still mainly 2D; the strength of 3D lies not in interaction but in emotional expression, scene-based storytelling and driving entertainment.

Image source: Wuhan Guangting

A complete 3D scene can convey visuals and emotion to users more effectively. In our scenes, wind and changes in the music's rhythm can alter the overall environment, an immersive performance that maximizes the 3D UI's ability to convey emotion. As another example, a cup of coffee can be placed in the 3D HMI: using the vehicle's motion data collected while driving, it shows whether the driving is smooth and scores the driver, creating a more engaging vehicle interaction experience.
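The coffee-cup idea can be grounded in basic physics: in a vehicle accelerating horizontally at a, a liquid's surface tilts by an angle whose tangent is a/g. Below is a hypothetical Python sketch that tilts the virtual coffee and scores smoothness from sampled longitudinal and lateral accelerations; the function names and the 0.15 g comfort and 0.35 g spill thresholds are assumptions for illustration, not figures from the talk.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def coffee_tilt_deg(ax: float, ay: float) -> float:
    """Tilt of the virtual coffee surface: tan(theta) = horizontal acceleration / g."""
    return math.degrees(math.atan2(math.hypot(ax, ay), G))


def smoothness_score(samples, comfort_g=0.15, spill_g=0.35):
    """Score driving smoothness from (ax, ay) samples in m/s^2.

    Hypothetical scheme: every sample above comfort_g adds a penalty,
    every sample above spill_g counts as a "spill". Returns (score 0-100, spills).
    """
    if not samples:
        return 100.0, 0
    penalty = 0.0
    spills = 0
    for ax, ay in samples:
        mag_g = math.hypot(ax, ay) / G
        if mag_g > comfort_g:
            penalty += mag_g - comfort_g
        if mag_g > spill_g:
            spills += 1
    avg_penalty = penalty / len(samples)
    # Normalise so an average excess equal to the comfort-to-spill gap scores 0.
    score = max(0.0, 100.0 * (1.0 - avg_penalty / (spill_g - comfort_g)))
    return score, spills
```

In an HMI, `coffee_tilt_deg` would drive the animation every frame, while `smoothness_score` would run over a trip's samples to produce the driver's score.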

Image source: Wuhan Guangting

Camera movement is another consideration in 3D. Flexible changes and seamless transitions are strengths of 3D interfaces, but rapid camera movement in a 3D scene can make some users dizzy. Shot scheduling therefore needs optimizing: for example, avoid large, rapid first-person camera transitions and favor small-scale movements of 3D components.
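One way to follow this advice is to cap camera speed and ease in and out of every transition. This Python sketch uses smoothstep easing, whose peak velocity is 1.5 times the average velocity, and stretches the transition duration so the peak never exceeds a chosen limit. `camera_path`, the 2.0 scene-units-per-second limit and the 0.6 s minimum duration are hypothetical values, not tuned recommendations or KOTEI's method.

```python
import math


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1]; its slope peaks at 1.5 when t = 0.5."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def plan_duration(distance: float, max_speed: float = 2.0, min_duration: float = 0.6) -> float:
    # Peak speed of a smoothstep move is 1.5 * distance / duration,
    # so duration >= 1.5 * distance / max_speed keeps it under the cap.
    return max(min_duration, 1.5 * distance / max_speed)


def camera_path(start, end, fps: int = 60, max_speed: float = 2.0):
    """Per-frame camera positions from start to end with capped, eased speed."""
    distance = math.dist(start, end)
    duration = plan_duration(distance, max_speed)
    frames = max(1, math.ceil(duration * fps))  # ceil never shortens the move
    path = []
    for i in range(frames + 1):
        s = smoothstep(i / frames)
        path.append(tuple(a + (b - a) * s for a, b in zip(start, end)))
    return path


path = camera_path((0.0, 0.0, 0.0), (10.0, 0.0, 0.0))
```

A long jump between two islands thus becomes a slow, eased glide instead of a snap cut; an alternative is to keep the camera still and animate only the 3D components, as the text suggests.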

Overall, 3D interfaces currently face several challenges: How can dizziness be avoided? How can an experience be designed that goes beyond the functional interaction level? How can experiences be designed for device collaboration and human-machine co-driving scenarios? Pure control-panel interaction is not the strength of 3D interfaces; more thought must go into achieving immersion and emotional delivery. In the future, 3D UI cannot be limited to a single-screen experience; it must be coordinated with other interaction methods and devices to integrate virtual and physical space.

Introduction to the technical capabilities of the KOTEI cockpit 3D engine

KOTEI's business spans multiple areas, including automotive electronics software, and the research, design and testing of smart cockpits is a very important part of it. KOTEI currently has two related laboratories: the User Experience Laboratory, which focuses on human-computer interaction design for smart cars, and the Digital Experience Laboratory, which is oriented more toward innovative research in the smart car field.

The Digital Experience Laboratory's development covers six areas: high-precision 3D map rendering, smart cockpit 3D HMI, simulated software and hardware environments, digital city and driving simulation, VR and other in-vehicle entertainment experiences, and VR simulated cockpit interaction.

KOTEI can automatically generate road networks from maps. Construction is fast, and the resulting networks natively carry lane trajectory data, enabling large-scale traffic simulation. KOTEI has also begun research on planar interaction: in a VR environment, virtual interaction with the entire cockpit can be achieved through the head unit's virtual screen.

KOTEI also has a rapid prototyping solution for VR virtual cockpits, and its VR entertainment and experience applications for the automotive field are relatively mature. For autonomous driving, VR high-precision positioning lets the car's physical movement drive movement in VR, so a virtual road environment can be built around the car and the virtual and real scenes kept in sync. Of course, current VR devices are not built for the in-car environment; using them in cars will require functions customized for that environment, but these experiences have proven valid.
