As bus passenger transport has grown, the safety and management of vehicle operation have become increasingly important. Disputes between passengers and operators over fare evasion and service attitude are frequent, responsibility in traffic accidents is often hard to establish, and strong evidence is usually lacking in cases of on-board theft, vehicle theft, and drunk driving. To address these problems, this paper proposes a 3G wireless vehicle video monitoring system based on ARM Linux, which can help reduce such incidents and protect passengers and vehicles. The system builds its hardware environment on the S3C2440 embedded development board running Linux, and collects real-time in-vehicle video and location information through a USB camera and a GPS module. The video is compressed with H.264, which achieves a high compression ratio while keeping images smooth and of high quality, and so greatly reduces the amount of data to be transmitted. The 3G wireless network serves as the transmission medium: unlike a traditional wired network, it allows monitoring while the vehicle is moving, it offers a more flexible networking mode for monitoring points where cables cannot be laid, and it provides more bandwidth than GPRS or CDMA, as mobile video monitoring requires. Finally, the monitoring end can view the video inside the vehicle, together with the vehicle's location and speed, in real time through a Web browser.

1 Overall design of the system

The 3G wireless video monitoring system consists of three parts: the vehicle-mounted mobile monitoring terminal, network transmission, and the remote client. The overall structure is shown in Figure 1, and the whole system adopts a B/S (browser/server) structure. The vehicle-mounted terminal runs on the S3C2440 platform and comprises GPS data acquisition, video acquisition, video encoding, an embedded Web server, and a streaming media server. Network transmission is built on TCP/IP and uses RTP, RTCP, HTTP, TCP, and UDP. The remote client receives vehicle location and video data, decodes and plays the video stream, and feeds back network status.

The system works as follows. The USB camera captures the situation inside the vehicle and the driver's state in real time, and the GPS positioning module provides GPS data (latitude and longitude, altitude, time, speed). Both are passed to the ARM processor, which performs H.264 encoding and hands the encoded NAL units to the streaming media server. The streaming media server encapsulates the compressed data in RTP packets and sends them to the built-in Boa server through the internal bus; it also adjusts the H.264 encoder and the video acquisition rate according to the control information fed back through RTCP. The small embedded Boa server uses CGI to handle the interaction between the monitoring end and the client. The remote client connects to the Boa server over the wireless network and uses ActiveX technology to receive video data, decapsulate RTP packets, send RTCP feedback, decode the H.264 video, and display it in a Web browser.

2 Hardware Composition and Selection Design of the System

2.1 Vehicle-mounted Mobile Terminal

The composition of the vehicle-mounted mobile terminal is shown in Figure 2. It consists of four parts: the ARM processor, the GPS module, the 3G wireless data transmission module, and the image acquisition module. The main control processor is the S3C2440A, a 16/32-bit RISC microprocessor from Samsung. The S3C2440A uses the ARM920T core, is built with 0.13 μm CMOS standard cells and memory units, and runs at up to 400 MHz. Its low-power, simple, elegant, fully static design targets cost- and power-sensitive applications, which makes it well suited to this system. The S3C2440A provides rich on-chip resources and supports Linux. The functions integrated on the chip include separate 16 KB instruction and 16 KB data caches, an LCD controller, an AC97 audio interface, a camera interface, a DMA controller, PWM timers, an MMC interface, and more. In this system it schedules the entire system, configures the function registers of all chips that must work when the system powers on, encodes the video stream, and sends the encoded video stream to the monitoring end over the 3G wireless network.

The GPS module is the LEA-5H from u-blox. It has a 50-channel u-blox engine with more than one million effective correlators, a time to first fix of less than 1 s for hot and aided starts, a SuperSense acquisition and tracking sensitivity of -160 dBm, and an operating speed limit of 515 m/s. The system receives positioning information in real time through this module, so that the central control module can process positioning data in real time.

The image acquisition module uses a Vimicro camera with a USB interface. The camera is inexpensive and gives good image quality, so it is cost-effective. The mainboard also reserves several peripheral interfaces for functional expansion.

The wireless communication module is the SIM5218 from SIMCOM. The SIM5218 is a WCDMA/HSDPA/GSM/GPRS/EDGE module that supports data services with a downlink rate of 7.2 Mb/s and an uplink rate of 5.76 Mb/s, which makes it a good choice for transmitting large data volumes such as video and images. It also offers a variety of interfaces, including UART, USB 2.0, GPIO, I2C, GPS, camera sensor, and a built-in SIM card. In terms of cost, 3G uses packet switching, so the network charge is determined by the volume of data the user transmits.

2.2 Monitoring Center

The vehicle monitoring center consists of hardware such as a display screen, a monitoring server, a main control workbench, a router, and storage. Functionally, its basic modules are the data receiving module, the decoding module, the display module, and the storage module. Because the monitoring center can be built from general-purpose equipment, no special selection or design is needed.

3 System software implementation

The system runs the Linux operating system. Linux is open source, low cost, has a highly customizable kernel, and integrates the TCP/IP protocol stack; its network support is an advantage over other operating systems. The software design of the ARM Linux video monitoring terminal covers three tasks. First, build a software platform on the hardware: this requires porting U-Boot, porting the embedded Linux kernel, and developing the embedded Linux device drivers. Second, on top of that platform and using cross-compilation tools, develop the acquisition, compression, and streaming media server programs that run on the video monitoring terminal. Third, develop the receiving, decompression, and display programs that run in the monitoring center.

3.1 Construction of the Linux platform of S3C2440A

To build an embedded Linux development platform, a cross-compilation environment must be set up first. A complete cross-compilation environment includes a host and a target machine. Here the host is a PC running CentOS 5.5, and the target is the S3C2440A-based video surveillance terminal. The embedded Linux kernel source is version 2.6.28, and the cross compiler is GCC 4.3.2. Before the kernel is compiled, it must be configured and redundant functional modules trimmed. The steps are as follows:

(1) Use the command make menuconfig to configure the kernel: select the YAFFS file system, enable NFS boot support, and enable the USB device support modules, including the USB device file support module and the USB host controller driver module. Because the USB camera is a video device, the Video4Linux module must also be enabled;

(2) Use the make dep command to generate the dependencies between kernel source files (2.6-series kernels track dependencies automatically, so this step is only needed on older 2.4-series kernels);

(3) Use the make zImage command to generate the kernel image file;

(4) Use the make modules and make modules_install commands to build and install the loadable modules. The zImage kernel image file generated in this way is then downloaded to the FLASH of the target platform.

This design uses an external USB camera whose driver is loaded dynamically, so it must be configured as a module during kernel configuration. The driver first has to implement the basic I/O interface functions open, read, write, and close, together with interrupt handling, memory mapping, and the I/O control function ioctl, and register them in struct file_operations. The USB driver is then compiled into a dynamically loadable module.

3.2 Vehicle-mounted mobile terminal software design

3.2.1 GPS module program design

The output data format of the GPS receiver GPS15L complies with the NMEA-0183 standard. NMEA-0183 is a serial communication protocol developed by the National Marine Electronics Association of the United States. All input and output consists of lines of ASCII characters, each of which is called a sentence. Each sentence starts with "$" and ends with a carriage return and line feed (<CR><LF>).

As shown in Figure 3, after the GPS module starts, the serial port is initialized first; this sets the baud rate, data bits, parity bit, and so on. The program then receives GPS data by reading from the serial port into BUF and enters the parsing and extraction stage. It checks whether BUF[5] equals 'C' to decide whether the sentence is $GPRMC; if so, it extracts the longitude, latitude, time, and other information and stores them in the structure GPS_DATA.
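The parsing stage described above can be sketched in C as follows. This is a minimal illustration rather than the authors' code: the field layout of GPS_DATA, the helper nmea_to_degrees, and the function name parse_gprmc are assumptions, since the article only names the structure. The sketch matches the full "$GPRMC," prefix instead of testing BUF[5] alone, and it does not verify the NMEA checksum.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical field layout: the article names GPS_DATA but does not
 * list its members. */
typedef struct {
    char   utc_time[16];  /* hhmmss(.sss), as received */
    char   date[8];       /* ddmmyy, as received */
    double latitude;      /* decimal degrees, south is negative */
    double longitude;     /* decimal degrees, west is negative */
    double speed_knots;   /* speed over ground */
    int    valid;         /* 1 when the status field is 'A' */
} GPS_DATA;

/* Convert the NMEA (d)ddmm.mmmm notation to decimal degrees. */
static double nmea_to_degrees(const char *field)
{
    double raw = atof(field);
    int degrees = (int)(raw / 100.0);
    return degrees + (raw - degrees * 100.0) / 60.0;
}

/* Parse one $GPRMC sentence held in buf.
 * Returns 0 on success and -1 if buf is not a usable RMC sentence. */
int parse_gprmc(const char *buf, GPS_DATA *out)
{
    char copy[128];
    char *fields[16];
    int n = 0;
    char *p;

    if (strncmp(buf, "$GPRMC,", 7) != 0 || strlen(buf) >= sizeof(copy))
        return -1;
    strcpy(copy, buf);

    /* Split on commas by hand, because strtok() skips empty fields. */
    fields[n++] = copy;
    for (p = copy; *p != '\0' && n < 16; p++) {
        if (*p == ',') {
            *p = '\0';
            fields[n++] = p + 1;
        }
    }
    if (n < 10)
        return -1;

    /* RMC field order: time, status, lat, N/S, lon, E/W, speed, course, date */
    snprintf(out->utc_time, sizeof(out->utc_time), "%s", fields[1]);
    out->valid = (fields[2][0] == 'A');
    out->latitude = nmea_to_degrees(fields[3]);
    if (fields[4][0] == 'S')
        out->latitude = -out->latitude;
    out->longitude = nmea_to_degrees(fields[5]);
    if (fields[6][0] == 'W')
        out->longitude = -out->longitude;
    out->speed_knots = atof(fields[7]);
    snprintf(out->date, sizeof(out->date), "%s", fields[9]);
    return 0;
}
```

A caller would pass each line read from the serial port to parse_gprmc and use the result only when it returns 0 and the valid flag is set.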

3.2.2 Design and implementation of video acquisition module

The video acquisition module captures video through V4L (Video4Linux) and the camera device driver under the embedded Linux operating system. V4L is the foundation of image handling on Linux and embedded Linux: it is the set of APIs in the Linux kernel that support video devices. Linux manages external devices as device files, so operating a device becomes operating on its device file. The acquisition process is shown in Figure 4.


The main process is as follows:

(1) Open the video device by calling int open(const char *pathname, int flags). A return value of -1 means that the open failed; otherwise the return value is the file descriptor of the opened device.

(2) Read device information. The ioctl(cam_fp, VIDIOC_QUERYCAP, &cap) function is used to obtain the attribute parameters of the device file and store them in the cap structure, where cam_fp refers to the opened video device file descriptor.

(3) Select the video input. Set the input of the video device with ioctl(cam_fp, VIDIOC_S_INPUT, &chan), where chan specifies the video input to use. In the V4L2 API this argument is a pointer to an integer input index; struct v4l2_input describes an input and is filled in by VIDIOC_ENUMINPUT.

(4) Set the video frame format with ioctl(cam_fp, VIDIOC_S_FMT, &fmt), where fmt is of type struct v4l2_format and specifies the width, height, pixel format, and so on of the video.

(5) Read video data. Through the read(cam_fp, g_yuv, YUV_SIZE) function, the data of one frame of the camera is stored in g_yuv, where YUV_SIZE refers to the size of each frame of data.

(6) Close the video device with close(cam_fp).

These operations follow the flowchart. When the camera is connected through the USB interface, the program uses the V4L API and calls read() on the device file to bring the video data into memory. The video data can then be saved as image files or compressed and encapsulated into data packets. In this paper, the collected data is first compressed with H.264, then encapsulated into data packets and transmitted to the monitoring PC for processing.

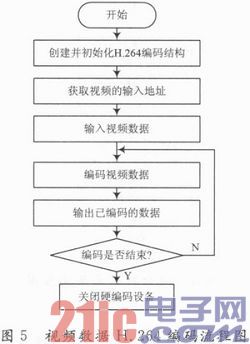

3.2.3 Design of video compression module

The video acquisition module produces a very large amount of data. To speed up transmission, reduce network traffic, and keep monitoring real-time, the data collected by the vehicle camera must be compressed before it is sent. This paper uses H.264 hardware encoding, which does not occupy CPU resources and runs fast enough to meet the real-time requirement, to compress the image sequence from the camera into a media stream. The encoding process is shown in Figure 5.

(1) Create the H.264 encoder instance by calling SsbSipH264EncodeInit(width, height, frame_rate, bitrate, gop_num), where width and height are the image width and height, frame_rate is the frame rate, bitrate is the target bit rate, and gop_num is the maximum number of frames (B or P frames) between two key frames.

(2) Initialize the H.264 encoder instance by calling SsbSipH264EncodeExe(handle).

(3) Get the video input address by calling SsbSipH264EncodeGetInBuf(handle, 0). This function returns the start address of the video input buffer, which is stored in p_inbuf.

(4) Input the video data by calling memcpy(p_inbuf, yuv_buf, frame_size). p_inbuf holds the data to be encoded, yuv_buf holds the original video data, and frame_size is the size of the data.

(5) Encode the video data: perform H.264 encoding on the content of p_inbuf by calling SsbSipH264EncodeExe(handle).

(6) Output the encoded data by calling SsbSipH264EncodeGetOutBuf(handle, size), which returns the start address of the encoded image; size indicates the size of the encoded image.

(7) Close the hardware encoder by calling SsbSipH264EncodeDeInit(handle).
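The data-volume argument that motivates compression in this section can be made concrete with a short calculation. The sketch below assumes uncompressed YUV 4:2:0 frames and a frame rate of 25 frames/s, neither of which is stated in the article; the 640×480 resolution and the 5.76 Mb/s nominal uplink rate come from the text. The function names are illustrative.

```c
#include <stdio.h>

/* Bytes in one uncompressed YUV 4:2:0 frame: one luma byte per pixel
 * plus two chroma planes at quarter resolution (1.5 bytes per pixel). */
unsigned long yuv420_frame_bytes(unsigned width, unsigned height)
{
    return (unsigned long)width * height * 3 / 2;
}

/* Raw video bit rate in bits per second. */
double raw_bitrate_bps(unsigned width, unsigned height, unsigned fps)
{
    return yuv420_frame_bytes(width, height) * 8.0 * fps;
}

/* Smallest compression ratio that fits the raw stream into the link. */
double min_compression_ratio(double raw_bps, double link_bps)
{
    return raw_bps / link_bps;
}

/* Print the figures for one configuration. */
void print_budget(unsigned width, unsigned height, unsigned fps, double link_bps)
{
    double raw = raw_bitrate_bps(width, height, fps);
    printf("frame: %lu bytes, raw: %.2f Mb/s, needed ratio: %.1f:1\n",
           yuv420_frame_bytes(width, height), raw / 1e6,
           min_compression_ratio(raw, link_bps));
}
```

Under these assumptions a VGA frame is 460,800 bytes, the raw stream is 92.16 Mb/s, and the encoder must achieve at least 16:1 just to match the nominal uplink peak; the usable uplink rate in a moving vehicle is lower, so the practical requirement is higher.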

3.2.4 Porting the embedded Boa server

Linux supports several Web servers, such as Boa, httpd, and thttpd. Of these, Boa is the best suited to embedded systems because it is a single-tasking Web server that supports CGI (Common Gateway Interface). It serves user requests in sequence within a single process and does not fork a new process for each incoming connection. The executable is only about 60 KB.

First, enter the src subdirectory of the Boa source tree and run ./configure to generate a Makefile. Edit the Makefile: change CC = gcc to CC = arm-linux-gcc and CPP = gcc -E to CPP = arm-linux-gcc -E, then save and exit. Run make to compile; the resulting executable is boa. Finally, run arm-linux-strip boa to remove the debugging information and obtain the final program.

The second step is to configure Boa so that it can execute CGI programs. Create a boa directory under /etc and place Boa's main configuration file, boa.conf, in it. The main directives are:

AccessLog /var/log/boa/access_log: the access log file. If the path does not start with /, it is relative to the server root.

VerboseCGILogs: whether to log CGI execution information. It is logged if the directive is not commented out, and not logged otherwise.

DocumentRoot /var/www: the root directory of the HTML documents. If the path does not start with /, it is relative to the server root.

DirectoryMaker /usr/lib/boa/boa_indexer: when a user requests a directory that has no index file, Boa calls this program to generate one. Because this is slow, it is best disabled by commenting the directive out.

ScriptAlias /cgi-bin/ /var/www/cgi-bin/: maps the virtual path of CGI scripts to the actual path. All CGI scripts should normally be placed in the actual path; users run one by entering site + virtual path + CGI script name.

When modifying boa.conf, make sure that the other auxiliary files and settings are consistent with it, otherwise Boa will not work properly. In addition, create the directory /var/log/boa for log files, the directory /var/www for HTML documents, and the directory /var/www/cgi-bin/ for CGI scripts, and copy the mime.types file to the /etc directory.

3.2.5 Design of CGI Program

CGI gives a Web server a channel to external programs: a CGI program runs on the server, is triggered by input from the browser, and connects the Web server to other programs in the system. A CGI program is any program that conforms to this interface. The server receives the user's request and passes the data to the CGI program, which runs the application with the data the user supplied. When the application finishes, its result is returned through the Web server to the user's browser for display.

The design of the CGI module program mainly includes the following parts: Web server configuration, HTML page writing, and CGI script implementation.

(1) Web server configuration

The embedded Web server is Boa. Its configuration is provided as a text file placed in the /etc/httpd/conf/ directory of the file system. The main settings were described in the Boa porting section above.

(2) HTML page writing

Because the main goal of the system is real-time monitoring through a USB camera, unnecessary options are left out to keep the pages simple. The main HTML pages are the login page, the registration page, and the monitoring page. They are placed in the /var/www directory of the embedded file system.

(3) CGI script implementation

The second step to realize dynamic Web pages is to write CGI programs in C. CGI programs are divided into the following parts: receiving data from the submitted form according to the POST method or GET method; decoding URL encoding; using the printf() function to generate HTML source code, and correctly returning the decoded data to the browser.
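The three parts of a CGI program listed above (reading form data by request method, URL decoding, and generating HTML with printf()) can be sketched as follows. This is a minimal illustration, not the authors' program: the function names and the form field name "user" are hypothetical. REQUEST_METHOD, CONTENT_LENGTH, and QUERY_STRING are the standard CGI environment variables. The sketch also escapes the decoded value before echoing it into HTML.

```c
#include <ctype.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

static int hex_val(char c)
{
    return isdigit((unsigned char)c) ? c - '0'
                                     : tolower((unsigned char)c) - 'a' + 10;
}

/* Decode URL-encoded text in place: '+' becomes a space, %XX a byte. */
void url_decode(char *s)
{
    char *dst = s;
    while (*s) {
        if (*s == '+') {
            *dst++ = ' ';
            s++;
        } else if (*s == '%' && isxdigit((unsigned char)s[1]) &&
                   isxdigit((unsigned char)s[2])) {
            *dst++ = (char)(hex_val(s[1]) * 16 + hex_val(s[2]));
            s += 3;
        } else {
            *dst++ = *s++;
        }
    }
    *dst = '\0';
}

/* Read the raw form data: the body for POST, QUERY_STRING for GET. */
int read_form_data(char *buf, size_t size)
{
    const char *method = getenv("REQUEST_METHOD");
    if (method && strcmp(method, "POST") == 0) {
        const char *len_str = getenv("CONTENT_LENGTH");
        size_t len = len_str ? (size_t)strtoul(len_str, NULL, 10) : 0;
        if (len >= size)
            len = size - 1;
        len = fread(buf, 1, len, stdin);
        buf[len] = '\0';
        return 0;
    }
    const char *qs = getenv("QUERY_STRING");
    if (!qs)
        return -1;
    snprintf(buf, size, "%s", qs);
    return 0;
}

/* Copy the decoded value of parameter `name` into out.
 * Pairs are split on '&' before decoding. Returns 0 if found, else -1. */
int get_param(const char *query, const char *name, char *out, size_t size)
{
    size_t nlen = strlen(name);
    const char *p = query;
    while (p && *p) {
        const char *end = strchr(p, '&');
        size_t plen = end ? (size_t)(end - p) : strlen(p);
        if (plen > nlen && strncmp(p, name, nlen) == 0 && p[nlen] == '=') {
            size_t vlen = plen - nlen - 1;
            if (vlen >= size)
                vlen = size - 1;
            memcpy(out, p + nlen + 1, vlen);
            out[vlen] = '\0';
            url_decode(out);
            return 0;
        }
        p = end ? end + 1 : NULL;
    }
    return -1;
}

/* Print text with HTML special characters escaped. */
static void print_escaped(const char *s)
{
    for (; *s; s++) {
        switch (*s) {
        case '<': fputs("&lt;", stdout); break;
        case '>': fputs("&gt;", stdout); break;
        case '&': fputs("&amp;", stdout); break;
        case '"': fputs("&quot;", stdout); break;
        default:  putchar(*s);
        }
    }
}

/* Generate the response page; "user" is a hypothetical form field. */
void handle_request(void)
{
    char form[1024], user[64];
    printf("Content-Type: text/html\r\n\r\n<html><body>");
    if (read_form_data(form, sizeof(form)) == 0 &&
        get_param(form, "user", user, sizeof(user)) == 0) {
        printf("<p>Hello, ");
        print_escaped(user);
        printf("</p>");
    } else {
        printf("<p>No form data received.</p>");
    }
    printf("</body></html>\n");
}
```

A real CGI binary would call handle_request() from main() and be installed in the directory named by ScriptAlias.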

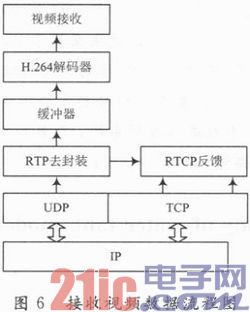

3.2.6 Design of remote video data receiving module

Popular browsers such as IE, Firefox, and Chrome can display ordinary text and the image formats that HTML supports, such as GIF and JPEG, but they cannot display real-time video data on their own. The real-time video receiving module therefore has to receive the video data, decapsulate the RTP packets, send RTCP feedback, decode the H.264 video, and display it in the Web browser.
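The RTP decapsulation step can be illustrated with a parser for the fixed RTP header as defined in RFC 3550. This is a sketch only: the struct and function names are assumptions, and it stops at locating the payload. How H.264 NAL units are packed into that payload (for example single NAL unit or fragmented modes) is not specified in the article and is not handled here.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t        marker;        /* M bit, often set on the last packet of a frame */
    uint8_t        payload_type;  /* 7-bit PT */
    uint16_t       seq;           /* sequence number, for loss detection */
    uint32_t       timestamp;
    uint32_t       ssrc;
    const uint8_t *payload;       /* points into the caller's packet */
    size_t         payload_len;
} rtp_packet;

static uint32_t be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Parse one RTP packet. Returns 0 on success, -1 if it is malformed. */
int rtp_parse(const uint8_t *pkt, size_t len, rtp_packet *out)
{
    size_t off, pad = 0;

    /* 12-byte fixed header, version field must be 2 */
    if (len < 12 || (pkt[0] >> 6) != 2)
        return -1;

    /* skip the CSRC list: CC entries of 4 bytes each */
    off = 12 + 4 * (size_t)(pkt[0] & 0x0F);
    if (off > len)
        return -1;

    /* skip the optional header extension (X bit) */
    if (pkt[0] & 0x10) {
        if (len - off < 4)
            return -1;
        off += 4 + 4 * (((size_t)pkt[off + 2] << 8) | pkt[off + 3]);
        if (off > len)
            return -1;
    }

    /* with the P bit set, the last byte counts the padding bytes */
    if (pkt[0] & 0x20) {
        pad = pkt[len - 1];
        if (pad == 0 || pad > len - off)
            return -1;
    }

    out->marker = pkt[1] >> 7;
    out->payload_type = pkt[1] & 0x7F;
    out->seq = (uint16_t)((pkt[2] << 8) | pkt[3]);
    out->timestamp = be32(pkt + 4);
    out->ssrc = be32(pkt + 8);
    out->payload = pkt + off;
    out->payload_len = len - off - pad;
    return 0;
}
```

The sequence number and timestamp recovered here are also what a receiver needs to compute the loss and jitter statistics it reports back through RTCP.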

The system uses an ActiveX control embedded in the Web page to handle video reception, RTP processing, decoding, and final display. Most importantly, it uses double buffering to cope with the limited video decoding speed and achieve real-time playback. The flow chart is shown in Figure 6.
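The article does not describe its double-buffering scheme in detail, so the following is only one plausible sketch of the idea for a single receiver thread and a single decoder thread: the receiver fills a back buffer while the decoder reads the front buffer, and the two are swapped under a lock. All names, the FRAME_MAX bound, and the use of POSIX threads are assumptions; an ActiveX control on Windows would use the platform's own synchronization primitives.

```c
#include <pthread.h>
#include <stddef.h>
#include <string.h>

#define FRAME_MAX 65536  /* assumed upper bound on one encoded frame */

typedef struct {
    unsigned char   data[2][FRAME_MAX];
    size_t          len[2];
    int             front;        /* buffer the decoder reads from */
    int             front_fresh;  /* 1 if front holds an unread frame */
    pthread_mutex_t lock;
} double_buffer;

void db_init(double_buffer *db)
{
    db->len[0] = db->len[1] = 0;
    db->front = 0;
    db->front_fresh = 0;
    pthread_mutex_init(&db->lock, NULL);
}

/* Receiver side: fill the back buffer without holding the lock, then
 * swap it to the front. An unread older frame is dropped, which keeps
 * playback close to real time. Returns 0, or -1 if the frame is too big. */
int db_write(double_buffer *db, const unsigned char *src, size_t n)
{
    int back;

    if (n > FRAME_MAX)
        return -1;
    back = 1 - db->front;  /* only the receiver ever changes front */
    memcpy(db->data[back], src, n);
    db->len[back] = n;

    pthread_mutex_lock(&db->lock);
    db->front = back;
    db->front_fresh = 1;
    pthread_mutex_unlock(&db->lock);
    return 0;
}

/* Decoder side: copy out the newest frame under the lock.
 * Returns the number of bytes copied, or 0 if no new frame is ready
 * or the frame does not fit into dst. */
size_t db_read(double_buffer *db, unsigned char *dst, size_t cap)
{
    size_t n = 0;

    pthread_mutex_lock(&db->lock);
    if (db->front_fresh && db->len[db->front] <= cap) {
        n = db->len[db->front];
        memcpy(dst, db->data[db->front], n);
        db->front_fresh = 0;
    }
    pthread_mutex_unlock(&db->lock);
    return n;
}
```

Because the decoder copies the front buffer while holding the lock and the receiver only swaps under the same lock, the receiver never overwrites a buffer that is being read.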

4 System Testing and Conclusion

This paper designs an embedded video surveillance system based on the ARM S3C2440. On the server side, video from the Vimicro USB camera is captured using V4L2 and passed to the H.264 encoding module for compression. The compressed data is encapsulated in RTP by the streaming media server, and the terminal interacts with the remote video surveillance client through the embedded Web server. The system has been tested on a live operator network. Positioning information is received once per second, and the dynamic positioning accuracy is within 10 m. The system achieves smooth dynamic video transmission at a maximum image resolution of VGA (640×480 pixels). Its video quality is better than that of vehicle monitoring systems based on 2.5G networks, which reach a maximum image resolution of 320×240 pixels and can essentially transmit only still images.
