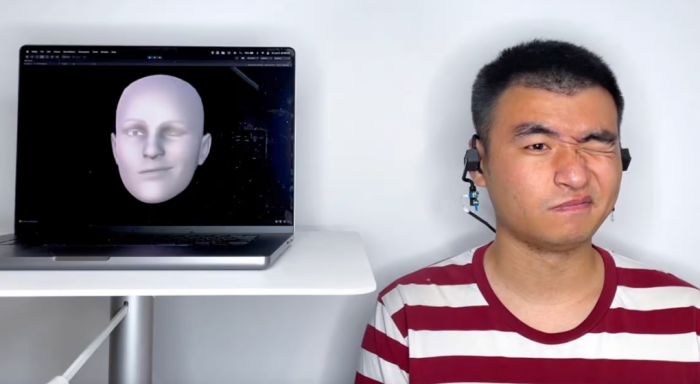

Cornell engineers have developed a new wearable device that can monitor a person's facial expressions through sonar and recreate them in a digital avatar. Removing the camera from the equation could ease privacy concerns.

The device, which the team calls EarIO, consists of an earpiece with a microphone and a speaker on each side, and it can be attached to any regular headset. Each speaker emits a sound pulse beyond the range of human hearing toward the wearer's face, and the microphone picks up the echo.

As the user makes various facial expressions or speaks, the echo profile will change slightly due to the way the user's skin moves, stretches and wrinkles. A specially trained algorithm can recognize these echo profiles and quickly reconstruct the expressions on the user's face and display them on the digital avatar.
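The article does not describe EarIO's signal processing in detail. As a rough illustration of what an "echo profile" can mean, the sketch below cross-correlates a received signal with the transmitted pulse, so that each index of the result corresponds to one echo delay. Everything in it is an assumption made for illustration: the 48 kHz sample rate, the 20 to 23 kHz sweep, the single simulated reflection, and the helper names `make_chirp`, `simulate_echo`, `echo_profile` and `peak_delay`. It is not the team's actual method.

```python
import math


def make_chirp(n_samples, f_start, f_end, sample_rate):
    """Linear frequency sweep used here as the transmitted pulse."""
    duration = n_samples / sample_rate
    sweep_rate = (f_end - f_start) / duration  # Hz per second
    pulse = []
    for i in range(n_samples):
        t = i / sample_rate
        phase = 2 * math.pi * (f_start * t + 0.5 * sweep_rate * t * t)
        pulse.append(math.sin(phase))
    return pulse


def simulate_echo(pulse, delay, gain, total_len):
    """Hypothetical microphone signal: one attenuated, delayed copy of the pulse."""
    received = [0.0] * total_len
    for i, sample in enumerate(pulse):
        received[delay + i] += gain * sample
    return received


def echo_profile(received, pulse):
    """Cross-correlate received audio with the pulse.

    Entry k is the correlation at a delay of k samples; strong
    reflections appear as large magnitudes at their delay.
    """
    n_lags = len(received) - len(pulse) + 1
    return [
        sum(received[k + i] * sample for i, sample in enumerate(pulse))
        for k in range(n_lags)
    ]


def peak_delay(profile):
    """Delay (in samples) with the largest correlation magnitude."""
    return max(range(len(profile)), key=lambda k: abs(profile[k]))


SAMPLE_RATE = 48_000  # assumed; not stated in the article
pulse = make_chirp(64, 20_000, 23_000, SAMPLE_RATE)  # assumed inaudible sweep

# Two made-up "expressions": the reflecting skin surface sits at
# slightly different distances, so the echo arrives at different delays.
neutral = echo_profile(simulate_echo(pulse, 10, 0.5, 128), pulse)
smiling = echo_profile(simulate_echo(pulse, 13, 0.5, 128), pulse)

print(peak_delay(neutral), peak_delay(smiling))
```

In a real system the profile would contain many overlapping reflections rather than one clean peak, which is why the article describes a trained algorithm, rather than a simple peak search, mapping echo profiles to expressions.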

"Through the power of artificial intelligence, the algorithm discovered complex connections between muscle movements and facial expressions that are invisible to the human eye," said study co-author Li Ke. "We can use this to infer complex information that is even harder to capture -- the entire frontal face."

The team tested the EarIO system on 16 participants, running the algorithm on a regular smartphone. The device was able to reconstruct facial expressions just as well as a regular camera. Background noise, such as wind, talking or street noise, did not interfere with its ability to recognize facial expressions.

The team says sonar has some advantages over using cameras. Acoustic data requires much less energy and processing power, which also means the equipment can be smaller and lighter. Cameras can also capture a lot of other personal information that users may not intend to share, so sonar could be more private.

As for what this technology might be used for, it could be a handy way to replicate your physical facial expressions on a digital avatar in games, VR, or virtual worlds.

The team said further work is still needed to tune out other distractions, such as when the user turns their head, and to simplify the system for training the AI algorithms.

The research was published in the Proceedings of the Association for Computing Machinery on Interactive, Mobile, Wearable and Ubiquitous Technologies.
