When the Hypertext Transfer Protocol (HTTP) was invented, no one could have anticipated today's Web 2.0 infrastructure or the capabilities of modern browsers. Today we "surf the web" several times a day. We communicate through web tools such as Facebook, MySpace and Flickr, not to mention regular email. We can shop online with peace of mind thanks to security extensions to the original protocol, now called HTTPS (the added "S" stands for "secure"). Meanwhile, machine-to-machine (M2M) applications run in the background, handling tasks such as database updates and weather data collection.

All of these systems are based on the client-server model: a client (such as a browser) talks to a server that provides content or collects information. Traditionally, the server is assigned one or more IP addresses, and the server software then serves content to clients. When a new website is created, it is allocated server resources and dedicated storage space. This model generally works well when the load is stable (i.e., it does not fluctuate much). For example, suppose a hosting provider knows that a server can serve 10 million web pages per second (assuming the communication bandwidth is available), and it also knows the maximum page-hit rate of each hosted website. It can then calculate the load on the server and maintain peak performance for its customers (both the hosting customers and their users).
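The calculation can be sketched by comparing the sum of each hosted site's peak page-hit rate with the server's page-serving capacity. This is a worst case that assumes every site peaks at the same moment. The site names, hit rates, and the 80% headroom threshold are hypothetical. Only the 10-million-pages-per-second capacity is the article's own example figure.

```python
def server_utilization(peak_hits_per_s: dict[str, float], capacity_pages_per_s: float) -> float:
    """Fraction of capacity used if every hosted site hits its peak at once (worst case)."""
    return sum(peak_hits_per_s.values()) / capacity_pages_per_s


def fits(peak_hits_per_s: dict[str, float], capacity_pages_per_s: float, headroom: float = 0.8) -> bool:
    """True if the combined peak stays within a chosen fraction of capacity (hypothetical policy)."""
    return server_utilization(peak_hits_per_s, capacity_pages_per_s) <= headroom


# Hypothetical hosted sites and their peak page-hit rates (pages per second).
sites = {"dictionary.example": 2_000_000, "shop.example": 3_500_000, "blog.example": 500_000}
print(server_utilization(sites, 10_000_000))  # 0.6
print(fits(sites, 10_000_000))                # True
```

The headroom factor leaves spare capacity for estimation error. A provider that trusts its peak figures could set it closer to 1.0.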

The Impact of Web 2.0

This works well when all the content on a site is static (i.e., the content rarely changes). An online dictionary that offers only word lookup (no videos, music, etc.) is a good example, and its load is easy to calculate. Statistically, not everyone in the world will want to look up the definition of "stochastic" at the same time. You might expect the load to vary with the time of day, but globally someone is always looking up a word.

Web protocols work like this: you open a session with the server, receive the content, and then close the session, which frees server resources for other tasks. At that point all the content is in the browser. So when you look up a word, the definition and any graphics are sent to you, and you can read them at your own pace while the server moves on to other requests.

That is no longer the whole story. What happens when you download a music video? The server no longer just serves a web page and moves on. It now has to transfer a 40-megabyte file to your machine. If the page has an embedded player, the server streams the video to the client in real time. Even so, the load can still be monitored statistically and the site's resources adjusted. As popularity or demand grows, the site can be moved to a dedicated server that hosts only that one domain.
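Little's law shows why long transfers change the picture. The average number of transfers in progress equals the request rate multiplied by how long each transfer takes. In the sketch below, the request rate, page size, and 2 Mbit/s client download speed are hypothetical. Only the 40 MB file size comes from the text.

```python
def concurrent_transfers(requests_per_s: float, bytes_per_request: float, client_bits_per_s: float) -> float:
    """Little's law: transfers in progress = arrival rate x time each transfer occupies the server."""
    # Multiply before dividing so these example numbers stay exact in floating point.
    return requests_per_s * bytes_per_request * 8 / client_bits_per_s


# Hypothetical workload: 100 requests/s, clients downloading at 2 Mbit/s.
page = concurrent_transfers(100, 20_000, 2_000_000)       # ~20 kB dictionary page
video = concurrent_transfers(100, 40_000_000, 2_000_000)  # 40 MB music video
print(page, video)  # 8.0 16000.0
```

At the same request rate, the server has to keep about 2,000 times as many transfers open. This is why the video site, unlike the dictionary, does not simply "move on".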

That was the situation with Web 1.0. The problem with today's Web 2.0 is that much of the work is done on the server side. For example, Google Docs is a complete document editing and archiving system that runs on the server. It uses the browser as its user interface but uses few client resources. As more people adopt such web applications, more of the work happens in the background on servers. This growing interaction between server and client makes server load fluctuate wildly, and performance degrades unless steps are taken to ensure adequate resources.

Seeking a solution

In the past, an inefficient way to keep websites from crashing was to provision each domain with enough resources for its statistically highest load. Most of the time these servers might run at only 40-60% of capacity. At peak times they would reach 100%, and the website would keep working. People soon realized that the servers were not maxed out most of the time: the extra capacity sat idle until a peak arrived, and the timing of that peak was not always predictable. For example, a news site might see normal traffic on an ordinary day. When a major story breaks and everyone goes online to look for related photos or videos, the surge can crash the site.
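You can quantify the waste in peak provisioning by sizing a server for the highest load seen in a day and then computing its average utilization. The hourly load trace below is invented for illustration. With this trace, the server is sized for a single busy hour and runs at roughly half capacity on average.

```python
def average_utilization(hourly_load: list[float]) -> float:
    """Average utilization of a server sized for the highest load seen in the trace."""
    peak = max(hourly_load)
    return sum(hourly_load) / (len(hourly_load) * peak)


# Hypothetical day on a news site: 20 quiet hours, 3 busier hours, 1 breaking-news hour.
trace = [50.0] * 20 + [60.0] * 3 + [100.0]
print(f"{average_utilization(trace):.0%}")  # 53%
```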

The best solution is to "virtualize" the server: create software that looks like a dedicated server but can dynamically draw on more resources when needed. When the high load subsides, the software can "slim down" by consolidating more websites onto a single machine (a blade, in modern servers). The unused blades can then be put into standby, which greatly reduces the data center's power consumption. This approach cuts not only the servers' own power consumption but also the HVAC cooling load, lowering the overall energy cost of the server room.
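The article does not say how the virtualization software chooses which websites to consolidate. One common way to frame the problem is as bin packing. The sketch below substitutes the first-fit decreasing heuristic for illustration and does not claim it is what any particular product uses. Site loads are hypothetical percentages of one blade's capacity.

```python
def consolidate(site_loads: dict[str, int], blade_capacity: int, total_blades: int):
    """First-fit decreasing: place the largest loads first, each on the first blade with room.

    Returns (blades, standby) where blades lists the sites on each active blade
    and standby is how many blades can be put into standby.
    """
    blades: list[list[str]] = []
    free: list[int] = []
    for site, load in sorted(site_loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > blade_capacity:
            raise ValueError(f"{site} needs more than one blade")
        for i, room in enumerate(free):
            if load <= room:               # fits on an already-active blade
                blades[i].append(site)
                free[i] -= load
                break
        else:                              # no active blade has room: wake another one
            blades.append([site])
            free.append(blade_capacity - load)
    if len(blades) > total_blades:
        raise RuntimeError("not enough blades for the current load")
    return blades, total_blades - len(blades)


# Hypothetical night-time loads (percent of one blade) for five sites on an 8-blade chassis.
night = {"a": 50, "b": 30, "c": 20, "d": 40, "e": 60}
blades, standby = consolidate(night, blade_capacity=100, total_blades=8)
print(blades, standby)
```

When load rises again, rerunning the packing with the new figures spreads the sites back over more blades.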

Impact on the server

This is a major step toward the "greening" of data centers and server farms. Energy consumption goes down, but changes in software often affect the hardware as well (and vice versa). What impact does this kind of load shedding have on the system hardware and the surrounding infrastructure?

The first thing to look at is the power supply for the blade servers. Typically, a blade server chassis has two redundant power supplies that convert AC mains power to a DC bus. The bus runs along the length of the backplane (into which all the blades plug), and each blade has its own power regulator to provide the correct voltage and current. In larger systems, the DC bus can run up the height of the rack to serve multiple blade chassis stacked on top of one another.

Designing a power supply requires a specification known as the target load. It tells the designer where the conversion should be most efficient, and that guides component selection. The design equations give the component values at which the system operates most efficiently. Because this is a fixed operating point, moving the load away from it (in most cases, reducing the load) moves the supply down its efficiency curve. If the peak efficiency at the target load is 92%, reducing the load to 25% of the target may cause the efficiency to drop to 75%.
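With the 92% and 75% figures above and a hypothetical 1,000 W target load, a little arithmetic shows why light-load efficiency matters. The converter dissipates almost as many watts at a quarter of its target load as it does at full load.

```python
def conversion_loss_w(output_w: float, efficiency: float) -> float:
    """Power dissipated in the converter: input power minus delivered output power."""
    return output_w / efficiency - output_w


target_w = 1000.0                                   # hypothetical target load
full = conversion_loss_w(target_w, 0.92)            # at the target load
quarter = conversion_loss_w(0.25 * target_w, 0.75)  # at 25% of the target load
print(f"{full:.1f} W lost at full load, {quarter:.1f} W lost at 25% load")
# 87.0 W lost at full load, 83.3 W lost at 25% load
```

A blade chassis that spends most of its time consolidated down to light load therefore wastes far more energy, relative to the work done, than the headline efficiency figure suggests.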

Power supply designers suddenly face a new challenge: delivering high efficiency over a wide load range. Modern switching power supplies use high-power FETs to switch the power on and off, using pulse width modulation (PWM) among other methods. The result is a pulsed waveform whose average value is the new, lower voltage. A high-power filter made of inductors and capacitors smooths this waveform into a clean DC voltage. A controller monitors the output and varies the switching of the FETs to keep the output stable as the load and input vary.
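For a step-down (buck) converter, you can check numerically that the average of the switched waveform is the new, lower voltage. The sketch assumes an ideal buck converter, where V_out = D x V_in for duty cycle D, running from a hypothetical 12 V bus. The LC filter is idealized as a simple average over whole switching periods.

```python
def pwm_waveform(v_in: float, duty: float, samples_per_period: int = 1000, periods: int = 10) -> list[float]:
    """Ideal switch-node voltage: v_in while the FET is on, 0 V while it is off."""
    on_samples = round(duty * samples_per_period)
    one_period = [v_in] * on_samples + [0.0] * (samples_per_period - on_samples)
    return one_period * periods


def average(values: list[float]) -> float:
    """Stands in for an ideal LC filter: the DC component of the waveform."""
    return sum(values) / len(values)


v_in = 12.0          # hypothetical DC bus voltage
duty = 1.2 / v_in    # ideal buck: D = V_out / V_in, here 0.1 for a 1.2 V output
wave = pwm_waveform(v_in, duty)
print(round(average(wave), 3))  # 1.2
```

In a real supply the controller adjusts the duty cycle continuously, because losses and load changes pull the output away from the ideal D x V_in.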

The FETs, inductors, and capacitors are all chosen to meet the load specification, and once they are in the circuit their values cannot be changed dynamically. If the load drops below the design specification, the losses in these components make up a larger share of the delivered power, and efficiency falls. One solution is to build a multiphase converter. In high-current power supplies (such as the motherboard regulators that provide the core voltage to the processor in a personal computer), it is very common to run 3 or 4 converter phases in parallel, with each phase taking its turn to supply power to the load.
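In an interleaved multiphase converter, every phase switches at the same frequency but starts 1/N of a switching period after the previous one. The phases therefore take turns supplying the load, and their output ripple partly cancels. The sketch computes those turn-on delays and the resulting ripple frequency (N times the switching frequency) for a hypothetical 4-phase, 500 kHz regulator. These values are illustrative and do not come from the article.

```python
def interleave(n_phases: int, f_sw_hz: float) -> tuple[list[float], float]:
    """Turn-on delay of each phase within one switching period, and the combined ripple frequency."""
    period_s = 1.0 / f_sw_hz
    delays_s = [k * period_s / n_phases for k in range(n_phases)]  # phases spaced 360/N degrees apart
    ripple_hz = n_phases * f_sw_hz                                  # combined ripple repeats N times per period
    return delays_s, ripple_hz


delays, ripple = interleave(4, 500e3)  # hypothetical 4-phase, 500 kHz core-voltage regulator
print([round(d * 1e9) for d in delays], ripple)  # [0, 500, 1000, 1500] 2000000.0
```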

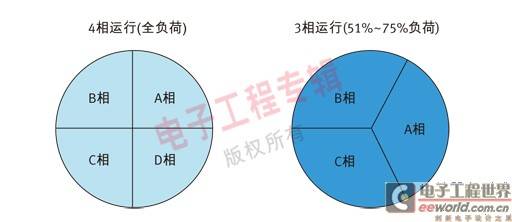

The advantage of this topology is that when the load decreases, some phases can be shut down and the remaining phases take over their share of the load (see Figure 1). This makes the power supply more complex, because the controller must keep the output from changing during the transition when a phase is added or dropped. In return, every converter phase either operates near peak efficiency or is shut down. Applying this approach to large DC bus power supplies allows blade servers to operate efficiently over a wide load range.

Figure 1 - Phase shedding with load variation
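A phase-shedding controller has to decide how many phases to run for the present load. The sketch below assumes a hypothetical per-phase target current, taken to be the current at which one phase is most efficient. It adds a small hysteresis margin so the phase count does not toggle back and forth near a threshold. It does not model the output-transition handling described above, which real controllers must also manage.

```python
import math


def phases_needed(load_a: float, amps_per_phase: float, max_phases: int) -> int:
    """Smallest phase count that keeps each active phase at or below its target current."""
    if load_a <= 0:
        return 1  # keep one phase running so the output stays in regulation
    return min(max_phases, math.ceil(load_a / amps_per_phase))


def next_phase_count(current: int, load_a: float, amps_per_phase: float,
                     max_phases: int, hysteresis: float = 0.1) -> int:
    """Add phases as soon as the load needs them; shed one at a time, and only when
    the remaining phases would still sit below target by the hysteresis margin."""
    needed = phases_needed(load_a, amps_per_phase, max_phases)
    if needed > current:
        return needed
    if needed < current and load_a <= (current - 1) * amps_per_phase * (1 - hysteresis):
        return current - 1
    return current


# Hypothetical 4-phase regulator whose phases are most efficient at about 25 A each.
phases = 4
for load in [90, 72, 60, 40, 10, 55]:
    phases = next_phase_count(phases, load, amps_per_phase=25, max_phases=4)
    print(f"{load:>3} A -> {phases} phases, {load / phases:.1f} A per phase")
```

Adding phases immediately but shedding them one at a time is a deliberately cautious choice. A sudden load step is more dangerous to the output than running one extra phase for a few cycles.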

Impact on infrastructure

As with power, the communications infrastructure that carries the information is also affected. Each blade server communicates with a switch over one or more Gigabit Ethernet connections. The PHYs (physical-layer transceivers) in both the server and the switch consume significant power, and across a data center that adds up quickly. When a blade server is placed in standby, its PHY is usually not turned off: the link is still maintained, but no data is exchanged. In most cases this barely reduces the PHY's power consumption, because the PHY still has to maintain the link. Even if the server-side PHY is turned off, the switch-side PHY must stay powered to monitor for link activity, which again consumes energy.

Several approaches are being taken to address this problem. PHYs that can enter a standby or deliberate low-power state when the link is lost reduce energy consumption. The Institute of Electrical and Electronics Engineers (IEEE) has also formed the 802.3az task force (Energy Efficient Ethernet). Its goal is to develop protocols that let new PHYs reduce their power consumption during periods of low utilization while keeping the link active.
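The 802.3az approach, published as Energy Efficient Ethernet, lets a PHY drop into a low-power idle mode between bursts of traffic while the link stays up. The sketch below is an idealized energy estimate that ignores the time and energy spent entering and leaving low-power idle. The 4 W active figure, the 0.4 W idle figure, and the 5% utilization are hypothetical.

```python
def phy_energy_wh(hours: float, utilization: float, active_w: float, idle_w: float) -> float:
    """Idealized PHY energy: active power while sending data, idle power the rest of the time.
    Transition time and energy for entering/leaving low-power idle are ignored."""
    return hours * (utilization * active_w + (1 - utilization) * idle_w)


# Hypothetical figures for one PHY over a day on a lightly used (5%) link.
legacy = phy_energy_wh(24, 0.05, active_w=4.0, idle_w=4.0)  # idle link still draws full power
eee = phy_energy_wh(24, 0.05, active_w=4.0, idle_w=0.4)     # assumed low-power idle level
# Both ends of the link (server and switch) have a PHY, so double the per-PHY figure.
print(f"legacy: {2 * legacy:.1f} Wh/link/day, low-power idle: {2 * eee:.1f} Wh/link/day")
```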

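Another approach targets the power consumed by the semiconductor process itself. The dynamic power consumption of CMOS logic scales linearly with clock frequency and with the square of the supply voltage (see Equation 1).

$$P_{dyn} = \alpha C V_{DD}^{2} f$$

Equation 1 – Dynamic power consumption of CMOS, where $\alpha$ is the switching activity factor, $C$ the switched capacitance, $V_{DD}$ the supply voltage and $f$ the clock frequency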
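Voltage scaling pays off because dynamic CMOS power, P = αCV²f, depends on the square of the supply voltage. In the sketch below, the activity factor, capacitance, supply, and clock values are hypothetical. Leakage power, which this simple model ignores, is not included.

```python
def dynamic_power_w(alpha: float, c_farads: float, v_dd: float, f_hz: float) -> float:
    """CMOS dynamic (switching) power: P = alpha * C * V_DD^2 * f. Leakage is not modeled."""
    return alpha * c_farads * v_dd ** 2 * f_hz


base = dynamic_power_w(0.2, 1e-9, 1.0, 500e6)    # hypothetical activity, capacitance, supply, clock
scaled = dynamic_power_w(0.2, 1e-9, 0.9, 500e6)  # same clock, supply lowered by 10%
print(round(scaled / base, 2))  # 0.81
```

A 10% lower supply at the same clock removes about 19% of the switching power. Lowering the frequency by the same 10% would save only 10%.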

In the past, techniques such as dynamic voltage scaling in PC processors were used to reduce these losses. A more recent technique is adaptive voltage scaling (AVS), pioneered by National Semiconductor and used in physical layer devices such as the Teranetics TN2022 10GBASE-T PHY. AVS continuously monitors the performance of the device's own silicon and lowers the supply voltage to the minimum the device needs to keep operating correctly. Compared with a fixed supply voltage, this can save 20% to 50% of the energy. It also compensates during operation for temperature and for process variation, including aging. Combined with other techniques, AVS can greatly reduce the energy consumption of infrastructure equipment and automatically adapt to load changes as servers join or leave the network.
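The closed loop behind AVS can be sketched as follows: measure how much timing margin the silicon has, then nudge the supply up or down. This is not National Semiconductor's implementation. The `Chip` delay model, its parameters, and the measured "slack" are hypothetical stand-ins for on-chip performance monitoring. The second chip's larger delay constant represents slower silicon, such as a hotter or aged die.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    """Toy silicon model with hypothetical numbers (not data for any real PHY)."""
    vt: float  # threshold voltage in volts
    d0: float  # delay constant in ns*V; larger means slower silicon, e.g. a hotter or aged die

    def critical_path_delay_ns(self, v: float) -> float:
        # Simplified model: delay rises steeply as the supply approaches the threshold voltage.
        return self.d0 / (v - self.vt)


def avs_step(chip: Chip, v_mv: int, period_ns: float, margin_ns: float,
             band_ns: float, step_mv: int, v_min_mv: int, v_max_mv: int) -> int:
    """One pass of a hypothetical AVS loop: measure timing slack, then nudge the supply."""
    slack_ns = period_ns - chip.critical_path_delay_ns(v_mv / 1000)
    if slack_ns < margin_ns:
        return min(v_mv + step_mv, v_max_mv)  # too close to failing timing: raise the voltage
    if slack_ns > margin_ns + band_ns:
        return max(v_mv - step_mv, v_min_mv)  # more margin than needed: lower the voltage
    return v_mv                               # inside the hysteresis band: hold


def run_avs(chip: Chip, iterations: int = 100) -> int:
    """Start from a fixed 1.0 V supply and let the loop settle at an 800 MHz (1.25 ns) clock."""
    v_mv = 1000
    for _ in range(iterations):
        v_mv = avs_step(chip, v_mv, period_ns=1.25, margin_ns=0.1, band_ns=0.05,
                        step_mv=10, v_min_mv=700, v_max_mv=1000)
    return v_mv


fast = run_avs(Chip(vt=0.35, d0=0.5))
slow = run_avs(Chip(vt=0.35, d0=0.6))
print(fast, slow)  # 800 890
```

Both dies settle below the fixed 1.0 V supply, and the slower die settles higher. This is the per-device compensation that a fixed supply voltage cannot provide.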

In conclusion

So what comes next? A flood of netbooks with little processing power of their own is pushing more of the workload back onto servers. Soon, very little software or data may be stored on these computers, and most files will be stored "virtually" on servers. The traditional software tools for creating and sharing documents and presentations will run on the server as well.

Online gaming is also on the rise. Many games require extremely high-performance computing to render their realistic scenes. That processing could move to the server side, with only a real-time video stream sent to the user's computer. This could let low-performance netbooks and other computing devices (including handheld mobile devices such as the iPhone) play high-performance games.

People are mobile, and the evolution of mobile devices will push more resource demands back onto data centers and infrastructure. Because network activity fluctuates over a wide range, virtualization will continue to deliver energy savings, while hardware will need to find new ways to adapt to changing loads.
