Reduce speech transcription costs by up to 90% using conversational artificial intelligence (CAI)

Introduction to conversational artificial intelligence (CAI)

What is conversational artificial intelligence (CAI)?

Conversational artificial intelligence (CAI) uses deep learning (DL), a subset of machine learning (ML), to automate speech recognition, natural language processing, and text-to-speech through machines. The CAI process is usually described by three key functional modules:

1. Speech-to-text (STT), also known as automatic speech recognition (ASR)

2. Natural language processing (NLP)

3. Text-to-speech (TTS) or speech synthesis

Figure 1: Conversational AI building blocks

This white paper details the use cases for automatic speech recognition (ASR) and how Achronix can reduce the cost of implementing ASR solutions by up to 90%.

Market segments and application scenarios

With more than 110 million virtual assistants in use in the United States alone[1], most people are familiar with using CAI services. Key examples include voice assistants on mobile devices, such as Apple’s Siri or Amazon’s Alexa; voice search assistants on laptops, such as Microsoft’s Cortana; automated call center answering assistants; and voice-enabled devices, such as smart speakers, televisions, and cars.

The deep learning algorithms that power these CAI services can be processed locally on electronic devices or aggregated in the cloud for remote, large-scale processing. Large-scale deployments that support millions of user interactions are a huge computational processing challenge, and hyperscale providers have developed specialized chips and devices to handle these services.

Today, most small businesses can easily add voice interfaces to their products using cloud APIs from providers such as Amazon, IBM, Microsoft, and Google. However, as these workloads scale (a specific example is described later in this white paper), the cost of these cloud APIs becomes prohibitive, forcing businesses to seek other solutions. In addition, many enterprises have stricter data security requirements, so the solution must remain within the enterprise's own security perimeter.

Enterprise-level CAI solutions can be used in the following application scenarios:

• Automated call center

• Voice and video communication platform

• Health and medical services

• Financial and banking services

• Retail and vending equipment

ASR processing in detail

ASR is the first step in the CAI process, where speech is transcribed into text. Once the text is available, it can be processed in a variety of ways using natural language processing (NLP) algorithms. NLP tasks include key content identification, sentiment analysis, indexing, contextualizing content, and analytics. In an end-to-end conversational AI pipeline, speech synthesis is then used to generate natural voice responses.

State-of-the-art ASR algorithms are implemented using end-to-end deep learning. In speech recognition, recurrent neural networks (RNNs) are more common than convolutional neural networks (CNNs). As David Petersson of TechTarget points out in the article “CNN vs. RNN: How Are They Different?” [10], RNNs are better suited to processing temporal data, which makes them a good fit for ASR. RNN-based models require high compute performance and high memory bandwidth to meet the strict latency targets of interactive systems. When real-time or automated responses arrive too slowly, the system appears sluggish and unnatural. Low latency is often achieved only at the expense of processing efficiency, which raises costs, sometimes to the point where deployment becomes impractical.
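To make the compute and bandwidth demand concrete, the sketch below implements one step of a plain (Elman) recurrent layer in pure Python and counts the weight values a single step has to read. The layer sizes and all function names are illustrative assumptions; they are not taken from the Myrtle.ai or Achronix design, and production ASR networks use larger, gated layers.

```python
import math
import random


def make_layer(input_size, hidden_size, seed=0):
    """Build small random weights for one recurrent layer (illustrative sizes)."""
    rng = random.Random(seed)
    w_xh = [[rng.uniform(-0.1, 0.1) for _ in range(input_size)]
            for _ in range(hidden_size)]
    w_hh = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
            for _ in range(hidden_size)]
    bias = [0.0] * hidden_size
    return w_xh, w_hh, bias


def rnn_step(x, h, w_xh, w_hh, bias):
    """One Elman RNN step: h_new = tanh(W_xh @ x + W_hh @ h + bias)."""
    h_new = []
    for row_x, row_h, b in zip(w_xh, w_hh, bias):
        acc = b
        acc += sum(w * v for w, v in zip(row_x, x))
        acc += sum(w * v for w, v in zip(row_h, h))
        h_new.append(math.tanh(acc))
    return h_new


def weights_read_per_step(input_size, hidden_size):
    """Weight and bias values touched by a single step for a single stream."""
    return hidden_size * (input_size + hidden_size) + hidden_size


def run_stream(frames, hidden_size, seed=0):
    """Process feature frames in order; step t needs the state from step t-1."""
    w_xh, w_hh, bias = make_layer(len(frames[0]), hidden_size, seed)
    h = [0.0] * hidden_size
    for x in frames:
        h = rnn_step(x, h, w_xh, w_hh, bias)
    return h
```

Because each step depends on the previous hidden state, the time steps of one stream cannot run in parallel, and every step touches the full weight set again. For a single real-time stream this makes weight movement, not arithmetic alone, a large part of the cost.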

Achronix has collaborated with Myrtle.ai, a technology company that specializes in AI inference on field-programmable gate arrays (FPGAs). Myrtle.ai uses its MAU inference acceleration engine to implement high-performance RNN-based networks on FPGAs. The design has been integrated into the Achronix Speedster®7t AC7t1500 FPGA, where it exploits key advantages of the Speedster7t architecture (discussed later in this white paper) to accelerate real-time ASR neural networks, increasing the number of real-time data streams (RTS) that can be processed by 2500% compared with server-class central processing units (CPUs).

Data Accelerator: How to Achieve a Balanced Allocation of Resources

Data accelerators can significantly reduce server footprint by offloading compute, network, and/or storage workloads that are typically handled by the main CPU. This white paper describes replacing up to 25 servers with one server fitted with an Achronix-based ASR accelerator card. This architecture significantly reduces workload cost, power consumption, and latency while increasing workload throughput. However, using data acceleration hardware to achieve high performance and low latency only makes sense if the hardware is used efficiently and deployment is cost-effective.
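The consolidation arithmetic can be sketched as follows. The workload size (1000 concurrent streams) and the capacity of a CPU-only server (40 streams) are hypothetical values chosen for illustration; only the factor of 25 is read from the 25-to-1 server replacement described above.

```python
import math


def servers_needed(total_streams, streams_per_server):
    """Smallest whole number of servers that can host the requested streams."""
    if streams_per_server <= 0:
        raise ValueError("streams_per_server must be positive")
    return math.ceil(total_streams / streams_per_server)


def consolidation(total_streams, cpu_streams_per_server, speedup):
    """Return (CPU-only servers, accelerated servers, fraction of servers removed)."""
    if total_streams <= 0:
        raise ValueError("total_streams must be positive")
    cpu_servers = servers_needed(total_streams, cpu_streams_per_server)
    accel_servers = servers_needed(total_streams,
                                   cpu_streams_per_server * speedup)
    removed = 1 - accel_servers / cpu_servers
    return cpu_servers, accel_servers, removed


# Hypothetical workload: 1000 concurrent streams, 40 streams per CPU-only server.
# The factor of 25 comes from the 25-to-1 replacement figure in the text.
cpu, accel, removed = consolidation(1000, 40, 25)
print(cpu, accel, f"{removed:.0%}")
```

The fraction of servers removed is not the same as the cost saving, because the accelerated server also carries the cost and power of the accelerator card.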

ASR models are challenging for modern data accelerators and often require manual tuning to reach more than single-digit percentage efficiency relative to the platform's headline performance specifications. Real-time ASR workloads require high memory bandwidth as well as high compute performance. The data required for these large neural networks is typically stored in DDR memory on the accelerator card. Transferring data from external memory to the compute engine is a performance bottleneck in this workload, especially in real-time deployments.

Graphics processing unit (GPU) architectures are based on a data-parallel model, so smaller batch sizes result in lower utilization of the GPU hardware, leading to increased cost and reduced efficiency. The performance figures in accelerator data sheets, quoted in tera operations per second (TOPS), are not always a good representation of actual performance, because many hardware acceleration devices are underutilized due to bottlenecks in the device architecture. These TOPS figures emphasize the processing power of the accelerator's compute engine but ignore key factors such as batch size, the speed and size of external memory, and the ability to transfer data between external memory and the compute engine. For ASR workloads, memory bandwidth and efficient data movement within the accelerator are a better guide to achievable performance and efficiency.

Accelerators must have large external memory with very high bandwidth. Today's high-end accelerators typically use high-performance external memory with a capacity of 8-16 GB and a bandwidth of up to 4 Tbps. The accelerator must also be able to move this data to the compute engine without degrading performance. However, regardless of how the data path between high-speed memory and the compute engine is implemented, it is almost always a system performance bottleneck, especially in low-latency applications such as real-time ASR.
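A simple roofline-style estimate illustrates why a TOPS figure alone can mislead for small batches. The sketch below assumes that 8-bit weights are streamed from external memory on every step, that activation traffic is negligible, and that the data sheet peak is 60 TOPS; the peak value and the function names are hypothetical. Only the 4 Tbps memory bandwidth comes from the text.

```python
def attainable_ops_per_s(peak_ops_per_s, mem_bytes_per_s, ops_per_byte):
    """Roofline bound: limited by the compute peak or by memory traffic."""
    return min(peak_ops_per_s, mem_bytes_per_s * ops_per_byte)


def matvec_ops_per_byte(batch_size, bytes_per_weight=1):
    """Each fetched weight serves one multiply and one add per stream in the batch."""
    return 2 * batch_size / bytes_per_weight


PEAK_OPS = 60e12            # hypothetical data sheet peak (60 TOPS), an assumption
MEM_BYTES_PER_S = 4e12 / 8  # 4 Tbps of external memory bandwidth = 500 GB/s


def efficiency(batch_size):
    """Fraction of the data sheet peak that the memory system can feed."""
    ops = attainable_ops_per_s(PEAK_OPS, MEM_BYTES_PER_S,
                               matvec_ops_per_byte(batch_size))
    return ops / PEAK_OPS


for batch in (1, 8, 64):
    print(batch, f"{efficiency(batch):.1%}")
```

Under these assumptions a single stream is limited to 1 TOPS by memory traffic, under 2% of the assumed peak, and the peak is reached only once enough streams share each weight fetch. Larger batches, however, add latency, which is the trade-off real-time ASR cannot afford.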

FPGAs are designed to provide optimal data routing between memory and compute, which makes them an excellent acceleration platform for these workloads.

Comparison of Achronix solutions with other FPGA solutions

In the field of machine learning (ML) acceleration, existing FPGA architectures claim inference performance of up to 150 TOPS. However, in real-world applications, especially latency-sensitive ones such as ASR, these FPGAs fall far short of their claimed maximum because they cannot efficiently transfer data between external memory and the compute engine in the FPGA device. The Achronix Speedster7t architecture strikes a good balance between the compute engine, high-speed memory interfaces, and data transfer, allowing Speedster7t FPGA devices to deliver high performance for real-time, low-latency ASR workloads and to achieve 64% of the maximum TOPS rate.

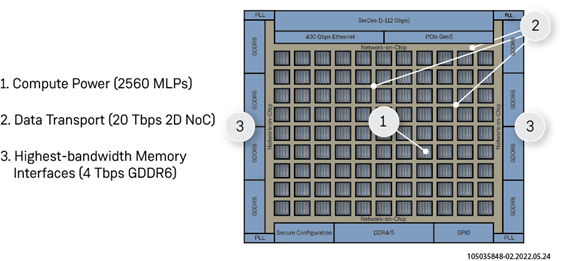

Figure 2: Computation, storage, and data transfer capabilities of the Speedster7t device

How the Speedster7t architecture achieves higher computing efficiency

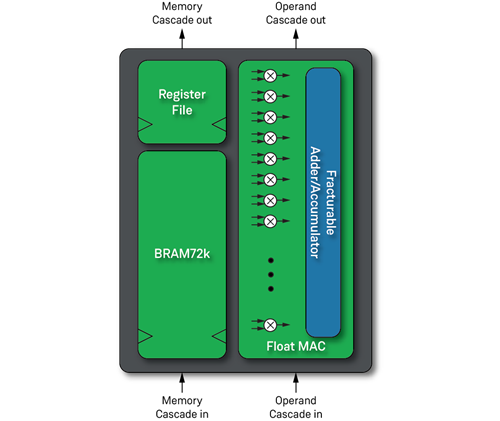

The Machine Learning Processor (MLP) on the Speedster7t is an optimized matrix/vector multiplication module that can perform 32 multiplications and 1 accumulation in a single clock cycle and is the foundation of the compute engine architecture. The Block RAM (BRAM) in the AC7t1500 device is co-located with all 2560 MLP instances, which means lower latency and higher throughput.

With these key architectural units, Myrtle.ai’s MAU low-latency, high-throughput ML inference engine has been integrated into the Speedster7t FPGA device.

The resulting ASR solution uses 2000 of the 2560 MLPs. Because the MLP is a hard block, it can run at a higher clock rate than the FPGA logic array itself.
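The MLP figures above translate into a utilization and a peak multiplication rate as sketched below. The MLP counts and the 32 multiplications per cycle come from the text; the clock rate is a hypothetical placeholder, since the text does not state one.

```python
TOTAL_MLPS = 2560          # MLP instances in the AC7t1500 (from the text)
USED_MLPS = 2000           # MLPs used by the ASR design (from the text)
MULTIPLIES_PER_CYCLE = 32  # multiplications per MLP per clock cycle (from the text)


def mlp_utilization(used=USED_MLPS, total=TOTAL_MLPS):
    """Fraction of the device's MLP blocks occupied by the design."""
    return used / total


def multiplies_per_second(mlp_count, clock_hz):
    """Peak multiplication rate if every counted MLP is busy on every cycle."""
    return mlp_count * MULTIPLIES_PER_CYCLE * clock_hz


ASSUMED_CLOCK_HZ = 500e6  # hypothetical clock rate; the text does not state one

print(f"MLP utilization: {mlp_utilization():.1%}")
print(f"Peak rate, used MLPs: "
      f"{multiplies_per_second(USED_MLPS, ASSUMED_CLOCK_HZ):.3e} multiplies/s")
print(f"Peak rate, all MLPs: "
      f"{multiplies_per_second(TOTAL_MLPS, ASSUMED_CLOCK_HZ):.3e} multiplies/s")
```

The design occupies about 78% of the available MLPs. The peak rates scale linearly with whatever clock rate the hard MLP blocks actually run at.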

Figure 3: Machine learning processor

Eight GDDR6 memory controllers are used in the AC7t1500 device, which together provide up to 4 Tbps of bidirectional bandwidth. As mentioned above, powerful computing engines and large-capacity, high-bandwidth storage rely on high-speed, low-latency, and deterministic data transfer to provide the real-time results required by low-latency ASR applications.

This data then enters the Speedster7t's two-dimensional network-on-chip (2D NoC). The 2D NoC is another hard structure in the Speedster7t architecture that clocks at up to 2 GHz and interconnects all I/Os, internal hard blocks, and the FPGA logic array itself. With a total bandwidth of 20 Tbps, the 2D NoC provides the highest throughput and, with the proper implementation, the most deterministic, low-latency data transfer between external GDDR6 memory and the MLP-enabled compute engines.
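The bandwidth figures quoted for the GDDR6 interfaces and the 2D NoC can be put on a common footing with a short unit conversion. The sketch assumes the aggregate GDDR6 bandwidth is split evenly across the eight controllers, which the text does not state explicitly; the constant and function names are illustrative.

```python
def tbps_to_gbytes_per_s(tbps):
    """Convert terabits per second to gigabytes per second (decimal units)."""
    return tbps * 1000 / 8


GDDR6_TOTAL_TBPS = 4.0  # aggregate GDDR6 bandwidth (from the text)
GDDR6_CONTROLLERS = 8   # GDDR6 memory controllers (from the text)
NOC_TOTAL_TBPS = 20.0   # aggregate 2D NoC bandwidth (from the text)

# Assumption: bandwidth is divided evenly across the controllers.
per_controller_tbps = GDDR6_TOTAL_TBPS / GDDR6_CONTROLLERS
noc_headroom = NOC_TOTAL_TBPS / GDDR6_TOTAL_TBPS

print(f"GDDR6 total: {tbps_to_gbytes_per_s(GDDR6_TOTAL_TBPS):.1f} GB/s")
print(f"Per controller: {tbps_to_gbytes_per_s(per_controller_tbps):.1f} GB/s")
print(f"NoC to GDDR6 bandwidth ratio: {noc_headroom:.1f}x")
```

On these figures the 2D NoC has five times the aggregate bandwidth of the external memory, so the on-chip transport need not be the limiting stage between GDDR6 and the MLPs.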
