Three major product divisions covering thousands of industries

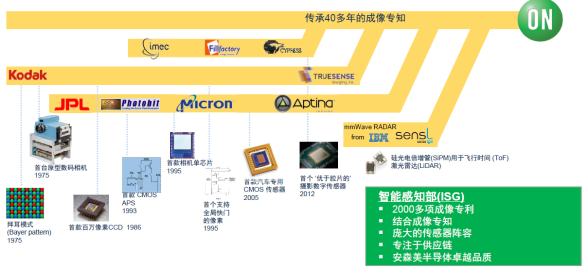

ON Semiconductor has three major product divisions: the Power Solutions Group (PSG), the Advanced Solutions Group (ASG) and the Intelligent Sensing Group (ISG). Of these, ISG is the fastest growing. Although the group has existed within ON Semiconductor for only six years, mergers and acquisitions have allowed its technology to iterate very quickly.

ON Semiconductor ISG: The Inventor of the Modern Image Sensor

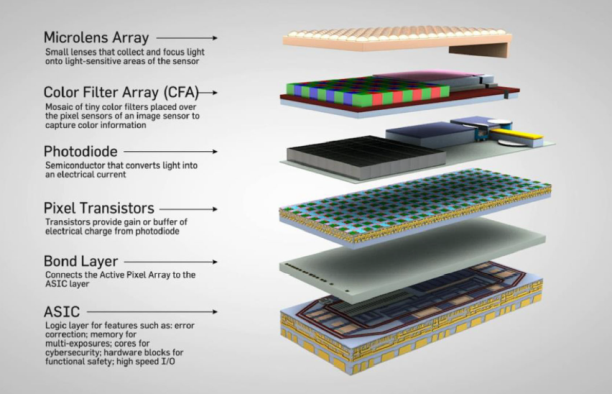

The "Bayer pattern", the RGB (red, green and blue) color filter arrangement used throughout the image sensor field, is named after Bryce Bayer, an engineer at Kodak, whose image sensor business ON Semiconductor later acquired. ON Semiconductor also counts the world's first CMOS image sensor, developed in 1993, and the first global shutter technology as part of its heritage. Today, its global shutter technology is in its eighth product generation, while comparable companies are still at the third or fourth generation.
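As a hedged illustration of the Bayer pattern: it is a repeating 2×2 color filter tile with two green filters and one each of red and blue, so half of all pixels sample green. The sketch below assumes the common RGGB tile ordering. The function names `bayer_color` and `color_counts` are hypothetical, and real sensors may use other orderings such as GRBG.

```python
# Sketch of a Bayer color filter array, assuming the common RGGB tile ordering.
# Function names are hypothetical; real sensors may use GRBG, GBRG or BGGR.

def bayer_color(row: int, col: int, pattern: str = "RGGB") -> str:
    """Return 'R', 'G' or 'B': the filter color over pixel (row, col)."""
    if sorted(pattern) != ["B", "G", "G", "R"]:
        raise ValueError("pattern must be a 2x2 Bayer tile such as 'RGGB'")
    # The 2x2 tile (read row by row) repeats across the whole pixel array,
    # so only the parity of the row and column matters.
    return pattern[(row % 2) * 2 + (col % 2)]


def color_counts(height: int, width: int, pattern: str = "RGGB") -> dict:
    """Count how many pixels sit under each color filter."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[bayer_color(r, c, pattern)] += 1
    return counts


if __name__ == "__main__":
    for r in range(4):
        print(" ".join(bayer_color(r, c) for c in range(4)))
    print(color_counts(4, 4))  # half of the pixels are green
```

Green gets twice the samples because the eye is most sensitive to it. A demosaicing step later interpolates the two missing colors at each pixel.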

ISG focuses on three major areas: automotive, machine vision and edge artificial intelligence.

1. Intelligent driving: As vehicles become more electrified and intelligent, the use of intelligent sensing technology in automobiles is growing rapidly.

2. Machine vision: Industrial machine vision is widely used, and with the rapid progress of artificial intelligence in recent years it has attracted growing attention from the industry. Beyond traditional factory automation and smart factories, machine vision is expanding into smart transportation, new retail, smart buildings and homes, and robots (drones, service robots, industrial robots, delivery robots and collaborative robots). With its comprehensive product lineup, ON Semiconductor has become a leader in industrial machine vision.

3. Edge AI: With the continued development of AI, 5G and the IoT, computing has spread from the cloud to physical devices and the edge. The cloud offers very high computing power, and in a cloud-centric model all four steps (perception, recognition or judgment, decision-making and action) are processed there. The problem is that sending large amounts of data to the cloud is costly and adds significant latency. Edge AI addresses this by processing data close to the sensor.
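A rough sketch of the bandwidth side of this argument compares the raw output of one camera with sending only compact detection results computed at the edge. The resolution, frame rate, bit depth and per-object metadata size are illustrative assumptions, not ON Semiconductor figures. The hypothetical helpers ignore compression and latency.

```python
# Rough comparison: raw camera output vs. edge-computed detection metadata.
# All parameters in the usage lines are illustrative assumptions.

def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed sensor output in megabits per second."""
    return width * height * fps * bits_per_pixel / 1e6


def metadata_bitrate_mbps(objects_per_frame: int, bytes_per_object: int, fps: float) -> float:
    """Bit rate if only detected objects (e.g. class + bounding box) are sent."""
    return objects_per_frame * bytes_per_object * 8 * fps / 1e6


if __name__ == "__main__":
    raw = raw_bitrate_mbps(1920, 1080, 30, 12)  # assumed 2 MP, 30 fps, 12-bit RAW
    meta = metadata_bitrate_mbps(50, 32, 30)    # assumed 50 objects x 32 bytes each
    print(f"raw: {raw:.1f} Mbit/s, metadata: {meta:.3f} Mbit/s, ratio ~{raw / meta:.0f}x")
```

Under these assumptions, a single uncompressed camera stream is about 746 Mbit/s, roughly three orders of magnitude more than the detection metadata. Vehicles typically carry several cameras.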

ON Semiconductor offers a full range of intelligent sensing solutions, spanning ultrasound, imaging, millimeter-wave radar and lidar. Automotive sensing accounts for the largest share.

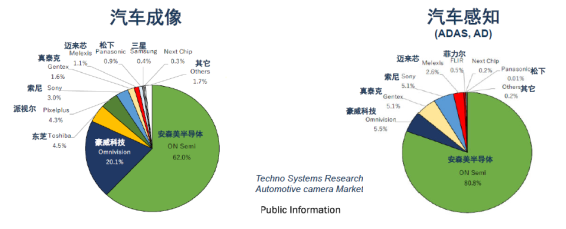

ON Semiconductor dominates the automotive image sensor market

ON Semiconductor image sensors hold more than 80% of the market in ADAS and autonomous driving (AD), and the company reportedly shipped about 100 million sensors in 2019. Last year, ON Semiconductor launched a next-generation RGB-IR image sensor solution for in-cabin applications. It also launched the Hayabusa™ series of CMOS image sensors for advanced driver assistance systems (ADAS) and automotive camera vision systems. The Hayabusa platform reportedly uses a 3.0-micron backside-illuminated (BSI) pixel design with a charge capacity of 100,000 electrons, which the company calls the industry's highest. Its other key automotive features include simultaneous on-chip high dynamic range (HDR), LED flicker mitigation (LFM), real-time functional safety and automotive-grade certification.
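Charge capacity matters because, to a first approximation, a pixel's single-exposure dynamic range is the ratio of its full-well capacity to its noise floor, expressed as 20·log10(full well / read noise). The sketch below applies this simplified formula to the quoted 100,000-electron capacity. The read-noise values are assumptions for illustration, and the function name `dynamic_range_db` is hypothetical.

```python
import math


def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Simplified single-exposure dynamic range: 20*log10(full well / noise floor)."""
    if full_well_e <= 0 or read_noise_e <= 0:
        raise ValueError("full well and read noise must be positive")
    return 20 * math.log10(full_well_e / read_noise_e)


if __name__ == "__main__":
    # 100,000 e- is the charge capacity quoted for Hayabusa; the read-noise
    # values are illustrative assumptions, not published specifications.
    for noise in (1.0, 2.0, 5.0):
        print(f"read noise {noise} e-: {dynamic_range_db(100_000, noise):.1f} dB")
```

Under this model, raising the full-well capacity raises the ceiling of the ratio directly, which is why a large charge capacity is a prerequisite for high dynamic range.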

Yi Jihui, vice president of global marketing and application engineering at ON Semiconductor's Intelligent Sensing Group, said that as cars become more intelligent, sensors are used ever more widely in vehicles, and demand for both the variety and the number of automotive sensors keeps rising. Because of this trend, intelligence, miniaturization, multi-function capability and integration have become the mainstream directions of automotive sensor development. Today, the best automotive perception systems far exceed human perception and never tire.

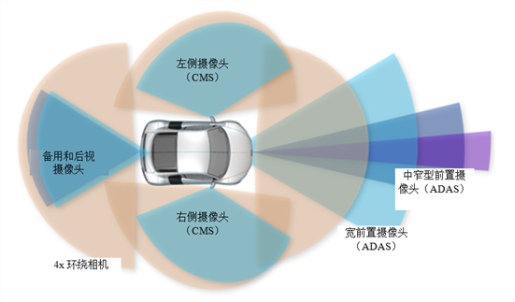

Camera-based active safety systems are spreading rapidly in today's cars. In addition to a backup camera, a car may also have:

- A surround view system using four cameras
- An ADAS front camera system with up to three cameras
- A rearview mirror assist camera
- A surveillance camera
- A driver monitoring camera
- A side view camera

Starting in 2017, many new car models have carried more than a dozen cameras to improve safety.

Safety remains the top priority when driving

Although the world is optimistic about autonomous driving, it remains controversial, and safety is the biggest point of contention. Yi Jihui said that autonomous driving safety can be divided into three main aspects:

1. Shared mobility

Private cars sit idle about 95% of the time, and the service life of a car is about 8,000 operating hours. As autonomous driving matures, private cars may become shared cars that you simply tell where to go, which greatly increases how intensively each vehicle is used. Earlier car designs did not plan for this level of use. ON Semiconductor has been preparing for this shift and designs its products to meet the higher requirement.
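The arithmetic behind this point can be sketched as follows. Being idle 95% of the time means roughly 5% use, or about 438 hours per year, so an 8,000-hour service life lasts roughly 18 years of private use. A shared car in use, say, 50% of the time (an assumed figure) would reach the same total in under two years. The helper names are hypothetical.

```python
# Sketch of vehicle utilization arithmetic; helper names are hypothetical.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def hours_in_use_per_year(utilization: float) -> float:
    """Operating hours per year for a fraction of time in use (0 < u <= 1)."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return HOURS_PER_YEAR * utilization


def years_to_reach(lifetime_hours: float, utilization: float) -> float:
    """Calendar years until a vehicle accumulates its rated operating hours."""
    return lifetime_hours / hours_in_use_per_year(utilization)


if __name__ == "__main__":
    private = years_to_reach(8_000, 0.05)  # idle 95% of the time
    shared = years_to_reach(8_000, 0.50)   # assumed utilization for a shared car
    print(f"private: {private:.1f} years, shared: {shared:.1f} years")
```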

2. Functional safety

In autonomous or driver-assisted vehicles, most or all vehicle control is handled by the system, so the quality of that control must be considered. In traditional vehicles, hazards usually stem from system failures. Autonomous driving systems are different: even if nothing fails, uncertainty in factors such as the output of a black-box neural network can make a function deviate from its intended behavior and cause a traffic accident. Even with no errors or failures at the perception, decision and execution levels, complex traffic conditions and unexpected vehicle behavior can still destabilize an autonomous driving system.

3. Cybersecurity

On the road to fully autonomous driving, cybersecurity is a technical challenge that must be overcome. According to research firm MarketsandMarkets, the global automotive cybersecurity market will grow at a compound annual growth rate (CAGR) of 23.16% from 2018 to 2025, reaching US$5.77 billion by 2025.
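As a quick consistency check on these figures: compound growth satisfies end = start × (1 + CAGR)^years, so the two quoted numbers imply a 2018 starting size. The sketch assumes seven compounding periods (2018 to 2025). The report's actual 2018 figure is not given here, so the result is only the value the quoted numbers imply. The helper names are hypothetical.

```python
# Invert the compound-growth formula end = start * (1 + cagr) ** years.

def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Starting value implied by an end value, a constant CAGR and a period."""
    return end_value / (1 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start_value * (1 + cagr) ** years


if __name__ == "__main__":
    base_2018 = implied_start_value(5.77, 0.2316, 2025 - 2018)  # US$ billions
    print(f"implied 2018 market size: ~US${base_2018:.2f} billion")
```

Under that assumption, the quoted growth rate implies a market of roughly US$1.34 billion in 2018, which would more than quadruple by 2025.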

Self-driving cars use sensors and ICT (information and communication technology) terminals installed in the vehicle to detect and analyze their surroundings and to control steering and braking. As connected cars develop, information from sources such as other vehicles and traffic infrastructure will be sent and received in real time over the network. Self-driving cars are reportedly expected to process about 4 TB of data per day. A hacked self-driving system could become a lethal weapon, with potentially catastrophic consequences.
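To put 4 TB per day in perspective, the sketch below converts a daily data volume into an average data rate. It assumes decimal terabytes (10^12 bytes). The text does not say how many hours of driving that volume is spread over, so `hours` is left as a parameter, and the helper name is hypothetical.

```python
# Convert a data volume into an average rate; decimal units (1 TB = 10**12 bytes).

def average_rate_mbit_s(terabytes: float, hours: float) -> float:
    """Average data rate in Mbit/s for `terabytes` spread evenly over `hours`."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    bits = terabytes * 1e12 * 8
    return bits / (hours * 3600) / 1e6


if __name__ == "__main__":
    # Spread over a full day, versus an assumed 2 hours of actual driving.
    print(f"24 h: {average_rate_mbit_s(4, 24):.0f} Mbit/s")
    print(f" 2 h: {average_rate_mbit_s(4, 2):.0f} Mbit/s")
```

Spread over a full day, this comes to about 370 Mbit/s on average. If the data is concentrated into fewer driving hours, the rate rises proportionally, and so does the volume of traffic that must be protected.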

Challenges of Automotive Imaging

Beyond safety, Yi Jihui also discussed three major challenges for imaging in autonomous driving, which are also the main areas to be overcome in the future:

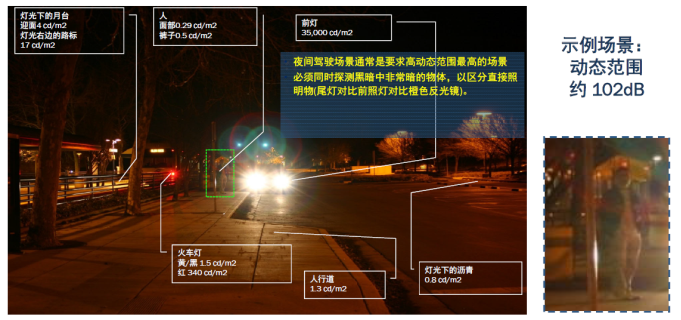

1. Ultra-wide dynamic range

Dynamic range describes the span between the darkest and brightest parts of a scene that an imager can capture. Real scenes contain a wide range of brightness, and if an imager's dynamic range cannot cover the scene, information is lost. A sensor's dynamic range is its ability to capture both the dark and the bright parts of an image with high fidelity. The higher the dynamic range, the more faithfully the captured image reproduces the scene. If the dynamic range is insufficient, the scene cannot be captured clearly across transitions from dark to bright or from bright to dark. A simple example is using a mobile phone indoors to photograph the view through a window. If the outdoor scenery is clear, the indoor area comes out pitch black. If the indoor area is clear, the view outside the window is washed out to white. This happens because the phone's dynamic range is not wide enough.

Yi Jihui emphasized that wide dynamic range is crucial for autonomous driving, because insufficient dynamic range produces a very different image. A typical case is the tunnel test for wide dynamic range. With poor dynamic range, the view outside the tunnel is washed out and even the details on the tunnel wall are blurred. A human driver may still make out the scene, but a machine vision system cannot. With higher dynamic range, most of the details remain clearly visible.
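Here is a deliberately simplified model of this information loss. Signal is scene luminance times exposure, normalized so that 1.0 is saturation. Anything above that clips to white, and anything below the noise floor (set by the dynamic range in dB) disappears into black. The tunnel-like luminance values and the 60 dB and 120 dB sensors are hypothetical. Real sensors add noise, nonlinear response and tone mapping.

```python
# Simplified clipping model: which parts of a high-contrast scene survive capture?

def capture(scene: list[float], exposure: float, dr_db: float) -> list[str]:
    """Classify each scene luminance as 'black', 'ok' or 'white'.

    Signal = luminance * exposure, normalized so 1.0 is saturation. Signals at
    or above 1.0 clip to white; signals below the noise floor are lost in black.
    """
    floor = 10 ** (-dr_db / 20)  # smallest resolvable signal relative to saturation
    result = []
    for lum in scene:
        signal = lum * exposure
        if signal >= 1.0:
            result.append("white")
        elif signal < floor:
            result.append("black")
        else:
            result.append("ok")
    return result


if __name__ == "__main__":
    # Hypothetical relative luminances: shadowed tunnel wall, road inside the
    # tunnel, tunnel exit, sunlit sky (arbitrary units, not measured values).
    scene = [1.0, 10.0, 1e4, 1e5]
    expose_for_bright = 0.9 / max(scene)  # keep the brightest area below saturation
    print("60 dB: ", capture(scene, expose_for_bright, 60))
    print("120 dB:", capture(scene, expose_for_bright, 120))
```

With the exposure set for the bright exit, the 60 dB sensor loses the tunnel interior to black. The 120 dB sensor keeps all four regions. Exposing the 60 dB sensor for the dark interior instead washes out the exit, as in the tunnel test described above.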

2. Extreme conditions with a wide temperature range (-40 °C to +105 °C)

Automotive components must be built to operate normally under any environmental conditions.

3. LED traffic lights and signs flicker, making them hard to capture

Because LEDs are energy-efficient and bright, LED lights have been installed at all major traffic intersections, but they are difficult for image sensors to capture. LEDs flicker at frequencies that differ from light to light. If the sensor's exposure is not synchronized with the LED's on-phase, the image sensor can miss the light entirely.
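The flicker problem can be sketched with a simple model. It assumes the LED is driven by pulse-width modulation (a periodic on/off pulse) and that exposures start at random times relative to it. An exposure sees the light only if it overlaps an "on" pulse, which gives a closed-form capture probability of min(1, (on_time + exposure) / period). The 90 Hz frequency and 10% duty cycle below are assumptions, not measured traffic-light values, and the function names are hypothetical.

```python
# Simplified LED flicker model. Assumption: the LED is PWM-driven, lit during
# [k * period, k * period + on_time) for every integer k.

def overlaps_on_phase(start: float, exposure: float, period: float, on_time: float) -> bool:
    """True if the exposure window [start, start + exposure) overlaps an 'on' pulse."""
    phase = start % period  # where the window opens within one PWM period
    # Either the LED is already on when the window opens, or the window
    # stays open long enough to reach the start of the next pulse.
    return phase < on_time or phase + exposure > period


def capture_probability(exposure: float, period: float, on_time: float) -> float:
    """Chance that an exposure starting at a random time sees the LED lit."""
    return min(1.0, (on_time + exposure) / period)


def simulated_probability(exposure: float, period: float, on_time: float,
                          samples: int = 100_000) -> float:
    """Brute-force check: evenly spaced start times across one period."""
    hits = sum(
        overlaps_on_phase(i * period / samples, exposure, period, on_time)
        for i in range(samples)
    )
    return hits / samples


if __name__ == "__main__":
    period = 1 / 90          # assumed 90 Hz PWM
    on_time = 0.1 * period   # assumed 10% duty cycle
    for exposure in (0.1e-3, 1e-3, 10e-3):  # 0.1 ms, 1 ms, 10 ms
        print(f"{exposure * 1e3:4.1f} ms: "
              f"{capture_probability(exposure, period, on_time):.3f} "
              f"(simulated {simulated_probability(exposure, period, on_time):.3f})")
```

Under these assumptions, a 0.1 ms daylight exposure catches the light only about 11% of the time. An exposure of at least the period minus the on-time (10 ms here) always catches it but risks saturating in bright scenes. This conflict is one reason LFM and HDR are often discussed together.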

To meet these challenges, image sensor technology keeps improving. Yi Jihui noted that image sensor technology is more complex than CPU and GPU technology, because efficiently converting photons into electrons is an extremely complex semiconductor process. In machine vision design, the limits of both speed and resolution must be pushed quickly, enabling higher frame rates without compromising image quality. To deliver that resolution, image sensors also need an appropriate optical format to provide the imaging detail and performance that modern machine vision and inspection use cases require.

Hayabusa Super-Exposure High Dynamic Range Pixel

To address extreme temperatures, high dynamic range, LED flicker, functional safety and the NCAP evaluation standards, ON Semiconductor's Hayabusa image sensor platform represents a breakthrough for automotive image perception systems. The platform has been expanded to offer a range of resolutions and imaging capabilities, including configurations with embedded image signal processing. The 3.1-megapixel (MP) configuration, with its unique 8:3 aspect ratio, suits wide-field-of-view applications such as ADAS front vision that must meet the European New Car Assessment Programme (NCAP) 2020 standards. The 1.3 MP configuration combines the sensor and an image signal processor in a single compact automotive-grade package. This makes it ideal for applications with limited camera space, such as megapixel reversing cameras, surround view systems and automatic parking systems.
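The aspect-ratio trade-off can be illustrated with a little arithmetic: for a fixed pixel count, a wider aspect ratio puts more pixels across the horizontal field of view. The sketch below estimates the array size for 3.1 MP at 8:3 versus 16:9. The exact Hayabusa array dimensions are not given in this article, so the outputs are approximations, not product specifications. The helper name `dims_for` is hypothetical.

```python
import math


def dims_for(megapixels: float, aspect_w: int, aspect_h: int) -> tuple:
    """Approximate (width, height) in pixels for a pixel count and aspect ratio."""
    pixels = megapixels * 1e6
    # Solve width * height = pixels with width / height = aspect_w / aspect_h.
    height = math.sqrt(pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    return round(width), round(height)


if __name__ == "__main__":
    print("8:3  ->", dims_for(3.1, 8, 3))
    print("16:9 ->", dims_for(3.1, 16, 9))
```

At 3.1 MP, the 8:3 layout yields about 2875 × 1078 pixels versus about 2348 × 1321 at 16:9, roughly 20% more horizontal pixels. For example, 2880 × 1080 is one exact 8:3 grid of about 3.1 MP (a hypothetical example, not a confirmed product resolution).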

This high-charge-capacity pixel design gives every image sensor in the Hayabusa series a super-exposure capability. It delivers high-fidelity images with 120-decibel (dB) HDR and LFM without sacrificing low-light sensitivity, even in the most challenging scenes. The platform's simultaneous HDR and LFM are particularly important for safety, because they help ensure that objects and potential hazards can be identified in both extremely dark and extremely bright scenes.

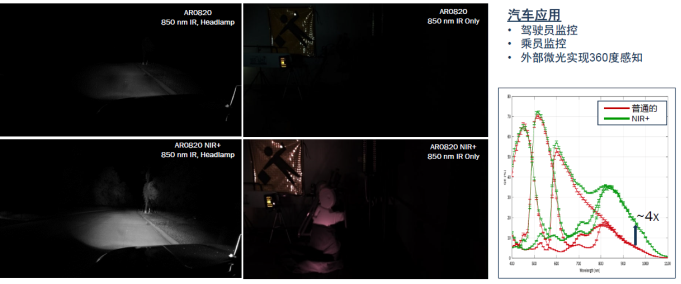

NIR+ Pixel Technology

Beyond wide dynamic range, cars place very high demands on near-infrared (NIR) performance for night driving. ON Semiconductor's NIR+ pixel technology increases near-infrared sensitivity to about four times that of the original NIR pixel, ensuring comprehensive signal capture, improving signal clarity and saving power. NIR+ raises the sensor's quantum efficiency (QE) in the near-infrared region without sacrificing color fidelity in the visible spectrum, letting machines see more information at night.
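To see what a roughly 4× gain in quantum efficiency means in practice, the sketch below models one pixel's signal-to-noise ratio as signal / sqrt(signal + read_noise²). It counts only photon shot noise and read noise and ignores dark current. The photon counts, QE values and read noise are illustrative assumptions, not NIR+ specifications, and the function name `snr` is hypothetical.

```python
import math


def snr(photons: float, qe: float, read_noise_e: float) -> float:
    """Pixel SNR: signal electrons over shot noise plus read noise (dark current ignored)."""
    signal = photons * qe  # photoelectrons collected
    return signal / math.sqrt(signal + read_noise_e ** 2)


if __name__ == "__main__":
    read_noise = 2.0  # assumed read noise, electrons rms
    for photons in (1000, 20):  # assumed NIR photons per pixel: moderate vs very dim
        base = snr(photons, 0.10, read_noise)  # assumed baseline NIR QE
        plus = snr(photons, 0.40, read_noise)  # about 4x the QE
        print(f"{photons} photons: SNR {base:.2f} -> {plus:.2f} ({plus / base:.2f}x)")
```

With these numbers, 4× the QE roughly doubles SNR when shot noise dominates. The gain is larger (about 2.8× here) in very dim light, where read noise dominates.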

ON Semiconductor's Complete Automotive Perception + Vision Solutions

ON Semiconductor offers products from entry-level to high-end in vision and perception, ADAS and autonomous driving, global shutter and in-cabin sensing, edge + SPU, radar and lidar, and other fields.

Automotive intelligence is developing mainly in three directions. The first is human viewing outside the car, including surround view and rear view. The second is machine vision outside the car, including ADAS and autonomous driving. The third is in-cabin monitoring of both the driver and the passengers. ON Semiconductor's imaging technology will continue to advance in these areas to bring better products to customers.

The smart cockpit also faces a major challenge in camera size. Yi Jihui said that current cameras are too large (18×18 cm³), with the smallest at 3×3 cm³. ON Semiconductor and its partners have jointly developed smaller cameras that now reach 0.5×0.5 cm³.
