Manufacturing engineers face increasing complexity with the latest 802.11ac standard, which has driven them to rethink their test strategies to meet the new requirements. First and foremost, testing devices in the 5 GHz band with wider bandwidths and higher-order modulation means purchasing new equipment for the factory. The problem is further complicated by the fact that devices built on today's latest technology must also be tested for backward compatibility with the legacy standards (802.11a/b/g/n). With this trend in mind, engineers are developing test strategies that add test items selectively, choosing those that help achieve a specific test coverage in their test plans. This article takes a deep dive into a smart test approach for 802.11ac devices and uses it to answer the following question:

"If I were asked to test an 802.11ac device to ensure good product quality, how many items would really need to be tested during production?"

The straightforward "low, medium, high" approach

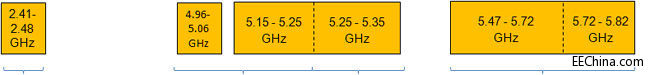

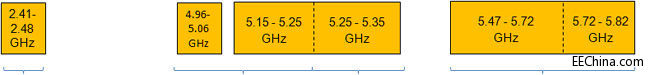

In the past, we usually used the "low, medium, high" approach to verify 2.4 GHz devices, that is, testing the lowest, middle, and highest frequencies (channels) within the supported frequency range. This straightforward approach appears to carry over to the 5 GHz band, but the picture changes once you consider test coverage. Referring to Figure 1, we first notice that the 5 GHz band covers much more spectrum than the 2.4 GHz ISM band, which is less than 100 MHz wide. Second, chips that support 5 GHz operation usually treat the band as separate sub-bands, each with its own calibration data. This division into sub-bands arises because different frequency ranges have different maximum transmit powers, and because it is difficult to produce a single set of calibration parameters that covers the entire 5 GHz band. Clearly, the "low, medium, high" approach does not provide sufficient test coverage for these sub-bands.

Figure 1: Overview of the 802.11 spectrum in the 2.4/5 GHz bands (including 802.11ac)
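To make the sub-band problem concrete, the short Python sketch below picks low, mid, and high channels across the whole 5 GHz band and reports which sub-bands receive no test point at all. It assumes, purely for illustration, that the chip's calibration sub-bands coincide with the US U-NII 20 MHz channel groups; a real chip's sub-bands come from the vendor. The names `SUB_BANDS`, `low_mid_high`, and `uncovered_sub_bands` are hypothetical. The channel-to-frequency relation (center = 5000 + 5 × channel MHz) is the standard 5 GHz channelization.

```python
# Hypothetical calibration sub-bands, borrowed from the US U-NII channel groups
# (20 MHz channel numbers). A real chip's sub-bands come from its vendor data.
SUB_BANDS = {
    "U-NII-1": [36, 40, 44, 48],
    "U-NII-2A": [52, 56, 60, 64],
    "U-NII-2C": list(range(100, 145, 4)),  # 100, 104, ..., 144
    "U-NII-3": [149, 153, 157, 161, 165],
}


def center_mhz(channel: int) -> int:
    """Center frequency of a 5 GHz channel: 5000 + 5 * channel (MHz)."""
    return 5000 + 5 * channel


def low_mid_high(channels: list[int]) -> list[int]:
    """Lowest, middle, and highest channel of a channel list."""
    ordered = sorted(channels)
    return [ordered[0], ordered[len(ordered) // 2], ordered[-1]]


def uncovered_sub_bands(picks: list[int]) -> list[str]:
    """Sub-bands that contain none of the picked channels."""
    return [name for name, chans in SUB_BANDS.items() if not set(chans) & set(picks)]


all_channels = [ch for chans in SUB_BANDS.values() for ch in chans]
picks = low_mid_high(all_channels)
print("band-wide picks:", picks, [center_mhz(ch) for ch in picks])
print("sub-bands with no test point:", uncovered_sub_bands(picks))
```

Under these assumptions the band-wide picks land on channels 36, 116, and 165 (5180, 5580, and 5825 MHz): one sub-band gets no test point at all, and each of the others gets only one.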

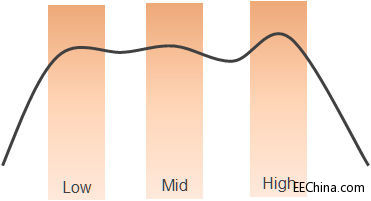

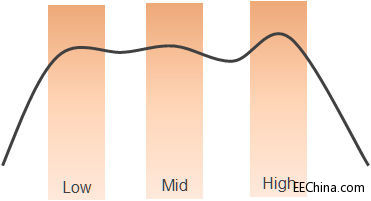

If you set the 5 GHz requirements aside for the moment, it makes sense to test the low, mid, and high frequencies in the 2.4 GHz band. Testing at the two extreme points and the center point verifies that the DUT behaves consistently across all channels; in fact, with some simple post-processing, the same data also verifies flatness across the entire frequency range. Figure 2 shows how a defect can be missed when only two test points are used, for example an unexpected filter mismatch or roll-off near the band edge, where such problems often occur. This defect scenario is the underlying reason why we recommend three test points in the 2.4 GHz band, and the same reasoning is the basis for our approach to test coverage in the 5 GHz band.

Figure 2: With only two test points, an unexpected filter response can cause a defect to be missed.
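The "simple post-processing" mentioned above can be as little as a peak-to-peak flatness check plus a comparison of the mid reading against a straight line drawn through the two edge readings. The sketch below is illustrative only: the power readings, the 1 dB limit, and the function names are hypothetical, and the frequencies are 2.4 GHz channels 1, 6, and 11.

```python
def flatness_db(powers_dbm):
    """Peak-to-peak power variation across the measured channels (dB)."""
    return max(powers_dbm) - min(powers_dbm)


def mid_deviation_db(freqs_mhz, powers_dbm):
    """Offset of the middle reading from a straight line through the edge readings."""
    (f_lo, f_mid, f_hi), (p_lo, p_mid, p_hi) = freqs_mhz, powers_dbm
    expected = p_lo + (p_hi - p_lo) * (f_mid - f_lo) / (f_hi - f_lo)
    return p_mid - expected


freqs = (2412, 2437, 2462)   # 2.4 GHz channels 1, 6, and 11
powers = (17.0, 14.8, 17.2)  # hypothetical readings with a mid-band dip
LIMIT_DB = 1.0               # hypothetical flatness limit

print("edges only pass:", flatness_db([powers[0], powers[2]]) <= LIMIT_DB)
print("low/mid/high pass:", flatness_db(powers) <= LIMIT_DB)
print(f"mid-point deviation: {mid_deviation_db(freqs, powers):.1f} dB")
```

With only the two edge readings the hypothetical DUT looks flat; the third point exposes the dip.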

Workaround: Chip calibration

A valuable insight for test coverage is that chip vendors typically provide a fixed calibration table for the transmitter and receiver, which in most cases tells the device how to operate within the specified power limits. The table typically contains the typical gain over frequency and power information for the transmitter and receiver. Even when such tables exist, it is best practice for manufacturing to treat this basic calibration operation as part of test coverage. The most common calibration process consists of two steps that are traceable to power measurements:

(1) calibrate the transmit power of the DUT;

(2) transfer the calibration information to the receiver.

In this frequency-by-frequency measurement approach, the power calibration process verifies the performance parameters and adds the final data to the calibration table.
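A frequency-by-frequency transmit power calibration can be sketched as a loop: measure at a starting gain setting, correct the gain code toward the target, re-measure to verify, and only then store the code in the table. Everything here is an assumption for illustration: `measure_power_dbm` is a simulated stand-in for a power-meter reading, and the 0.5 dB gain step, the starting code, and the DUT model are hypothetical.

```python
GAIN_STEP_DB = 0.5  # hypothetical size of one transmit gain-code step


def measure_power_dbm(freq_mhz: int, gain_code: int) -> float:
    """Stand-in for a power-meter reading. Hypothetical DUT model: output drops
    1 dB per 100 MHz above 5180 MHz. A real station would query the instrument."""
    return 10.0 + GAIN_STEP_DB * gain_code - (freq_mhz - 5180) / 100.0


def calibrate_tx_power(freqs_mhz, target_dbm, start_code=12, tol_db=0.5):
    """Frequency by frequency: measure, correct the gain code, re-measure to
    verify, then store the verified code in the calibration table."""
    table = {}
    for f in freqs_mhz:
        error_db = target_dbm - measure_power_dbm(f, start_code)
        code = start_code + round(error_db / GAIN_STEP_DB)
        verified_dbm = measure_power_dbm(f, code)
        if abs(verified_dbm - target_dbm) > tol_db:
            raise RuntimeError(f"{f} MHz: {verified_dbm:.2f} dBm misses the target")
        table[f] = code
    return table


print(calibrate_tx_power([5180, 5320, 5500, 5745], target_dbm=16.0))
```

The verification step is what turns the calibration into a test item: a frequency whose corrected power still misses the target fails instead of silently entering the table.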

Does 5 GHz mean a different test process?

Test coverage for the 5 GHz band follows the same strategy as for 2.4 GHz. Calibration matters more at 5 GHz because of the higher operating frequency, and especially because the 5 GHz band (with its sub-band decomposition) spans much more spectrum than 2.4 GHz. Another consideration is that the 5 GHz band select filters are not aligned to each sub-band; they cover the frequencies of all sub-bands together.

In addition, calibration is more complex at 5 GHz. The calibration range is divided into multiple sub-bands because a single calibration covering the entire 5 GHz band is difficult to implement, especially since different sub-bands usually have different target transmit powers. Testing a single frequency point within a sub-band (especially the same frequency used for calibration) provides little additional information. For example, this simple approach will not detect filter roll-off at the band edges or power rising or falling across a sub-band.

These operational and calibration characteristics are the main differences that the new test coverage must deal with.

5GHz Validation

Validation is the process of confirming that a device operates properly on the frequencies it supports. Applying the 2.4 GHz test strategy to the 5 GHz band means performing low, mid, and high frequency validation for each 5 GHz sub-band. As with calibration, validation starts with the transmit function and then moves on to the receive function. Depending on production priorities, one might instead test only the low, mid, and high frequencies of the entire 5 GHz band, or a single frequency point within each sub-band. The latter reduces test time, but it detects only failures in overall performance and misses many manufacturing defects.

A closer look at how the "low, mid, high" approach covers the sub-bands reveals opportunities to improve the test plan. At first glance, collecting data at low, mid, and high points in every sub-band seems excessive. In the 2.4 GHz band the mid point is there to detect filter mismatch, but that mid-point defect mechanism does not exist within a 5 GHz sub-band. Likewise, the low and high points in such a plan are not necessarily close to the sub-band edges, which are of more interest for verification. With this in mind, test coverage can be improved by measuring the two extreme points of each sub-band, especially if the sub-bands are calibrated with the curve-centering method (which typically uses the mid point for centering).
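Measuring the two extreme points of each sub-band is easy to express in code. In this sketch the three sub-bands, their channel lists, the power readings, and the 0.75 dB tolerance are all hypothetical. It shows that three sub-bands yield six transmit test points, and that a sub-band calibrated at its center can pass a center-only check yet fail at its edges when its output tilts.

```python
# Hypothetical chip calibration sub-bands (20 MHz channel numbers).
SUB_BANDS = {
    "sub-band 1": [36, 40, 44, 48],
    "sub-band 2": [52, 56, 60, 64],
    "sub-band 3": [149, 153, 157, 161, 165],
}


def edge_channels(sub_bands):
    """Two transmit test points per sub-band: its lowest and highest channel."""
    points = []
    for channels in sub_bands.values():
        points += [min(channels), max(channels)]
    return points


def edges_ok(p_low_dbm, p_high_dbm, target_dbm, tol_db):
    """Both sub-band edges must be within tolerance of the calibrated target power."""
    return all(abs(p - target_dbm) <= tol_db for p in (p_low_dbm, p_high_dbm))


print(edge_channels(SUB_BANDS))  # six test points for three sub-bands
# A sub-band calibrated at its center (which reads exactly 16.0 dBm) but whose
# output tilts by almost 2 dB from one edge to the other (hypothetical readings):
print(edges_ok(16.9, 15.0, target_dbm=16.0, tol_db=0.75))
```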

As this first look at test coverage shows, the characteristics of the DUT and its defect mechanisms together drive the selection of test items. By tailoring test coverage to these factors, manufacturing engineers can select exactly the test items needed to ensure product quality. In general, we recommend treating the transmit and receive functions as logical categories of test coverage; likewise, modulation and throughput form further logical categories. The set of test items that fully verifies the device's operational performance while screening for product defects then becomes the optimal test coverage. This process serves as the organizing basis for the rest of this article.

When testing moves on to receiver characterization, the tests can additionally target filter ripple and other frequency-dependent metrics. Here we should include the edge frequencies of the entire band and several other frequencies distributed across the band. We recommend at least one test frequency per sub-band, but there is no point in reusing a frequency already used in the transmit tests. The general principle is to avoid testing receiver performance at two nearly identical frequencies; for example, the edges of neighboring sub-bands usually lie right next to each other. A judicious selection of frequency points therefore uncovers product defects in less test time.
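The selection rules above (at least one receive frequency per sub-band, no reuse of transmit frequencies, no two receive points at nearly the same frequency) can be sketched as a greedy pick. This is one possible heuristic, not the article's procedure: the sub-bands, the transmit channel set, the preference for sub-band centers, and the 40 MHz minimum spacing are all hypothetical choices.

```python
# Hypothetical sub-bands as a chip might use them (20 MHz channel numbers).
SUB_BANDS = {
    "sub-band 1": [36, 40, 44, 48],
    "sub-band 2": [52, 56, 60, 64],
    "sub-band 3": [149, 153, 157, 161, 165],
}
TX_CHANNELS = {36, 48, 52, 64, 149, 165}  # sub-band extremes used for transmit tests


def channel_mhz(channel):
    return 5000 + 5 * channel


def pick_rx_channels(sub_bands, tx_channels, min_gap_mhz=40):
    """Greedy choice of one receive channel per sub-band.

    Prefers channels near the sub-band center, skips channels already used for
    transmit tests, and skips any channel closer than min_gap_mhz to a receive
    channel chosen earlier, so no two receive tests sit at nearly the same frequency.
    """
    chosen = []
    for channels in sub_bands.values():
        ordered = sorted(channels)
        center = ordered[len(ordered) // 2]
        for ch in sorted(ordered, key=lambda c: abs(c - center)):
            too_close = any(abs(channel_mhz(ch) - channel_mhz(c)) < min_gap_mhz
                            for c in chosen)
            if ch not in tx_channels and not too_close:
                chosen.append(ch)
                break
    return chosen


print(pick_rx_channels(SUB_BANDS, TX_CHANNELS))
```

Preferring the sub-band centers keeps receive points away from the transmit extremes, and the spacing rule guards against the adjacent sub-band edges mentioned above.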

Where to test?

In summary, the 2.4 GHz band can reuse its existing test coverage. The 5 GHz band requires transmit verification on the extreme channels supported by each chip sub-band, which can be regarded as a new test item for 5 GHz. For example, three sub-bands require at least six frequencies (the extreme channels of each sub-band) for transmitter testing, to ensure flat power calibration within each sub-band and good performance at the band edges. Similarly, the receiver benefits from measurements at the highest and lowest frequencies of the band (the band edges) as well as several other frequencies distributed across the band. As a practical compromise, a minimum of one frequency per sub-band, for three to five frequencies in total, is sufficient in most cases. These test items form a solid frequency foundation for covering the transmitter and receiver functions.

All of the above assumes that the chip has been carefully characterized, so that the ideal test frequencies can be chosen from the characterization data.

What to test?

The next question is what to verify at each selected frequency, given the large number of possible test conditions. Test coverage must build on the existing 2.4 GHz test plan and expand it to meet the requirements of 802.11ac. The existing test base may include tests that verify power, EVM, and spectral mask at the maximum data rate at the low, mid, and high frequencies. Beyond DSSS-modulated signals, our first task is to add OFDM-modulated signals to the test coverage. Manufacturing engineers typically test OFDM signals at the same frequencies with similar test parameters. Note that DSSS and OFDM often use different calibration parameters (one offset from the other by a fixed amount), so the calibration must be verified for both. Fortunately, DSSS is not used in the 5 GHz band, so this check is not needed there. On the other hand, 5 GHz supports many more transmit bandwidths and modulation schemes, especially since the introduction of the 802.11ac standard.
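Because DSSS and OFDM calibrations are often offset from each other by a fixed amount, a correct OFDM result says nothing about DSSS, and each mode needs its own check at 2.4 GHz. The sketch below assumes, for illustration, that the DSSS target equals the OFDM target plus a fixed offset; the function names, readings, targets, offset, and 1 dB tolerance are hypothetical.

```python
def required_modes(band_ghz):
    """802.11b DSSS/CCK exists only at 2.4 GHz; OFDM is used in both bands."""
    return ["DSSS", "OFDM"] if band_ghz < 3.0 else ["OFDM"]


def verify_tx_power(band_ghz, measured_dbm, ofdm_target_dbm, dsss_offset_db, tol_db=1.0):
    """Check each required mode against its own target.

    Assumes, for illustration, that the DSSS target is the OFDM target plus a
    fixed offset, as when the two modes share a calibration offset by a constant.
    """
    targets = {"OFDM": ofdm_target_dbm, "DSSS": ofdm_target_dbm + dsss_offset_db}
    return {mode: abs(measured_dbm[mode] - targets[mode]) <= tol_db
            for mode in required_modes(band_ghz)}


# Hypothetical readings: OFDM is on target, but the DSSS offset is wrong.
print(verify_tx_power(2.4, {"OFDM": 15.3, "DSSS": 16.1}, ofdm_target_dbm=15.0, dsss_offset_db=3.0))
# At 5 GHz only OFDM is checked.
print(verify_tx_power(5.5, {"OFDM": 14.6}, ofdm_target_dbm=15.0, dsss_offset_db=3.0))
```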

Optimal test coverage must take the device under test into account

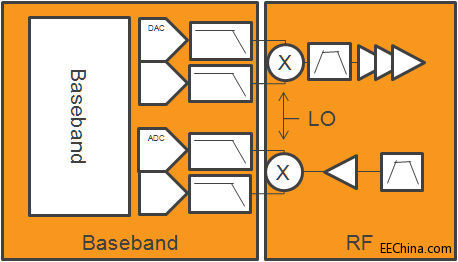

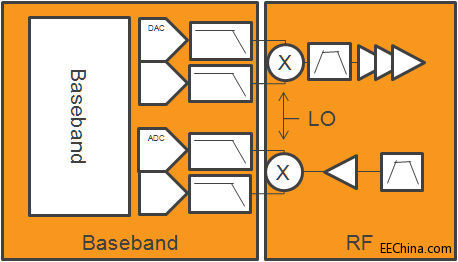

Figure 3: Simplified schematic of a typical transceiver

As shown in Figure 3, a typical transceiver consists of a transmitter and a receiver, each with a baseband section and an RF section. From a test perspective, this structure is important when deciding on test coverage.

Transmitter Test Considerations

In the transmitter, the modulated signal is generated at baseband as IQ signals. After passing through the necessary anti-aliasing filters, the signal is up-converted and conditioned (e.g., gain control and band select filtering), and the antenna then transmits the RF signal. The transmit chain may be a single chip, but more commonly it consists of a transceiver chip and a front-end module (FEM). The following insights about the transmitter are valuable for test coverage considerations:

* At baseband, the modulated signal is independent of the transmit frequency.

* At baseband, the number of subcarriers increases in direct proportion to the occupied bandwidth, and the anti-aliasing filters are adjusted accordingly.

* At baseband and RF, the signal bandwidth scales with the bandwidth of the baseband signal.

* At RF, quadrature (IQ) impairments can be compensated for by correction factors (usually in time vs. frequency format).

* At RF, the modulated signal can be simplified to RF power over a given bandwidth.

* At RF, IQ mismatch and phase noise add to the signal in the same way, independent of frequency.

Transmitter test coverage should verify the operation of the DUT and find defects; that is the primary goal of testing. Measuring every operating condition would make test time far too long, so the challenge is to remove overlapping conditions and bring test time down to a manageable level. Removing overlapping conditions relies on the transmitter insights above, which also help optimize test coverage.

A smart test strategy uses different modulation schemes at different frequencies to bring out the DUT's contribution, in stark contrast to the brute-force approach of repeating the same data rate at every frequency. In addition, as long as the target transmit power stays the same, a smart approach tests the EVM of each modulation against the most stringent EVM requirement that applies at that power; for example, a 6 Mbps OFDM signal transmitted at the same power as 54 Mbps or 802.11n MCS7 should be tested against the 54 Mbps or MCS7 limit.
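Testing a low rate against the strictest limit that shares its target power can be sketched as a lookup plus a minimum. The EVM (relative constellation error) limits below are the values commonly quoted from the IEEE 802.11 OFDM transmitter requirements and should be checked against the edition you test to; the mode labels, function names, and the measured value are hypothetical.

```python
# Transmit EVM (relative constellation error) limits in dB for a few rates, as
# listed in the IEEE 802.11 OFDM transmitter requirements; confirm the values
# against the edition of the standard you test to.
EVM_LIMIT_DB = {
    "11a 6M": -5.0,      # BPSK 1/2
    "11a 24M": -16.0,    # 16-QAM 1/2
    "11a 54M": -25.0,    # 64-QAM 3/4
    "11n MCS7": -28.0,   # 64-QAM 5/6
    "11ac MCS9": -32.0,  # 256-QAM 5/6
}


def strictest_limit_db(modes):
    """Most stringent (most negative) EVM limit among modes sent at one target power."""
    return min(EVM_LIMIT_DB[m] for m in modes)


def evm_pass(measured_db, modes):
    return measured_db <= strictest_limit_db(modes)


measured_6m = -26.3  # hypothetical EVM of a 6 Mbps OFDM packet
print(evm_pass(measured_6m, ["11a 6M"]))              # judged against its own -5 dB limit
print(evm_pass(measured_6m, ["11a 6M", "11a 54M"]))   # judged against -25 dB
print(evm_pass(measured_6m, ["11a 6M", "11n MCS7"]))  # judged against -28 dB
```

Against its own limit the 6 Mbps packet passes trivially; against the MCS7 limit the same measurement becomes a meaningful screen of the transmitter.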

Payload length offers further optimization. Adjusting the payload length of packets at lower data rates so that all packets have the same duration (rather than the same payload) is a clever way to measure settling time. It also keeps the thermal conditions the same, which can help identify other types of defects.
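The equal-duration trick reduces to simple arithmetic: at R Mbps, a data field lasting T microseconds carries R × T bits. The sketch below uses that relation with a hypothetical 500 µs duration; it deliberately ignores preamble, PHY/MAC headers, and OFDM symbol padding, so real payloads would need slight trimming to reach the same airtime.

```python
def payload_for_duration(rate_mbps, duration_us):
    """Payload bytes that keep the data portion of a packet about duration_us long.

    Simplification: ignores preamble, PHY/MAC headers, and OFDM symbol padding,
    so real payloads must be trimmed slightly to hit the same airtime.
    """
    bits = rate_mbps * duration_us  # 1 Mbit/s sustained for 1 us carries 1 bit
    return int(bits // 8)


DURATION_US = 500  # hypothetical common packet duration
for rate in (1, 6, 24, 54):
    print(f"{rate:>2} Mbps -> {payload_for_duration(rate, DURATION_US)} bytes")
```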

Another smart technique is to measure the transmit power at a given frequency using signals of different bandwidths set to the same power. This exercises the anti-aliasing filter, whose configuration must change with each bandwidth. The assumption here is that the transmitter output characteristics are constant regardless of the occupied bandwidth: the narrowband case is simply a subset of the widest-band case. Of course, signals of different bandwidths cannot all be centered on exactly the same frequency, but each signal should cover the frequency of interest (for example, a 40 MHz signal is offset by 10 MHz from a 20 MHz signal on the same channel).
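The 10 MHz offset in the example follows from how wider channels are built from aligned groups of 20 MHz channels. The sketch below computes the center channel of the 20/40/80 MHz channel that contains a given 20 MHz channel. It assumes 5 GHz channel numbering (5 MHz per channel number, 20 MHz channels four numbers apart) and alignment starting at channel 36, which suits channels 36 to 64; the function name and the `base` parameter are hypothetical.

```python
def wide_channel_center(primary, bw_mhz, base=36):
    """Center channel number of the 20/40/80 MHz channel containing `primary`.

    Assumes 5 GHz channel numbers (one number per 5 MHz, 20 MHz channels four
    numbers apart) and wide channels aligned to `base`; 36 suits channels 36-64,
    while other sub-bands start their alignment elsewhere.
    """
    n = bw_mhz // 20                     # 20 MHz channels inside the wide channel
    lowest = base + (primary - base) // (4 * n) * (4 * n)
    return lowest + 2 * (n - 1)          # midpoint between lowest and highest 20 MHz channel


for bw in (20, 40, 80):
    center = wide_channel_center(36, bw)
    offset_mhz = 5 * (center - 36)
    print(f"{bw} MHz signal: center channel {center}, {offset_mhz} MHz above channel 36")
```

For channel 36 this gives center channels 36, 38, and 42, that is, offsets of 0, 10, and 30 MHz, so every bandwidth still covers the 20 MHz channel of interest.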

Spectral mask measurements become more difficult as the bandwidth of the modulated signal increases. The total transmit power is spread across more subcarriers (a wider bandwidth), which lowers the power and signal-to-noise ratio of each subcarrier and moves the whole mask closer to the noise floor. Test coverage should therefore place more of the critical mask measurements at the highest bandwidth.
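The effect can be quantified with a few logarithms. For a fixed total power, the average power spectral density falls by 10·log10 of the bandwidth ratio (about 6 dB from 20 MHz to 80 MHz), and the absolute level of the mask's outer segment falls with it. The subcarrier counts are the 802.11ac (VHT) data-plus-pilot counts; the 16 dBm total power is hypothetical, the -40 dBr default for `floor_dbr` is the outermost level of the 802.11ac mask, and approximating the dBr reference by the average in-band PSD is a simplification.

```python
import math

# Used (data + pilot) subcarriers per 802.11ac (VHT) bandwidth.
USED_SUBCARRIERS = {20: 56, 40: 114, 80: 242, 160: 484}


def psd_dbm_per_mhz(total_dbm, bw_mhz):
    """Average power spectral density when total_dbm is spread evenly over bw_mhz."""
    return total_dbm - 10 * math.log10(bw_mhz)


def per_subcarrier_dbm(total_dbm, bw_mhz):
    """Power per used subcarrier for an even split across USED_SUBCARRIERS."""
    return total_dbm - 10 * math.log10(USED_SUBCARRIERS[bw_mhz])


def mask_floor_dbm_per_mhz(total_dbm, bw_mhz, floor_dbr=-40.0):
    """Absolute level of the mask's outermost segment, approximating the dBr
    reference by the average in-band PSD (a simplification)."""
    return psd_dbm_per_mhz(total_dbm, bw_mhz) + floor_dbr


TOTAL_DBM = 16.0  # hypothetical transmit power, the same for every bandwidth
for bw in (20, 40, 80):
    print(f"{bw} MHz: {per_subcarrier_dbm(TOTAL_DBM, bw):6.1f} dBm/subcarrier, "
          f"mask floor {mask_floor_dbm_per_mhz(TOTAL_DBM, bw):6.1f} dBm/MHz")
```

The widest bandwidth pushes the absolute mask limit lowest, which is why the analyzer's noise floor matters most there.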

By using these clever techniques, test coverage can be increased without increasing the number of test items. In this way, test coverage can reveal the basic performance of the transmitter.

Receiver Test Considerations

In the RF section (see Figure 3), the antenna receives the external RF modulated signal, which is then conditioned. After quadrature (IQ) down-conversion, the baseband section analyzes the resulting IQ signal. The receive chain may be a single chip or, more commonly, a transceiver chip plus a front-end module (FEM).

The following insights about receive are valuable in considering test coverage:

* At RF, the downconverter does not perform any explicit channel selection, so all signals will be downconverted in the same way.

* At RF, the only real impact comes from the receiver noise figure, which affects all signals alike, since no channel filtering takes place before baseband.

* At both RF and baseband, IQ mismatch and phase noise have the greatest impact on the highest-order modulation schemes, and passing at lower data rates with reduced input power does not ensure that the higher-order schemes will work.

* At both RF and baseband, group delay problems caused by the channel select filters have the greatest impact at higher data rates.

* At baseband, the number of subcarriers increases in direct proportion to the occupied bandwidth.

* At baseband, the effect of the channel select filters varies with the selected standard, but the signal processing is unaware of the RF frequency.

* At baseband, reducing the data rate not only lowers the required signal-to-noise ratio but also makes the receiver more tolerant of other impairments.

So far, transmit test coverage spans nine frequencies: three at 2.4 GHz and six at 5 GHz. With the same approach, receiver test coverage spans seven frequencies (three at 2.4 GHz and four at 5 GHz).

Unlike the transmit path, receiver test coverage requires varying the data rate both to verify operational performance and to extract performance metrics. Tests at the highest data rate in up to three bandwidths are therefore needed to confirm that IQ mismatch and phase noise are not degrading performance. These tests also verify that the channel select filter introduces no other impairments.

With this 5 GHz guideline, it is easy to choose three of the four 5 GHz frequencies. The first is the highest frequency at the highest data rate, so that the receiver is tested where phase noise is highest. If IQ mismatch can be assumed acceptable at a specific frequency, the other two frequencies can be used for functional tests (if not, all frequencies must be tested). Again, at least one basic data rate should be measured, preferably the ACK rate; 24 Mbps and 1 Mbps should therefore occupy two of the remaining four frequencies, because they are critical to the device's basic operation. The last two frequencies can be used for 11n, testing legacy rates, Greenfield rates, and other basic rates such as QPSK and BPSK.

By using these clever techniques, test coverage can be improved without increasing the number of test items. In this way, test coverage can reveal the basic performance of the receiver.

Data rate selection

From the discussion above, it is clear that moving away from the traditional approach of testing every required frequency at the highest data rate brings significant benefits. In other words, a judicious selection of data rates and bandwidths exercises more operating modes while still verifying the DUT's performance metrics, so we achieve greater test coverage with the fewest test items. This is the goal manufacturing engineers should keep in mind when planning tests for emerging 802.11ac devices. The following suggestions concern the selection of data rates.

Naturally, we should test the highest-order modulation for each bandwidth, so for 802.11ac chips three of the nine frequencies are used for 11ac. We should test MCS9 at the highest frequency, because phase noise increases with frequency, so that the true worst case is tested against the EVM requirement. Testing each bandwidth also ensures that the baseband anti-aliasing filter does not degrade transmission quality. The other two bandwidths, however, can use lower 11ac rates. To ensure that legacy operation works, we should also test the backward compatibility modes, namely 54 Mbps, MCS7, and 11 Mbps DSSS; MCS7 can easily be tested at 40 MHz. That leaves three modulation and frequency combinations, which are just right for the three standard acknowledgment (ACK) rates: typically 24 Mbps, MCS4, and 1 Mbps. Note that these test items may vary depending on the implementation. Of the two remaining 11ac rates, one should be the ACK rate and the other perhaps the lowest rate. Of course, among these modulations, 11 Mbps and 1 Mbps must be tested at 2.4 GHz, and the last 2.4 GHz frequency can carry the most challenging rate in that band, such as MCS7 at the highest frequency.

Summary

As shown above, adding 5 GHz test content to an existing 2.4 GHz test plan creates new opportunities to improve test coverage. 5 GHz does increase the test workload, but an analysis of test coverage shows that the increase is modest. Moreover, with an understanding of the underlying measurements, the additional tests can themselves improve test coverage. For transmitter testing, we can vary the modulation schemes while still maintaining the existing coverage. For receiver testing, the benefit is less dramatic, but fewer test points are actually needed, and more modulations can be tested within a given number of test items than with the traditional approach.


Reference address:A smart approach to testing 802.11ac 5GHz devices in production

"If I were asked to test an 802.11ac device to ensure good product quality, how many items would really need to be tested during production?"

Straight to the "low, medium, high" approach

Previously, we usually implemented the "low, medium, high" approach to verify 2.4GHz devices. This means testing the lowest, middle, and highest frequencies (channels) within the supported frequency range. This straightforward approach seems practical for the 5 GHz band, but changes when you consider test coverage. Referring to Figure 1, we first notice that the 5 GHz band covers much more spectrum than the 2.4 GHz ISM band, which has a bandwidth of less than 100 MHz. Second, chips that support operation at 5 GHz are usually operated separately on sub-bands with separate verification information. This division into sub-bands is caused by the different maximum transmit powers in different frequency ranges, and also because it is difficult to produce a set of calibration parameters that can support the entire 5 GHz band. Obviously, the "low, medium, high" approach does not provide sufficient test coverage for these sub-bands.

Figure 1: Overview of the 802.11 spectrum in the 2.4/5 GHz bands (including 802.11ac)

If you ignore the 5 GHz requirements for the moment, it makes sense to test the low, mid, and high frequencies in the 2.4 GHz band. The approach of testing at the two extreme points and the center point ensures that the DUT has the same behavioral characteristics on all channels; in fact, this process can also verify the flatness of the entire frequency range with some simple post-processing. Referring to Figure 2, it can be seen that there is a possibility of missing defects and only seeing two test points, for example, at the band edge where unexpected filter mismatches or roll-offs often occur. This defect scenario is the underlying reason why we recommend using three test points in the 2.4 GHz band. In addition, this underlying strategy is the basis for our consideration of test coverage in the 5 GHz band.

Figure 2: Unexpected filter response misses defect points, resulting in only two test points.

Workaround: Chip calibration

A valuable insight into test coverage considerations is that chip vendors typically provide a fixed calibration table for the transmitter and receiver, which in most cases tells the device how to operate within the specified power limits. Typically, the calibration table includes information about typical gain over frequency and power information for the transmitter and receiver. While such tables exist, it is best practice for manufacturing to consider this basic calibration operation as part of test coverage. The most

common calibration process consists of two steps that are traceable to power measurements:

(1) calibrate the transmit power of the DUT;

(2) transmit the calibration information to the receiver.

In this frequency-by-frequency measurement approach, the power calibration process verifies the performance parameters and adds the final data to the calibration table.

Does 5 GHz mean a different test process?

Test coverage for the 5 GHz band follows the same strategy as for 2.4 GHz. Calibration for the 5 GHz band is more important due to the higher frequency operation, especially since 5 GHz covers much more frequency space (including the sub-band decomposition) than 2.4 GHz. Another consideration is that the band select filters for 5GHz are not aligned to each sub-band, but to the covered frequencies of all sub-bands.

In addition, calibration is more complex for 5GHz. The reason for dividing the calibration range into multiple sub-bands is that a calibration process covering the entire 5GHz band is difficult to implement, especially because different sub-bands usually have different transmit target powers. Testing a single frequency point within a sub-band (especially if it is the same frequency as the calibration process) provides little additional information. For example, this simple approach will not detect filter roll-offs at the band edges or power rises and falls across the sub-bands.

These operational and calibration characteristics are the main differences that the new test coverage must deal with.

5GHz Validation

Validation is the process of confirming that a device can operate properly on the frequencies it supports. Applying a 2.4GHz test strategy to the 5GHz band involves performing low-frequency, mid-frequency, and high-frequency validations for each sub-band of the 5GHz band. Similar to the calibration process, the validation process also starts with the validation of the transmit function and then the validation of the receive function. Depending on the priorities of the production process, it may be wise to test the low, mid, and high frequencies across the entire 5 GHz band, but it may be better to test at a certain frequency point within each subband. The latter will reduce test time, but will not detect many manufacturing defects, only failures in overall performance.

Further examination of the test coverage of the "low, mid, high" approach on the subbands can provide opportunities to improve the test plan. At first glance, it seems a bit excessive to obtain data at low, mid, and high frequency points on each subband. The purpose of examining the middle point in the 2.4 GHz band is to detect filter mismatch, but this same middle point defect mechanism does not exist in the 5 GHz subband. Similarly, the low and high frequency points are not close to the subband edges, which is more interesting for verification operations. With this observation in mind, it can be seen that test coverage can be improved by measuring the two extreme points of each subband - especially if the subbands are calibrated using the curve centering method (in which the middle point is usually used for centering).

As this initial look at test coverage tells us, the combined results of the DUT characteristics and defect mechanisms are influencing our test item selection process. By adjusting test coverage to accommodate these influencing factors, manufacturing engineers will be able to accurately select test items to ensure product quality. In general, transmit and receive functions represent logical categories of test coverage that we recommend. Similarly, test coverage will include logical categories of modulation and throughput. In this way, the collection of all test items that can fully verify the device's operational performance while screening for product defects becomes the optimal test coverage. This process will serve as the basis for organizing the rest of this article.

When testing enters the receive characterization stage, the target test can additionally increase filter ripple and other frequency-dependent indicators. In this approach, we should include the edge frequency points of the entire band and several other frequency points distributed over the band. It is recommended to take at least one test frequency per sub-band, but it should be noted that it does not make sense to use the same frequency as in the transmit test process. The general principle is to avoid testing the performance of the receiver at two nearly identical frequency points; for example, the sub-band edge bands are usually adjacent. Thus, a judicious selection of frequency points allows us to discover product defects in a shorter test time.

Where to test?

In summary, the 2.4 GHz band takes advantage of existing test coverage. The 5 GHz band requires emission verification on the extreme channels supported by each chip sub-band, which can be seen as a new test item for 5 GHz. For example, the three sub-bands will require at least six frequency values (the extreme channels of each sub-band) for transmitter testing to ensure flat power calibration of each sub-band and frequency performance at the band edges. Similarly, the receiver will benefit from measurements at the highest and lower band frequencies (band edges) as well as several other frequencies distributed across the band. As a practical compromise, we can also take only one frequency point in each sub-band (minimum), that is, 3-5 frequencies in the end; this is sufficient in most cases. These test items form a solid frequency foundation for test coverage of transmitter and receiver functions. [page]

All of the above statements naturally assume that the chip has been carefully characterized and that we can choose the ideal frequencies to test based on this analysis data.

What to test?

The next question to consider is what to verify at each selected frequency with so many possible test conditions. Test coverage must be expanded upon the existing 2.4 GHz test plan to meet the requirements of 802.11ac. The existing test base may include tests to verify power, EVM, and mask at maximum data rates at low, mid, and high frequencies. In addition to DSSS modulated signals, our first task is to add OFDM modulated signals to the test coverage. Manufacturing engineers typically test OFDM modulated signals at the same frequencies with similar test parameters. It is important to note during calibration that since in many cases DSSS and OFDM use different calibration parameters (one parameter is offset by a fixed amount from the other), it is necessary to verify the calibration amount for both methods. Fortunately, DSSS is not supported in the 5 GHz band, so this test item is not necessary. On the other hand, many other transmit bandwidths and modulation methods are supported - especially after the introduction of the 802.11ac standard.

The best test coverage should consider the conditions of the test object

Figure 3: Simplified schematic of a typical transceiver

. As shown in Figure 3, a typical transceiver usually consists of a transmitter and a receiver, both of which consist of a baseband section and RF section. From a test perspective, this structure is important for test coverage considerations.

Transmitter Test Considerations

In the transmitter, the modulated signal is generated at baseband in the form of IQ signals. Once the signal passes through the necessary anti-aliasing filters, the antenna transmits the RF signal after up-conversion and some signal conditioning functions (i.e. gain control, band selection filters). The transmit chain may consist of a single chip; more commonly, it may consist of a transceiver chip and a front-end module (FEM). The

following insights about transmit are valuable for test coverage considerations:

* At baseband, the DUT has a significant characteristic that the modulated signal is independent of the transmit frequency.

* At baseband, the number of carriers increases in direct proportion to the bandwidth they occupy and the anti-aliasing filters are adjusted accordingly.

* At baseband and RF, the bandwidth is proportional to the baseband signal.

* At RF, quadrature (IQ) impairments can be compensated for by correction factors (usually in time vs. frequency format).

* At RF, the modulated signal can be simplified to RF power over a given bandwidth.

* At RF, IQ mismatch and phase noise add to the signal in the same way, independent of frequency.
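The baseband point about carriers scaling with bandwidth can be made concrete with the 802.11ac (VHT) OFDM numerology. The sketch below assumes the standard 312.5 kHz subcarrier spacing and the VHT data/pilot tone counts for 20, 40, and 80 MHz. It shows that the FFT size doubles exactly with each bandwidth step, while the occupied tone count grows roughly in proportion. The function names are illustrative only.

```python
# Sketch: 802.11ac (VHT) OFDM numerology per channel bandwidth.
# With a fixed 312.5 kHz subcarrier spacing, the FFT size (and hence the
# number of subcarriers) scales in direct proportion to the bandwidth.
SUBCARRIER_SPACING_KHZ = 312.5

# bandwidth (MHz) -> (data subcarriers, pilot subcarriers) for VHT PPDUs
VHT_TONES = {20: (52, 4), 40: (108, 6), 80: (234, 8)}


def fft_size(bandwidth_mhz: int) -> int:
    """FFT size implied by a fixed 312.5 kHz subcarrier spacing."""
    return round(bandwidth_mhz * 1000 / SUBCARRIER_SPACING_KHZ)


def occupied_tones(bandwidth_mhz: int) -> int:
    """Data plus pilot subcarriers actually carrying energy."""
    data, pilots = VHT_TONES[bandwidth_mhz]
    return data + pilots


if __name__ == "__main__":
    for bw in sorted(VHT_TONES):
        print(f"{bw:>3} MHz: FFT {fft_size(bw):>3}, occupied tones {occupied_tones(bw)}")
```

Because the transmitter's RF behavior is assumed not to depend on which tones are active, a wider signal exercises a superset of what a narrower one does. The section below relies on this when choosing test items.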

Transmitter test coverage should verify the operation of the DUT and find defects; that is the primary goal of testing. Because there are so many operating conditions, measuring all of them would take too long. The challenge is therefore to remove overlapping conditions and bring test time down to a manageable level. Removing these overlaps relies on the transmitter insights listed above, which are also useful for optimizing test coverage.

A wise test strategy uses different modulation methods at different frequencies to exercise more of the DUT's behavior. This is in stark contrast to the brute-force approach of repeating the same data rate at every frequency. In addition, as long as the transmit target power stays the same, a smart approach tests the EVM of each modulation against the most stringent EVM requirement in the plan. For example, when 11n is supported, a 6 Mbps OFDM signal should be tested against the 54 Mbps or MCS7 EVM limit.
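A minimal sketch of the "tightest EVM limit" idea follows. The limits are the IEEE 802.11 relative constellation error requirements for the listed rates: 6 Mbps BPSK 1/2, 24 Mbps 16-QAM 1/2, 54 Mbps 64-QAM 3/4, HT MCS7 64-QAM 5/6, and VHT MCS9 256-QAM 5/6. The rate labels and helper names are hypothetical. The sketch also assumes that every rate is transmitted at the same target power, as the paragraph above requires.

```python
# Sketch: judge every OFDM rate against the tightest EVM limit in the plan.
# Values are 802.11 transmitter EVM limits in dB (more negative = stricter).
EVM_LIMIT_DB = {
    "6M": -5.0,     # BPSK 1/2
    "24M": -16.0,   # 16-QAM 1/2
    "54M": -25.0,   # 64-QAM 3/4
    "MCS7": -28.0,  # 64-QAM 5/6 (HT)
    "MCS9": -32.0,  # 256-QAM 5/6 (VHT)
}


def tightened_limit(rates_in_plan):
    """Most stringent (most negative) EVM limit among the planned rates."""
    return min(EVM_LIMIT_DB[r] for r in rates_in_plan)


def passes(measured_evm_db, rate, rates_in_plan, tighten=True):
    """Pass/fail for one measurement; tighten=False uses the rate's own limit."""
    limit = tightened_limit(rates_in_plan) if tighten else EVM_LIMIT_DB[rate]
    return measured_evm_db <= limit


if __name__ == "__main__":
    plan_11n = ["6M", "54M", "MCS7"]
    # A 6 Mbps burst at -27 dB EVM easily meets its own -5 dB limit,
    # but not the -28 dB MCS7 limit applied under the tightened scheme.
    print(passes(-27.0, "6M", plan_11n, tighten=False), passes(-27.0, "6M", plan_11n))
```

The design choice is that a low-order burst measured against a high-order limit reveals the same transmitter impairments a high-order burst would, while also confirming that the low-order mode works.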

Further optimization is possible by varying the packet payload length. At the lower data rates, adjusting the payload so that packets have the same duration (rather than the same payload) as at the higher rates is a smart way to measure settling time. This approach also keeps the thermal conditions the same, which can help identify other types of defects.
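Equal-duration packets can be sized with the legacy OFDM (802.11a/g) TXTIME formula: a 16 µs preamble, a 4 µs SIGNAL field, and 4 µs data symbols carrying 16 SERVICE bits, 8 bits per PSDU octet, and 6 tail bits. The sketch assumes that legacy timing for simplicity. HT/VHT packets have longer preambles and different symbol math, and the helper names are hypothetical.

```python
import math

T_PREAMBLE_US = 16      # legacy short + long training fields
T_SIGNAL_US = 4         # SIGNAL field
T_SYM_US = 4            # OFDM symbol with 0.8 us guard interval
MAX_PSDU_OCTETS = 4095  # legacy LENGTH field limit


def n_dbps(rate_mbps: int) -> int:
    """Data bits per OFDM symbol: rate x 4 us (e.g. 6 Mbps -> 24 bits)."""
    return rate_mbps * T_SYM_US


def txtime_us(rate_mbps: int, psdu_octets: int) -> int:
    """Legacy OFDM packet duration: 16 SERVICE + 8*L + 6 tail bits."""
    n_sym = math.ceil((16 + 8 * psdu_octets + 6) / n_dbps(rate_mbps))
    return T_PREAMBLE_US + T_SIGNAL_US + T_SYM_US * n_sym


def payload_for_duration(rate_mbps: int, target_us: int) -> int:
    """Largest PSDU (octets) whose packet does not exceed target_us."""
    n_sym = (target_us - T_PREAMBLE_US - T_SIGNAL_US) // T_SYM_US
    octets = (n_sym * n_dbps(rate_mbps) - 22) // 8
    return max(0, min(octets, MAX_PSDU_OCTETS))


if __name__ == "__main__":
    for rate in (54, 24, 6):
        length = payload_for_duration(rate, 500)
        print(rate, "Mbps:", length, "octets ->", txtime_us(rate, length), "us")
```

With a 500 µs target, the same burst length corresponds to 3237 octets at 54 Mbps but only 357 octets at 6 Mbps. This is why the payload, not the duration, has to change between rates.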

Another smart technique is to measure the transmit power at a given frequency with signals of different bandwidths, expecting the same power reading. This exercises the anti-aliasing filter, whose setting must change with the bandwidth. The underlying assumption is that the transmitter output characteristics are the same regardless of the occupied bandwidth: the narrowband case is just a subset of the widest case. Of course, the signals cannot be centered at exactly the same frequency, but the wider signal's bandwidth should cover the desired frequencies (e.g., a 40 MHz signal is offset by 10 MHz relative to a 20 MHz signal on the same channel).
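The 10 MHz offset follows from the 5 GHz channelization, where the center frequency is 5000 + 5 × channel MHz. The sketch below finds the 40 or 80 MHz channel that contains a given 20 MHz channel and reports the center offset. Only the UNII-1/UNII-2 wide-channel centers are listed, as an assumed subset, and the function names are hypothetical.

```python
# Sketch: which 40/80 MHz channel contains a given 20 MHz channel (5 GHz band),
# and how far its center sits from the 20 MHz center.
# Only UNII-1/UNII-2 wide-channel centers are listed (assumed subset).
WIDE_CENTERS = {40: [38, 46, 54, 62], 80: [42, 58]}


def center_mhz(channel: int) -> int:
    """5 GHz band channel center frequency: 5000 + 5 * channel (MHz)."""
    return 5000 + 5 * channel


def containing_channel(primary20: int, bandwidth_mhz: int) -> int:
    """Center channel number of the wide channel spanning primary20."""
    f = center_mhz(primary20)
    half = bandwidth_mhz / 2
    for ch in WIDE_CENTERS[bandwidth_mhz]:
        if center_mhz(ch) - half < f < center_mhz(ch) + half:
            return ch
    raise ValueError(f"channel {primary20} not in listed {bandwidth_mhz} MHz channels")


def center_offset_mhz(primary20: int, bandwidth_mhz: int) -> int:
    """Wide-channel center minus 20 MHz channel center, in MHz."""
    return center_mhz(containing_channel(primary20, bandwidth_mhz)) - center_mhz(primary20)


if __name__ == "__main__":
    for bw in (40, 80):
        ch = containing_channel(36, bw)
        print(f"ch36 in {bw} MHz ch{ch}: offset {center_offset_mhz(36, bw)} MHz")
```

For channel 36 (5180 MHz), the enclosing 40 MHz channel 38 is centered 10 MHz higher, and the 80 MHz channel 42 is centered 30 MHz higher. In both cases the 20 MHz signal stays inside the wider signal's bandwidth.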

Spectral mask measurements become more difficult as the bandwidth of the modulated signal increases, because the total transmitted power is spread across more carriers (a wider bandwidth). This lowers the power and signal-to-noise ratio of each carrier and moves the overall mask closer to the noise floor. Therefore, test coverage should place more of the critical mask measurements at the highest bandwidth.
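The effect on the mask can be estimated by assuming the total power is spread evenly across the channel. The average spectral density then falls by 10·log10(BW_wide / BW_narrow) dB. Because mask limits are specified relative to the in-band density (dBr), the absolute level of each mask point falls by the same amount. The 17 dBm output power and the -40 dBr mask point used below are assumptions for illustration.

```python
import math


def psd_dbm_per_mhz(total_power_dbm: float, bandwidth_mhz: float) -> float:
    """Average power spectral density for power spread evenly over the bandwidth."""
    return total_power_dbm - 10 * math.log10(bandwidth_mhz)


def density_drop_db(bw_narrow_mhz: float, bw_wide_mhz: float) -> float:
    """How much lower the in-band density is for the wider signal at equal power."""
    return 10 * math.log10(bw_wide_mhz / bw_narrow_mhz)


def mask_point_dbm_per_mhz(total_power_dbm: float, bandwidth_mhz: float, dbr: float) -> float:
    """Absolute level of a mask point given relative to the in-band density."""
    return psd_dbm_per_mhz(total_power_dbm, bandwidth_mhz) + dbr


if __name__ == "__main__":
    for bw in (20, 40, 80):
        # 17 dBm and -40 dBr are illustrative assumptions, not spec values.
        print(bw, "MHz:", round(mask_point_dbm_per_mhz(17.0, bw, -40.0), 2), "dBm/MHz")
```

At equal output power, an 80 MHz signal sits about 6 dB lower in density than a 20 MHz signal. Its outer mask points therefore end up about 6 dB closer to the analyzer's noise floor, which is why the widest bandwidth deserves the critical mask checks.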

By using these clever techniques, test coverage can be increased without increasing the number of test items. In this way, test coverage can reveal the basic performance of the transmitter.

Receiver Test Considerations

In the receiver (see Figure 3), the antenna picks up the external modulated RF signal, and the RF section conditions it. After down-conversion with a quadrature (IQ) structure, the baseband section analyzes the resulting IQ signal. The receive chain may consist of a single chip or, more commonly, a transceiver chip and a front-end module (FEM).

The following insights about the receiver are valuable when considering test coverage:

* At RF, the downconverter does not perform any explicit channel selection, so all signals will be downconverted in the same way.

* At RF, the only real impact comes from the receiver noise figure, which affects all signals (just as if there were no noise filtering before baseband).

* At both RF and baseband, IQ mismatch and phase noise have the greatest impact on the highest-order modulation schemes, so passing at low input power with a lower data rate does not ensure that higher-order modulation schemes will work.

* At both RF and baseband, group delay caused by the channel-select filters has the greatest impact at higher data rates.

* At baseband, the number of carriers increases in direct proportion to the occupied bandwidth.

* At baseband, the effect of the channel-select filters varies with the selected standard, but the signal processing is unaware of the RF frequency.

* At baseband, reducing the data rate not only lowers the required signal-to-noise ratio but also makes the receiver more tolerant of other impairments.
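The last point can be quantified with the legacy 802.11a/g receiver minimum input sensitivities for 20 MHz OFDM. These are specified for a packet error rate below 10% with 1000-octet PSDUs. The sketch shows how much weaker a signal each lower rate must tolerate compared with 54 Mbps. It also shows why passing at 6 Mbps says little about 54 Mbps. The helper name is hypothetical.

```python
# 802.11a/g (20 MHz OFDM) receiver minimum input sensitivity in dBm,
# defined for PER < 10% with 1000-octet PSDUs.
MIN_SENSITIVITY_DBM = {6: -82, 9: -81, 12: -79, 18: -77, 24: -74, 36: -70, 48: -66, 54: -65}


def extra_margin_db(rate_mbps: int, reference_rate_mbps: int = 54) -> int:
    """How many dB weaker a signal this rate must decode than the reference rate."""
    return MIN_SENSITIVITY_DBM[reference_rate_mbps] - MIN_SENSITIVITY_DBM[rate_mbps]


if __name__ == "__main__":
    for rate in sorted(MIN_SENSITIVITY_DBM):
        print(f"{rate:>2} Mbps: {MIN_SENSITIVITY_DBM[rate]} dBm, "
              f"{extra_margin_db(rate)} dB below the 54 Mbps requirement")
```

The 17 dB spread between 6 and 54 Mbps is the SNR headroom that lets low rates hide IQ mismatch, phase noise, and group-delay problems.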

So far, transmit test coverage has been defined for nine frequencies: three at 2.4 GHz and six at 5 GHz. Using the same approach, receiver test coverage will include seven frequencies (three at 2.4 GHz and four at 5 GHz).

Unlike the transmit path, receiver test coverage requires varying the data rate to verify operation and to extract performance metrics. Tests at the highest data rate, in up to three bandwidths, are therefore necessary to confirm that IQ mismatch and phase noise are not degrading performance. This approach also verifies that the channel-select filters do not introduce other impairments.

With this guideline, it is easy to select three of the four 5 GHz frequencies. The first is the highest frequency, tested at the highest data rate, so that the receiver is tested where phase noise is highest. If we can assume that IQ mismatch is acceptable at any single frequency, the remaining two can be used for functional testing (if not, all frequencies need to be tested). Again, we should measure at least one of the basic data rates (preferably the ACK rate). 24M and 1M should therefore take two of the remaining four frequencies, because they are critical to the basic operation of the device. The last two frequencies can be used to test 11n in both the legacy-compatible (mixed) format and the Greenfield format, at other basic rates such as QPSK and BPSK.
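One way to write the seven-frequency receive plan down is as a small data structure with a sanity check. This is a sketch under stated assumptions. The channel numbers and the exact pairing of modes to channels are hypothetical choices consistent with the text. At 20 MHz the top single-stream VHT rate is MCS8, because MCS9 is not defined there for one spatial stream. The check only encodes the rules described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RxTest:
    channel: int        # hypothetical channel (center channel for 40/80 MHz)
    band: str           # "2.4" or "5"
    bandwidth_mhz: int  # nominal channel width (DSSS occupies 22 MHz)
    mode: str           # hypothetical mode label
    purpose: str


def center_mhz(channel: int, band: str) -> int:
    """Channel center: 2407 + 5*ch (2.4 GHz, ch 1-13) or 5000 + 5*ch (5 GHz)."""
    return 2407 + 5 * channel if band == "2.4" else 5000 + 5 * channel


RX_PLAN = [
    RxTest(155, "5", 80, "VHT MCS9", "top rate at highest frequency (worst phase noise)"),
    RxTest(54, "5", 40, "VHT MCS9", "top rate, 40 MHz channel filter"),
    RxTest(36, "5", 20, "VHT MCS8", "top single-stream rate at 20 MHz"),
    RxTest(100, "5", 20, "24M", "ACK rate"),
    RxTest(1, "2.4", 22, "1M DSSS", "ACK rate"),
    RxTest(6, "2.4", 20, "HT MCS1 mixed", "11n legacy-compatible format, QPSK"),
    RxTest(11, "2.4", 20, "HT MCS0 greenfield", "11n Greenfield format, BPSK"),
]


def plan_problems(plan):
    """Return a list of rule violations; an empty list means the plan fits the rules."""
    problems = []
    if len(plan) != 7:
        problems.append(f"expected 7 test points, got {len(plan)}")
    if sum(t.band == "2.4" for t in plan) != 3:
        problems.append("expected 3 test points at 2.4 GHz")
    five = [t for t in plan if t.band == "5"]
    if five:
        top = max(five, key=lambda t: center_mhz(t.channel, t.band))
        if top.mode != "VHT MCS9":
            problems.append("highest 5 GHz frequency should carry the highest rate")
    if {t.bandwidth_mhz for t in five if t.mode.startswith("VHT")} != {20, 40, 80}:
        problems.append("highest-rate tests should cover 20/40/80 MHz")
    return problems


if __name__ == "__main__":
    print(plan_problems(RX_PLAN) or "plan OK")
```

Keeping the plan as data makes it easy to swap channels for a given chip's sub-band calibration and re-check that the coverage rules still hold.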

By using these clever techniques, test coverage can be improved without increasing the number of test items. In this way, test coverage can reveal the basic performance of the receiver.

Data rate selection

From the discussion above, it is clear that moving away from the traditional approach of testing every required frequency at the highest data rate brings significant benefits. In other words, judicious selection of multiple data rates and bandwidths lets us verify operation in more modes while still verifying the DUT's performance metrics. The result is greater test coverage with the fewest test items. This is what manufacturing engineers should aim for when planning tests for emerging 802.11ac devices.

The following suggestions relate to the selection of data rates.

Naturally, we should test the highest-order modulation for each bandwidth, so for 802.11ac chips, three of the nine frequencies are used for 11ac. This also ensures that the baseband anti-aliasing filter does not degrade transmission quality. We should test MCS9 at the highest frequency, because phase noise increases with frequency; this covers the true worst case in which the EVM requirement must still be met. The other two bandwidths, however, can use lower 11ac rates. To ensure that legacy operation works, we should also test the backward-compatible modes, namely 54M, MCS7, and 11M DSSS; MCS7 can easily be tested at 40 MHz. That leaves three combinations of modulation and frequency. These are just right for testing three standard acknowledgment (ACK) rates, typically 24 Mbps, MCS4, and 1 Mbps. Note that these test items may vary depending on the implementation. Of the two remaining 11ac rates, one should be an ACK rate, and the other should perhaps be the lowest rate. Of course, of the modulations above, 11M and 1M must be tested at 2.4 GHz. The last 2.4 GHz frequency could carry the most challenging rate in that band, such as MCS7 at the highest frequency.
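To put a rough number on "phase noise increases with frequency", a common first-order rule for a PLL synthesizer can be used. With a fixed reference, in-band phase noise scales as 20·log10(N), so raising the LO frequency by a factor f_high / f_low raises phase noise by 20·log10(f_high / f_low) dB. This is an assumed approximation, since actual behavior depends on the VCO and loop design. The helper name is hypothetical.

```python
import math


def lo_phase_noise_increase_db(f_low_mhz: float, f_high_mhz: float) -> float:
    """First-order estimate: PLL in-band phase noise rises by 20*log10(f_high/f_low)
    when the LO frequency is raised with the same reference (rule of thumb)."""
    return 20 * math.log10(f_high_mhz / f_low_mhz)


if __name__ == "__main__":
    # 2.4 GHz channel 1 vs top of the 5 GHz band, and lowest vs highest 5 GHz channel.
    print(round(lo_phase_noise_increase_db(2412, 5825), 2), "dB")
    print(round(lo_phase_noise_increase_db(5180, 5825), 2), "dB")
```

Under this rule of thumb, the top of the 5 GHz band sees several dB more phase noise than 2.4 GHz and about 1 dB more than the bottom of the 5 GHz band. This supports placing MCS9 on the highest channel.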

Summary

As described above, adding 5 GHz test content to an existing 2.4 GHz plan creates new opportunities to improve test coverage. 5 GHz does increase the test workload, but analyzing test coverage shows that the increase is modest. Moreover, with an understanding of the basic measurement items, the added tests also improve test coverage. For transmitter testing, we can choose different modulation schemes while maintaining the existing coverage. For receiver testing, the benefit is less dramatic, but fewer test points are actually needed, and for a given number of test items we can test more modulations than the traditional approach allows.
