Analysis of the working principle of CMOS/CCD image sensors

Source: Internet Publisher: 刘德华河北分华 Keywords: Sensor CMOS CCD Updated: 2025/01/14

Both CCD and CMOS devices use photosensitive elements as the basic means of capturing images. The core of either sensor is the photosensitive diode (photodiode), which generates an output current when it receives light; the magnitude of that current is proportional to the light intensity. The two sensor types differ in the circuitry surrounding the photodiode. Each photosensitive element of a CCD contains, besides the photodiode, only a storage unit that controls the transfer of charge to the adjacent element, so the photodiode occupies most of the element's area. In other words, the CCD sensor has a larger effective photosensitive area, receives a stronger light signal under the same conditions, and produces a correspondingly clearer output signal.

The structure of a CMOS sensor, by contrast, is more complex. In addition to the photosensitive diode at its core, each pixel also includes an amplifier and analog-to-digital conversion circuitry, so that each pixel consists of one photosensitive diode and three transistors. The photosensitive diode occupies only a small part of the whole element, so the aperture ratio of a CMOS sensor is much lower than that of a CCD (aperture ratio: the ratio of effective photosensitive area to total sensor area).

As a result, the light signal that a CMOS sensor can capture is significantly weaker than that of a CCD element, and its sensitivity is lower. At the output, the image captured by the CMOS sensor is less rich than that of the CCD sensor: some image detail is lost and noise is obvious. This is a major reason why early CMOS sensors could be used only in low-end products.

Another problem caused by the low aperture ratio of CMOS is that its pixel density cannot match that of CCD. As the pixel density increases, the area available to each photosensitive element shrinks, and because the aperture ratio of CMOS is already low, the effective photosensitive area becomes so small that the loss of image detail is severe. Therefore, for the same sensor size, the pixel count of a CCD was always higher than that of a CMOS sensor of the same period, which is also one of the important reasons why CMOS was unable to enter the mainstream digital camera market for a long time.
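The relationship between pixel density, aperture ratio, and effective photosensitive area can be illustrated with a little arithmetic. The sketch below is only an illustration: the function name and all numeric values are hypothetical and are not taken from any real sensor.

```python
def photosensitive_area_per_pixel(sensor_area_mm2, pixel_count, aperture_ratio):
    """Return the effective photosensitive area of one pixel in square micrometres.

    aperture_ratio is the ratio of effective photosensitive area to total area,
    as defined in the text above.
    """
    if pixel_count <= 0:
        raise ValueError("pixel_count must be positive")
    if not 0.0 < aperture_ratio <= 1.0:
        raise ValueError("aperture_ratio must be in (0, 1]")
    # 1 mm^2 = 1e6 um^2; the sensor area is shared equally by all pixels.
    pixel_area_um2 = sensor_area_mm2 * 1e6 / pixel_count
    return pixel_area_um2 * aperture_ratio


if __name__ == "__main__":
    # Hypothetical comparison: same sensor size, different aperture ratios.
    for label, ratio in (("high aperture ratio", 0.75), ("low aperture ratio", 0.25)):
        for pixels in (2_000_000, 4_000_000, 8_000_000):
            area = photosensitive_area_per_pixel(24.0, pixels, ratio)
            print(f"{label}, {pixels} pixels: {area:.2f} um^2 per pixel")
```

Doubling the pixel count on the same sensor halves the light-collecting area of each pixel, and a low aperture ratio shrinks it further, which is the effect described above.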

Each photosensitive element corresponds to one image point of the image sensor. Because a photosensitive element can only sense the intensity of light and cannot capture color information, a color filter must be placed over it. Different sensor manufacturers have different solutions for this. The most common is an RGB filter array: red, green, and blue filters are arranged over four image points in a 1:2:1 ratio to form one color pixel (that is, the red and blue filters each cover one image point, and the remaining two image points are covered by green filters). This ratio is chosen because the human eye is most sensitive to green. Sony's four-color CCD technology replaces one of the green filters with emerald green (called the E channel by some media), giving a new R, G, B, and E color scheme. Regardless of the technology used, the four image points that make up one color pixel must be clearly defined in advance.
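The 1:2:1 filter arrangement can be sketched in a few lines. The text above gives only the ratio, so the specific R-G / G-B ordering of the 2x2 tile used here is an assumption, and the function names are hypothetical.

```python
# Assumed 2x2 filter tile: one red, two green, one blue image point.
FILTER_TILE = (("R", "G"),
               ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_at(row, col):
    """Return the filter colour covering the image point at (row, col)."""
    return FILTER_TILE[row % 2][col % 2]


def mosaic(rgb_image):
    """Keep, for each image point, only the intensity its filter lets through.

    rgb_image is a list of rows, each row a list of (r, g, b) tuples.
    """
    return [
        [pixel[CHANNEL_INDEX[filter_at(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


def count_filters(height, width):
    """Count how many image points each filter colour covers."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[filter_at(r, c)] += 1
    return counts


if __name__ == "__main__":
    print(count_filters(4, 4))
    uniform = [[(10, 20, 30)] * 2 for _ in range(2)]
    print(mosaic(uniform))
```

Each image point records a single intensity value, so the full color of a pixel has to be reconstructed afterwards from the four points of its tile.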

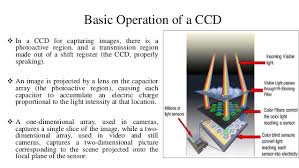

After receiving light, the photosensitive element generates a corresponding current whose magnitude corresponds to the light intensity, so the signal output directly by the photosensitive element is analog. In a CCD sensor, the signal of each photosensitive element is not processed further but is passed directly to the storage unit of the next element, where it is combined with the analog signal generated by that element; the result is then passed on to the third element, and so on, until it is combined with the signal of the last element to form a unified output. Since the electrical signal generated by the photosensitive elements is too weak for direct analog-to-digital conversion, the output data must first be amplified uniformly; this task is performed by an amplifier in the CCD sensor. After amplification, the signal strength of every image point is increased by the same factor. However, since the CCD itself cannot convert the analog signal into a digital signal, a dedicated analog-to-digital conversion chip is also required, and the final output is provided to a dedicated DSP chip in the form of a binary digital image matrix.
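The CCD signal chain described above (serial transfer, one shared amplifier, a separate analog-to-digital converter) can be modeled very roughly as follows. This is a simplified sketch under stated assumptions, not a circuit-accurate model: the charge packets are treated as a simple shift register, and the function name, gain, bit depth, and full-scale value are all hypothetical.

```python
from collections import deque


def ccd_readout(charges, gain, adc_bits=8, full_scale=1.0):
    """Shift charge packets out one by one through a single amplifier and ADC.

    charges: analog signal of each photosensitive element in one row.
    gain: the single amplification factor shared by every image point.
    """
    register = deque(charges)           # the row acts as a shift register
    max_code = (1 << adc_bits) - 1
    codes = []
    while register:
        packet = register.popleft()     # the packet nearest the output leaves first
        amplified = packet * gain       # same amplifier, same gain for all packets
        clipped = min(max(amplified, 0.0), full_scale)
        codes.append(round(clipped / full_scale * max_code))  # external ADC
    return codes


if __name__ == "__main__":
    print(ccd_readout([0.0, 0.125, 0.5], gain=2.0))
```

Because every image point passes through the same amplifier, equal light intensities always produce equal digital values in this model.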

For CMOS sensors, the above workflow does not apply at all. Each photosensitive element of a CMOS sensor directly integrates an amplifier and analog-to-digital conversion logic. When the photodiode receives light, it generates an analog signal; this signal is first amplified by the element's own amplifier and then converted directly into the corresponding digital signal. In other words, in a CMOS sensor each photosensitive element produces a final digital output, and the combined digital signal is processed directly by the DSP chip. The problem is that the amplifiers in a CMOS sensor are analog devices, and there is no guarantee that the gain of every image point is kept strictly the same, so the amplified image data cannot faithfully represent the object being photographed. In the final output this appears as a large amount of noise, and the image quality is significantly lower than that of a CCD sensor.
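The effect of inconsistent per-pixel amplification can be illustrated with a small model. The sketch below assumes each image point has its own gain that deviates slightly from a nominal value; the function names, the uniform deviation model, and all numbers are hypothetical choices made only for illustration.

```python
import random


def cmos_readout(light, gains, adc_bits=8, full_scale=1.0):
    """Amplify and digitise every image point with its own amplifier gain."""
    if len(light) != len(gains):
        raise ValueError("one gain per image point is required")
    max_code = (1 << adc_bits) - 1
    codes = []
    for signal, gain in zip(light, gains):
        amplified = min(max(signal * gain, 0.0), full_scale)
        codes.append(round(amplified / full_scale * max_code))
    return codes


def mismatched_gains(count, nominal, spread, seed=0):
    """Return per-pixel gains scattered within +/- spread of the nominal gain."""
    rng = random.Random(seed)
    return [nominal * (1.0 + rng.uniform(-spread, spread)) for _ in range(count)]


if __name__ == "__main__":
    scene = [0.125] * 6                      # perfectly uniform illumination
    print(cmos_readout(scene, [2.0] * 6))    # identical gains: uniform output
    print(cmos_readout(scene, mismatched_gains(6, 2.0, 0.2)))  # mismatched gains
```

With identical gains a uniformly lit scene yields identical values, while mismatched gains turn the same scene into a pattern of differing values, which is the kind of noise the paragraph above attributes to CMOS sensors.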

京公网安备 11010802033920号