How to use Tesseract for optical character recognition on Raspberry Pi

Source: InternetPublisher:无人共我 Keywords: Character Recognition OCR Updated: 2024/12/27

The ability of machines to observe the real world through cameras and interpret what they see greatly widens the range of things they can do. Whether it is a simple food delivery robot like the Starship robot or an advanced self-driving car like a Tesla, these machines rely on information obtained from sophisticated cameras to make decisions. In this tutorial, we will learn how to extract details from an image by reading the characters in it. This is called Optical Character Recognition (OCR).

This opens the door to many applications, such as automatically reading information from business cards, identifying stores from their name plates, or reading sign boards on the road. Some of us may have already experienced these features through Google Lens, so today we will build something similar using the Tesseract-OCR engine, an OCR tool backed by Google, along with Python and OpenCV to recognize characters in pictures on a Raspberry Pi.

The Raspberry Pi is a portable, low-power device used in many real-time image processing applications such as face detection, object tracking, home security systems, and surveillance cameras.

Prerequisites

As mentioned earlier, we will use the OpenCV library to process images before recognizing the characters in them. So, before proceeding with this tutorial, make sure OpenCV is installed on your Raspberry Pi. It also helps to power your Pi with a 2A adapter and connect it to a display monitor for easy debugging.

This tutorial will not explain how OpenCV works. If you are interested in learning image processing, check out the OpenCV basics and advanced image processing tutorials. You can also learn about contours, blob detection, and more in the image segmentation tutorial using OpenCV.

Installing Tesseract on Raspberry Pi

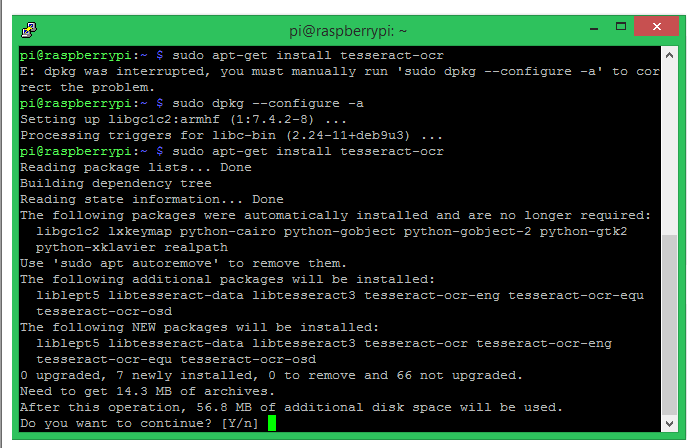

To perform Optical Character Recognition on the Raspberry Pi, we have to install the Tesseract OCR engine on the Pi. To do this, we first make sure the Debian package manager (dpkg) has finished configuring any pending packages, so that the Tesseract installation can proceed cleanly. Use the following command in the terminal window to configure dpkg.

sudo dpkg --configure -a

Then we can go ahead and install Tesseract OCR (Optical Character Recognition) using apt-get. The command is given below.

sudo apt-get install tesseract-ocr

Your terminal window will look like this and the installation will take about 5-10 minutes to complete.

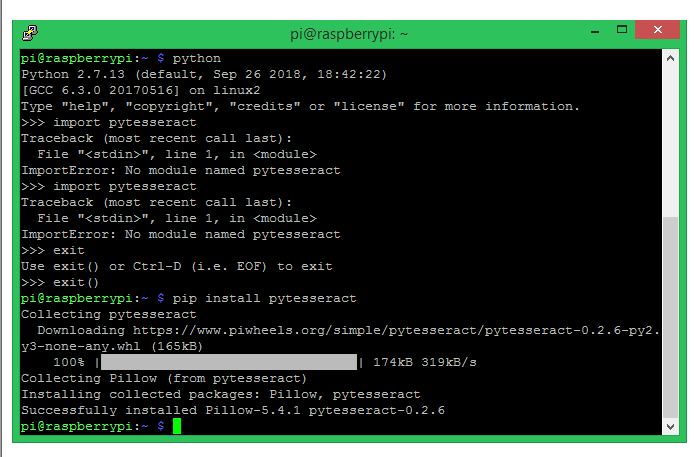

Now that we have installed Tesseract OCR, we have to install the pytesseract package using pip. Pytesseract is a Python wrapper around the Tesseract OCR engine that lets us use Tesseract from Python. Use the following command to install pytesseract.

pip install pytesseract

Before you proceed with this step, make sure you have Pillow installed. Those who have followed the Raspberry Pi Face Recognition tutorial should already have it installed; others can follow that tutorial to install it. Once the installation finishes, pip reports that pytesseract was installed successfully.
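As a quick sanity check after installation, the sketch below reports whether the tesseract binary is on the PATH and whether the pytesseract and PIL modules can be found. The helper name check_ocr_setup is hypothetical and not part of the original tutorial; it relies only on the Python standard library.

```python
import importlib.util
import shutil


def check_ocr_setup():
    """Report which pieces of the OCR toolchain are visible from Python."""
    return {
        # The engine installed with apt-get provides a `tesseract` executable.
        "tesseract_binary": shutil.which("tesseract") is not None,
        # The wrapper installed with pip is imported as `pytesseract`.
        "pytesseract_module": importlib.util.find_spec("pytesseract") is not None,
        # Pillow is imported under the package name `PIL`.
        "pillow_module": importlib.util.find_spec("PIL") is not None,
    }


if __name__ == "__main__":
    for name, found in check_ocr_setup().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

If any entry is reported as missing, repeat the corresponding installation step before moving on.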

Tesseract 4.0 on Windows/Ubuntu

The Tesseract optical character recognition project was originally started at Hewlett-Packard in the mid-1980s, was released as open source in 2005, and was later sponsored by Google. Tesseract has evolved over the years, but it still only works well in controlled environments. If the image has too much background noise or is out of focus, Tesseract does not work reliably.

To overcome this problem, the latest version, Tesseract 4.0, uses deep learning models to recognize characters and even handwriting. It uses a Long Short-Term Memory (LSTM) network, a type of Recurrent Neural Network (RNN), to improve the accuracy of its OCR engine. Unfortunately, at the time of writing this tutorial, Tesseract 4.0 is only available for Windows and Ubuntu and is still in beta for Raspberry Pi. So we decided to try Tesseract 4.0 on Windows and Tesseract 3.04 on Raspberry Pi.

Simple character recognition program on Pi

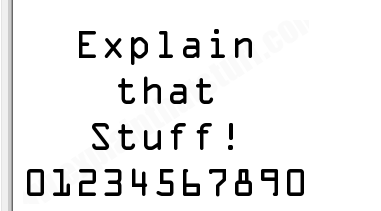

Since we already have the Tesseract OCR and pytesseract packages installed on our Pi, we can quickly write a small program to check how character recognition works on a test image. The test image I used, the program, and the results can be found in the image below.

As you can see, the program is very simple and we have not even used OpenCV. The program is given below.

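import pytesseract  # Python wrapper around the Tesseract OCR engine

from PIL import Image

img = Image.open('1.png')  # Test image in the same directory as the program

text = pytesseract.image_to_string(img, config='')  # Run OCR with default settings

print(text)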

In the above program, we are trying to read the text from an image named “1.png”, which is located in the same directory as the program. The Pillow package is used to open this image and store it in the variable img. Then we use the image_to_string method from the pytesseract package to detect any text in the image and save it as a string in the variable text. Finally, we print the value of text to check the result.

As you can see, the original image actually contains the text “Explain that stuff! 01234567890”, which makes it a good test image because it contains letters, symbols, and numbers. But the output we get from the Pi is “Explain that stuff! Sdfosiefoewufv”, which means our program is not able to recognize any of the numbers in the image. To overcome this problem, people usually use OpenCV to remove noise from the image and then configure the Tesseract OCR engine to suit the image. But remember, you cannot expect 100% reliable output from Tesseract OCR.

Configuring Tesseract OCR to improve results

Pytesseract allows us to configure the Tesseract OCR engine by setting flags that change the way images are searched for characters. The three main flags used when configuring Tesseract OCR are language (-l), OCR engine mode (--oem), and page segmentation mode (--psm).

In addition to the default English, Tesseract supports many other languages including Hindi, Turkish, and French. We will only use English here, but you can download the training data from the official GitHub page and add it to your installation to recognize other languages. It is also possible to recognize two or more different languages in the same image. The language is set with the -l flag followed by the language code. For English it would be -l eng, where eng is the code for English.
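The language flag can be sketched in Python as follows. The helper names language_flag and read_text are hypothetical; the sketch assumes that the training data for every requested language (for example hin for Hindi) is already installed, and it relies on Tesseract accepting several language codes joined with a plus sign.

```python
def language_flag(codes):
    """Build the Tesseract -l flag from one or more language codes."""
    codes = [c.strip() for c in codes if c.strip()]
    if not codes:
        raise ValueError("at least one language code is required")
    # Tesseract accepts several languages joined with '+', e.g. 'eng+hin'.
    return "-l " + "+".join(codes)


def read_text(image_path, codes=("eng",)):
    """Run OCR on an image file with the given languages (needs pytesseract)."""
    # Imported lazily so language_flag() can be used without the OCR stack.
    import pytesseract
    from PIL import Image

    return pytesseract.image_to_string(Image.open(image_path),
                                       config=language_flag(codes))
```

For example, read_text('1.png', ('eng', 'hin')) would ask Tesseract to look for both English and Hindi text in the same image.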

The next flag is the OCR engine mode, which has four different modes. Each mode selects which recognition engine is used on the image. By default, Tesseract uses whichever engine is available in the installed package, but we can change it to use the LSTM neural network engine instead. The four engine modes are shown below. The flag is --oem, so to set it to mode 1, just use --oem 1.

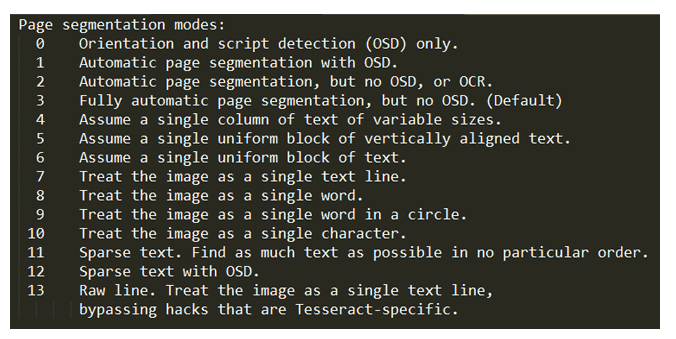

The last and most important flag is the page segmentation mode. It is very useful when the image has a lot of background detail, or when the characters are written in different orientations or sizes. There are 14 different page segmentation modes, all of which are listed below. This flag is --psm, so to set the mode to 11, use --psm 11.
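The three flags can be combined into a single configuration string, as in the minimal sketch below. The mode descriptions are paraphrased from the help output of Tesseract 4 and may differ in older releases such as 3.04; the helper name build_config is hypothetical.

```python
# OCR engine modes (--oem), paraphrased from Tesseract 4's help output.
OEM_MODES = {
    0: "Legacy engine only",
    1: "Neural nets LSTM engine only",
    2: "Legacy + LSTM engines",
    3: "Default, based on what is available",
}

# Page segmentation modes (--psm), paraphrased from Tesseract 4's help output.
PSM_MODES = {
    0: "Orientation and script detection (OSD) only",
    1: "Automatic page segmentation with OSD",
    2: "Automatic page segmentation, no OSD or OCR",
    3: "Fully automatic page segmentation, no OSD (default)",
    4: "Single column of text of variable sizes",
    5: "Single uniform block of vertically aligned text",
    6: "Single uniform block of text",
    7: "Single text line",
    8: "Single word",
    9: "Single word in a circle",
    10: "Single character",
    11: "Sparse text, find as much text as possible in no particular order",
    12: "Sparse text with OSD",
    13: "Raw line, bypassing Tesseract-specific hacks",
}


def build_config(lang="eng", oem=3, psm=3):
    """Return a config string for pytesseract, rejecting unknown modes."""
    if oem not in OEM_MODES:
        raise ValueError(f"unknown OCR engine mode: {oem}")
    if psm not in PSM_MODES:
        raise ValueError(f"unknown page segmentation mode: {psm}")
    return f"-l {lang} --oem {oem} --psm {psm}"


if __name__ == "__main__":
    print(build_config(psm=11))
```

The resulting string can be passed as the config argument of pytesseract.image_to_string.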

Using OEM and PSM in Tesseract Raspberry Pi for better results

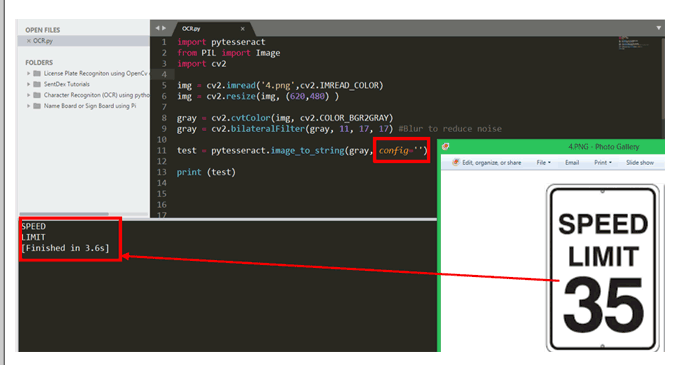

Let’s check the effectiveness of these configuration modes. In the image below, I am trying to recognize the characters on a speed limit board which says “SPEED LIMIT 35”. As you can see, the number 35 is larger than the other letters, which confuses Tesseract, so the output is only “SPEED LIMIT” and the number is missing.

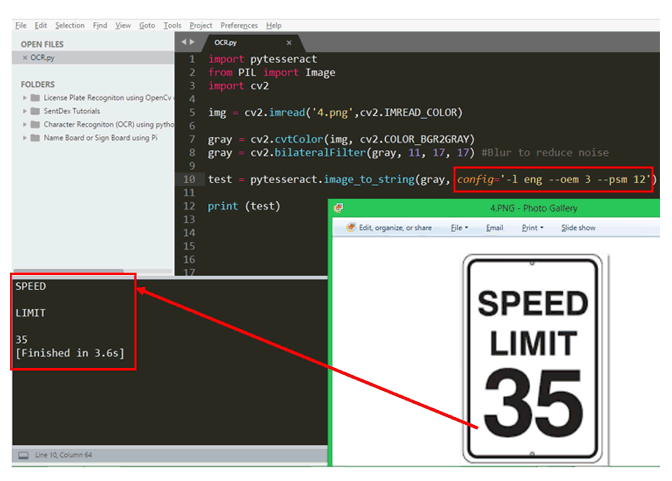

To overcome this, we can set the configuration flags. In the above program the configuration is empty (config=''), so let us now set it using the details provided above. All the text in the image is in English, so the language flag is -l eng, and the OCR engine can be left in the default mode 3, so --oem 3. Finally, for the page segmentation mode, we need to find as many characters as possible in the image, so we use mode 11, which becomes --psm 11. The final configuration line will look like this:

test = pytesseract.image_to_string(gray, config='-l eng --oem 3 --psm 11')

The result can be found below. As you can see, Tesseract is now able to find all the characters in the image, including the numbers.
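Because the best page segmentation mode depends on the picture, it can help to run the same image through several modes and compare the output. The following is a minimal sketch of that idea; the helper name compare_psm_modes and the default list of modes are assumptions, not part of the original program, and the ocr parameter exists only so the helper can be exercised with a stand-in function.

```python
def compare_psm_modes(image, modes=(3, 6, 11), ocr=None):
    """Run OCR once per page segmentation mode and collect the results."""
    if ocr is None:
        # Imported lazily so the helper can be tested with a stand-in callable.
        import pytesseract
        ocr = pytesseract.image_to_string

    results = {}
    for psm in modes:
        config = f"-l eng --oem 3 --psm {psm}"
        # Strip the trailing whitespace Tesseract usually appends.
        results[psm] = ocr(image, config=config).strip()
    return results
```

Calling compare_psm_modes(gray) on a pre-processed image returns a dictionary that maps each mode number to the text recognized in that mode.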

Improving accuracy through confidence levels

Another interesting feature in Tesseract is the image_to_data method. This method provides details such as the location of the recognized text in the image, the confidence of the detection, and the line and page number. Let's try it on a sample image.

In this particular example, we get a lot of noise along with the actual information. The image is a nameplate of a hospital called “Fortis Hospital”. But apart from the name, the image also has other background details such as a logo and a building. So Tesseract tries to convert everything into text and gives us a lot of noise like “$C”, “|”, “S_______S==+”, etc.

In such cases the image_to_data method comes in handy. As you can see, Tesseract returns a confidence value for each piece of text it has recognized: the confidence of Fortis is 64 and the confidence of HOSPITAL is 24, while for the noise the confidence value is 10 or below. In this way, we can use the confidence value to filter out the noise and keep the useful information, which improves accuracy.
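Filtering on confidence can be sketched as below. The helper names and the threshold of 20 are assumptions, with the threshold chosen to sit between the values quoted above (24 for HOSPITAL and 10 or below for noise). The sketch asks pytesseract for dictionary output, in which the text and conf lists are aligned entry by entry.

```python
def filter_by_confidence(data, min_conf=20):
    """Keep only the recognized words whose confidence is at least min_conf."""
    kept = []
    for text, conf in zip(data["text"], data["conf"]):
        # Confidence may arrive as int, float, or string depending on the
        # pytesseract version; -1 marks entries that are not words.
        if text.strip() and float(conf) >= min_conf:
            kept.append(text)
    return kept


def read_confident_words(image, min_conf=20, config=""):
    """OCR an image and return only the confident words (needs pytesseract)."""
    import pytesseract
    from pytesseract import Output

    data = pytesseract.image_to_data(image, config=config,
                                     output_type=Output.DICT)
    return filter_by_confidence(data, min_conf)
```

The right threshold varies from image to image, so it is worth printing the raw confidence values once before settling on a number.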

OCR on Raspberry Pi

Although the results on the Pi are not very satisfactory when using Tesseract alone, it can be combined with OpenCV to filter out noise in the image, and with the configuration techniques above it gives decent results if the image is good. We tried about 7 different images using Tesseract on the Pi and got close results by adjusting the mode for each picture accordingly. The complete project file can be downloaded as a Zip at this location which contains all the test images and basic code.

Let's try one more example, a sign board, on the Raspberry Pi. This time it's pretty straightforward. The code is given below.

import pytesseract

from PIL import Image

import cv2

img = cv2.imread('4.png', cv2.IMREAD_COLOR) # Open the image to recognize characters

#img = cv2.resize(img, (620, 480)) # Resize the image if necessary

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY) # Convert to grayscale to reduce detail

gray = cv2.bilateralFilter(gray, 11, 17, 17) # Blur to reduce noise

original = pytesseract.image_to_string(gray, config='')

#test = pytesseract.image_to_data(gray, lang=None, config='', nice=0) # Get confidence levels if required

#print(pytesseract.image_to_boxes(gray))

print(original)

The program opens the file we need to recognize characters from and then converts it to grayscale. This will reduce the details in the image and make it easier for Tesseract to recognize the characters. To further reduce the background noise, we blur the image using a bilateral filter from OpenCV. Finally, we start recognizing characters from the image and print them on the screen. The final result will look like this.

I hope you understood this tutorial and enjoyed learning something new. OCR is used in many places, such as self-driving cars, license plate recognition, and navigation based on road sign recognition. Using it on a Raspberry Pi opens the door to more possibilities because the Pi is portable and compact.

Complete code

import pytesseract

from PIL import Image

import cv2

img = cv2.imread('4.png', cv2.IMREAD_COLOR) # Open the image to recognize characters

#img = cv2.resize(img, (620, 480)) # Resize the image if needed

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY) # Convert to grayscale to reduce detail

gray = cv2.bilateralFilter(gray, 11, 17, 17) # Blur to reduce noise

original = pytesseract.image_to_string(gray, config='')

#test = pytesseract.image_to_data(gray, lang=None, config='', nice=0) # Get confidence levels if required

#print(pytesseract.image_to_boxes(gray))

print(original)

# Optional post-processing, left disabled: keep only the letters in the result

'''
required = 'abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ'

final = ''

for c in original:

    for ch in required:

        if c == ch:

            final = final + c

            break

print(test)

for a in test:

    if a == " ":

        print("Found")
'''

